What is an SEO site audit

There are 4 variables that affect how much traffic and sales you get from Google.

- The target audience. How many search queries your potential customers enter and how often, what tasks they want to solve, and what their most common buying scenario is (whether they always buy after clicking through from Google or prefer to buy on social networks).

- Google. How the Google algorithm works at a particular point in time: how it crawls, indexes, and ranks sites, and which actions it penalizes or rewards.

- Your competitors. How long their site has existed and how much authority it has already built through links and traffic from other sites, how good their team, strategy, and resources are, and whether they use other traffic sources (for example, branded offline and online advertising) to improve their search positions.

- You yourself. How much better than your competitors you understand how Google works and what your target audience needs, how long your site has been around, and how good your team, strategy, and resources are.

This step-by-step checklist will help you analyze and improve your website. But remember that this alone is often not enough to be first in a niche. In addition to improving the site, study your target audience and competitors, and keep up with Google updates.

When you need an SEO audit

1. Before launching the site

It is at the time of creating a site that you set its structure, URLs, links, meta tags and other important elements. Auditing a site before publishing it will help you avoid mistakes that can slow down the growth of its visibility in search.

2. Before buying and selling a website

If you sell a site, then it is important for you to bring it into a marketable state 🙂 If you buy, then it is important for you to assess all its vulnerabilities and errors that may lead to problems in the future. The higher the transaction value, the more expedient it is to spend time on a thorough audit.

3. Before and after site migration

Site migration is an even more complex process than launching. It is one of the most dangerous operations, after which the site can easily lose its positions due to technical errors. You need to audit two versions of the site at once, the old and the new. At the same time, you need to check that link weight and content are transferred correctly. Use our website migration checklist to avoid the most common mistakes during migration.

4. After making big changes to the site

Major changes are those that affect more than 20% of pages or concern the most valuable pages in terms of traffic and conversions. Examples of such changes: changing URLs, changing the design of the site, adding new scripts and styles, changing the internal linking, deleting and adding pages, adding new language versions of the site.

In all these situations there is a risk that, at a glance, you will not notice the problems that Googlebot and users run into when they visit the new version of the site.

5. To regularly search for problems

Even if you work on the site slowly, periodically making small changes, problems can accumulate over time and slow down your growth. The problems that accumulate most often are broken links, redirects, duplicate and low-value pages, and keyword cannibalization.

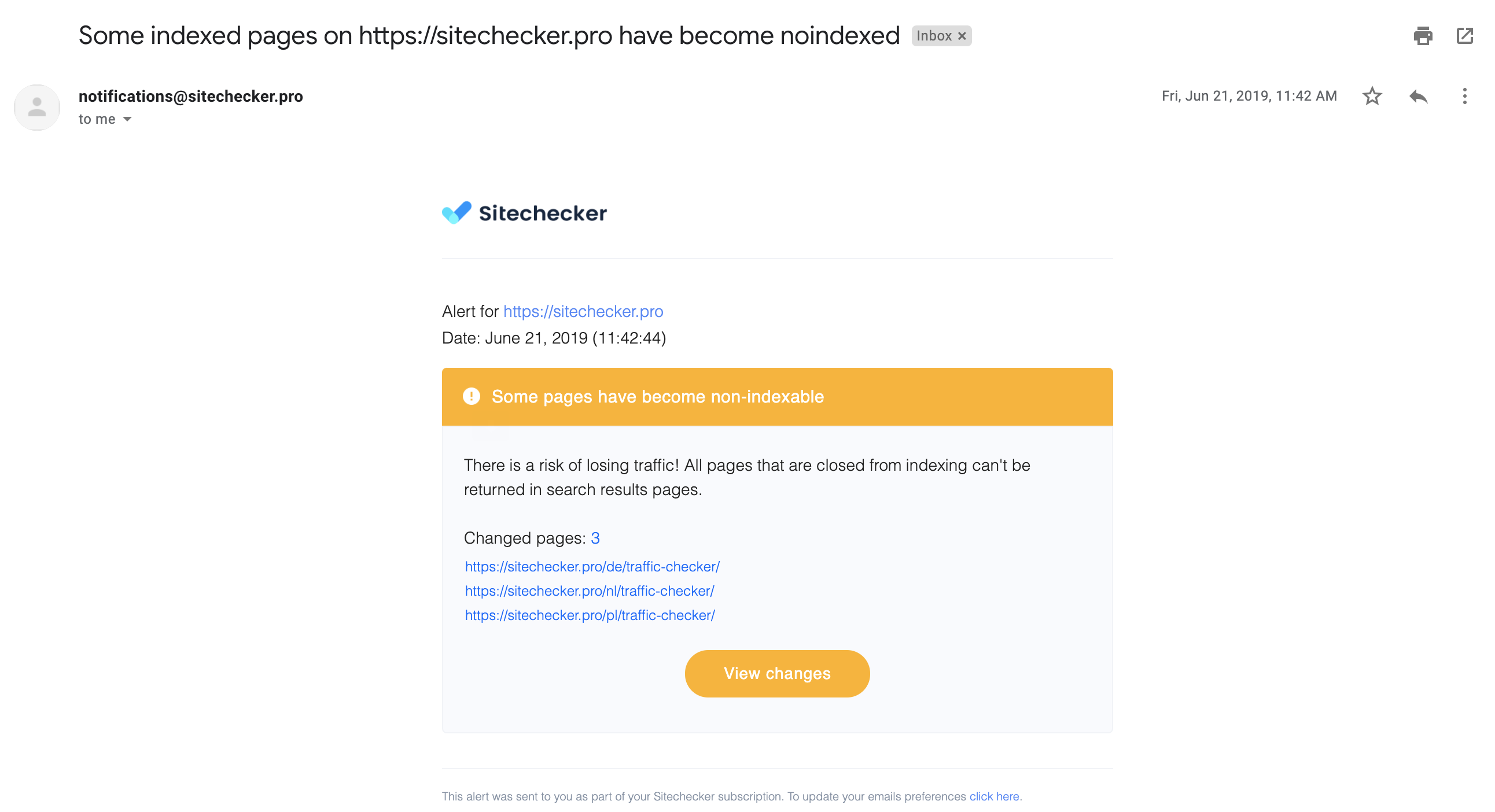

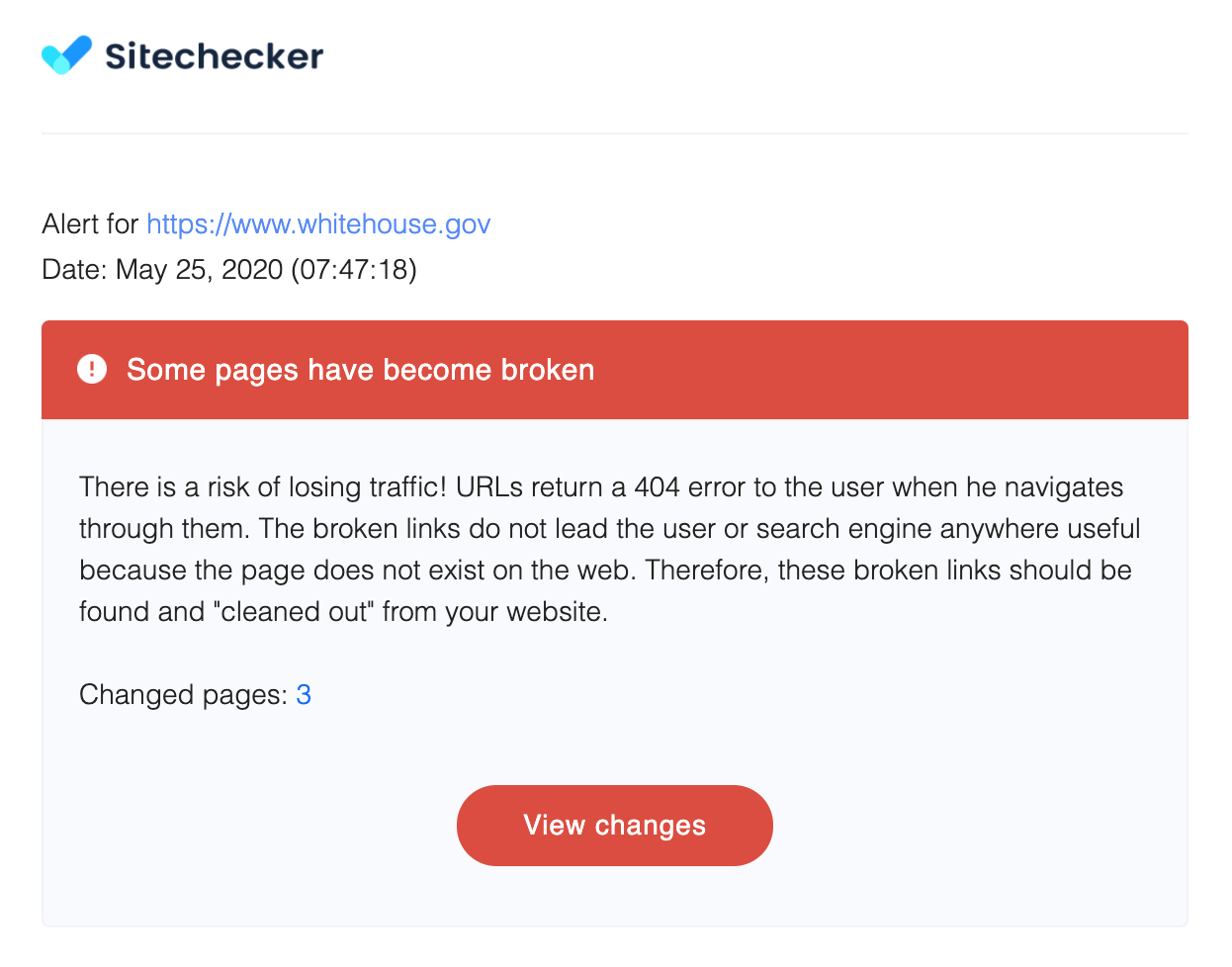

Therefore, it is important to make it a rule to conduct an audit once a month, quarter, half-year, or year, depending on how actively you work on the site. But manual audits are increasingly becoming a thing of the past. Some tools can scan your site once a month, week, or day and send you notifications when they find technical errors on the site.

Here is an example of the email that Sitechecker sends when it finds pages that have been closed from the index.

6. To create or adjust an SEO strategy

At this stage, the SEO audit is performed using data from Google Analytics and Google Search Console. We need data on the performance of pages, keywords, and backlinks in order to identify where we can get the fastest increase in traffic and conversions, which pages we need to prioritize, and which, on the contrary, should be removed or merged.

19 steps for a complete SEO audit

1. Check if the site is working

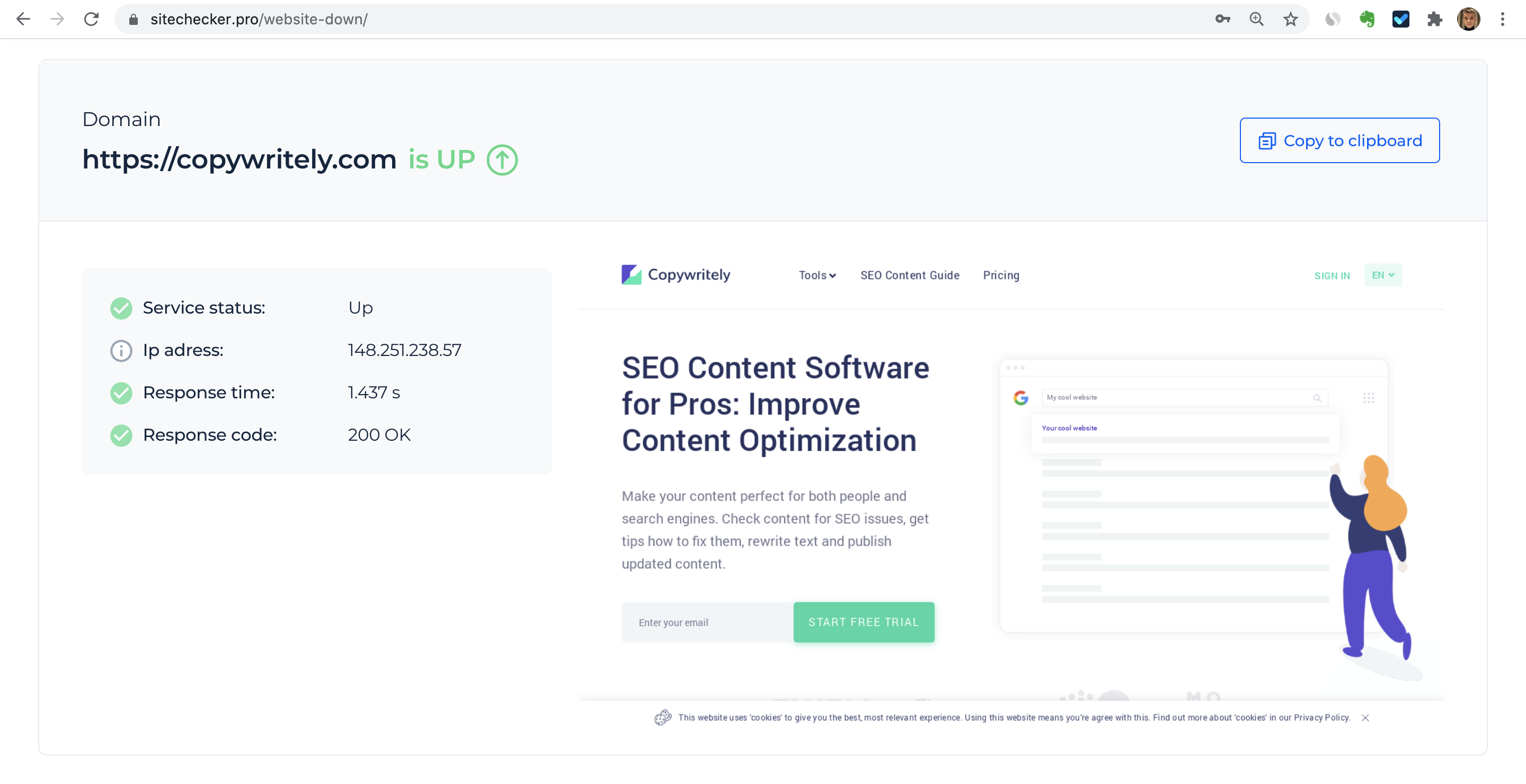

To do this, simply go to the site from any device and make sure the site loads. After that, check if the website is down or up through the eyes of a robot.

If it is important to check the availability of a site in different countries, you can try the tool from Uptrends.

The site may not load for a variety of reasons: an expired domain, errors when updating plugins or the CMS, an outage or technical problems on the hosting provider's side, a DDoS attack, or a hacked site.
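If you want to script the "is it up?" check, here is a minimal Python sketch using only the standard library. The function names and the `audit-sketch/0.1` User-Agent string are my own placeholders, and the grouping of status codes into labels is an assumption rather than any official classification.

```python
import urllib.error
import urllib.request
from typing import Optional


def fetch_status(url: str, timeout: float = 10.0) -> Optional[int]:
    """Return the final HTTP status code for url, or None if no response arrived."""
    # The User-Agent string is a made-up placeholder, not a real crawler name.
    request = urllib.request.Request(url, headers={"User-Agent": "audit-sketch/0.1"})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code  # the server answered, but with a 4xx or 5xx code
    except OSError:
        return None  # DNS failure, refused connection, timeout, TLS error


def describe_status(status: Optional[int]) -> str:
    """Turn a status code into a rough label (the labels are an assumption)."""
    if status is None:
        return "unreachable"
    if 200 <= status < 300:
        return "up"
    if 400 <= status < 500:
        return "client error"
    if status >= 500:
        return "server error"
    return "unexpected"
```

Calling `describe_status(fetch_status("https://domain.com/"))` on a schedule gives you a crude uptime monitor. Note that `urlopen` follows redirects, so the code you get back belongs to the final URL, not necessarily the one you typed.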

Additionally, using an SEO Grader can help you analyze how these issues impact your site’s SEO score. It evaluates technical factors like site accessibility and performance, giving you insights into how well your site is optimized.

2. Check if the site is in the SERP

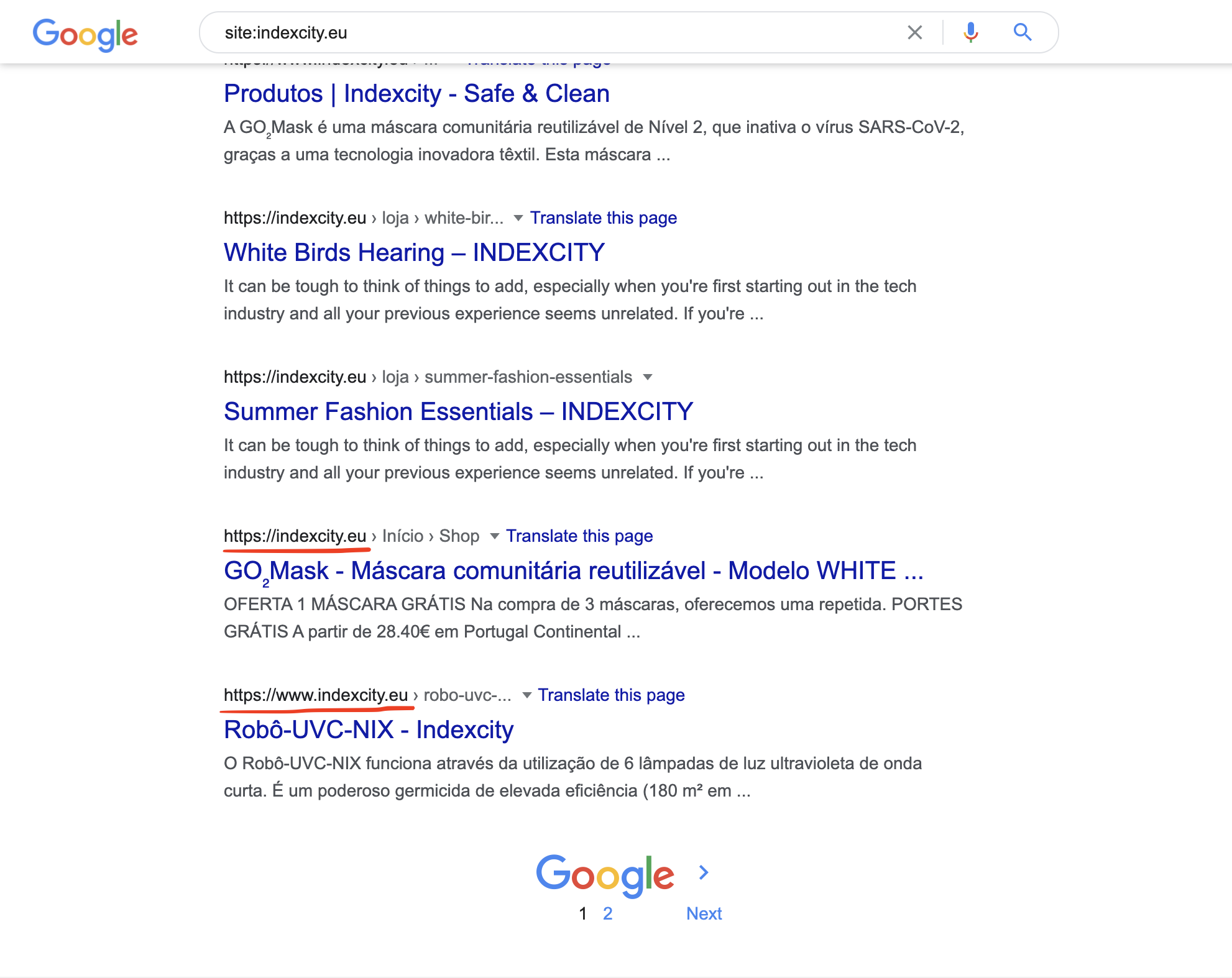

At this stage, we are only looking at the presence of the site on the search results page, without evaluating any errors. Type site:domain.com (with no space after the colon) into a Google search. We will use this method often to find various problems.

The site may be absent from the search for a variety of reasons.

- The site is too new and Google hasn’t found out about it yet;

- There is no or little content on the site;

- You have closed the site from indexing and/or crawling by a search bot;

- The site has been hit by an algorithmic filter or a penalty.

If you are faced with this problem, then you need to conduct a separate audit of the reasons for the absence of the site in the search results. And after fixing it, you can move on to the next steps.

3. The site has only one working version

If the site has several working versions (that is, they have a status code of 200), then this can lead to the appearance of duplicates in Google, indexing of pages on different versions of the domain, spreading the link weight between several versions of the domain.

Of the four domain versions:

- http://domain.com

- https://domain.com

- http://www.domain.com

- https://www.domain.com

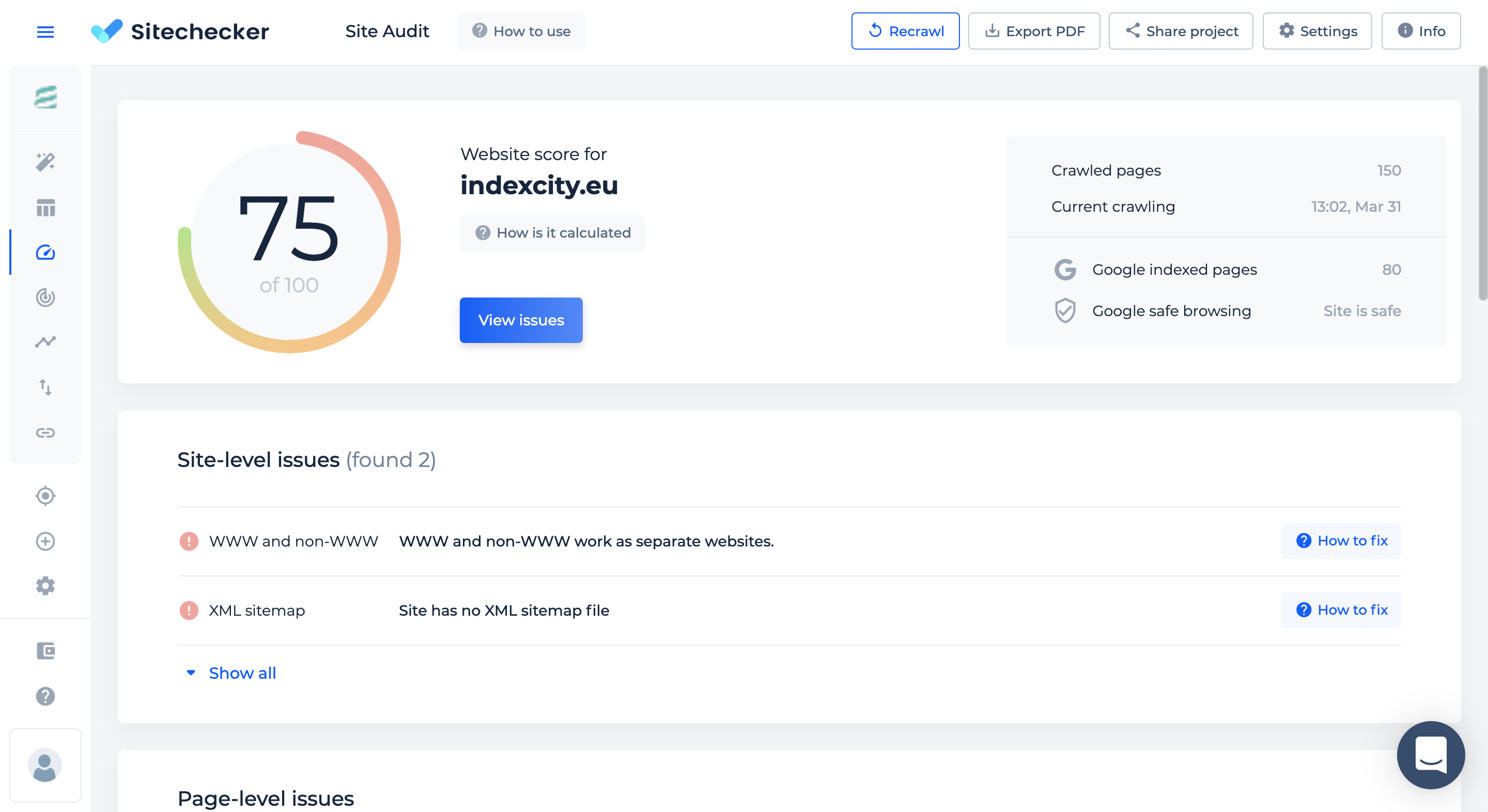

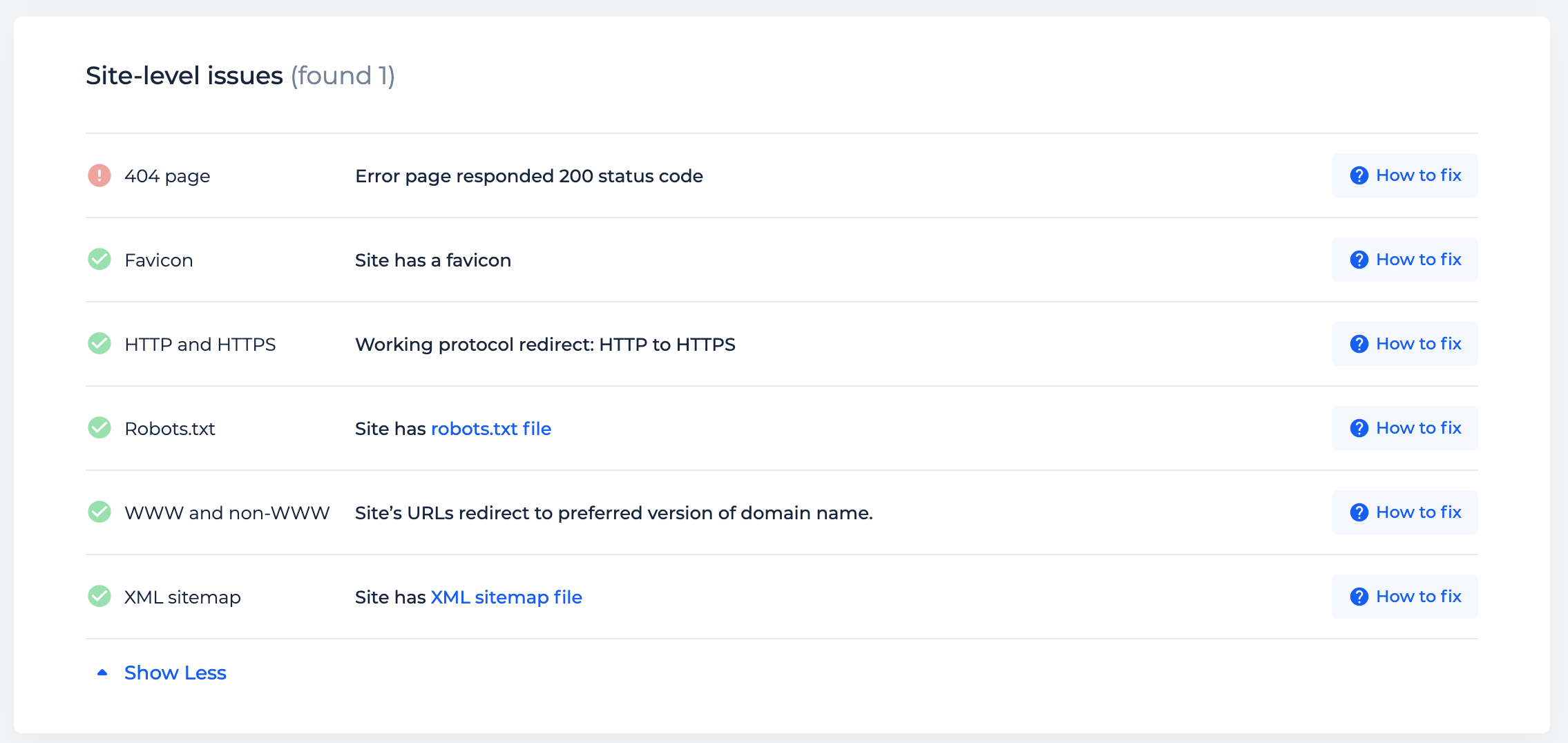

Only one version should have status code 200, and the remaining three should be redirected to the main one. Other errors can lead to duplicate pages, but problems with redirects between HTTP and HTTPS, WWW and non-WWW affect the entire site. Sitechecker can help identify such problems.
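The rule above can be expressed as a small check. This is a sketch under my own assumptions: the helper names are hypothetical, the input is a dictionary you fill in yourself from requests that do not follow redirects, and I treat only 301 and 308 as acceptable and require each redirect to go straight to the main version, which is stricter than what the text above demands.

```python
from typing import Dict, List, Optional, Tuple


def domain_variants(domain: str) -> List[str]:
    """Build the four protocol and www combinations for a bare domain."""
    bare = domain[4:] if domain.startswith("www.") else domain
    return [
        f"{scheme}://{host}/"
        for scheme in ("http", "https")
        for host in (bare, "www." + bare)
    ]


def check_single_version(
    responses: Dict[str, Tuple[int, Optional[str]]]
) -> List[str]:
    """responses maps each variant to (status code, Location header or None).

    Returns a list of human-readable problems; an empty list means OK.
    """
    main = [url for url, (status, _) in responses.items() if status == 200]
    if len(main) != 1:
        return [f"expected exactly one version with status 200, found {len(main)}"]
    target = main[0]
    problems = []
    for url, (status, location) in responses.items():
        if url == target:
            continue
        if status not in (301, 308):  # assumption: only permanent redirects pass
            problems.append(f"{url} returns {status} instead of a permanent redirect")
        elif location != target:  # assumption: no intermediate hops allowed
            problems.append(f"{url} redirects to {location}, not directly to {target}")
    return problems
```

For example, if `https://domain.com/` answers 200 and the other three variants answer 301 with `Location: https://domain.com/`, the function returns an empty list.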

4. The site is loaded from different devices

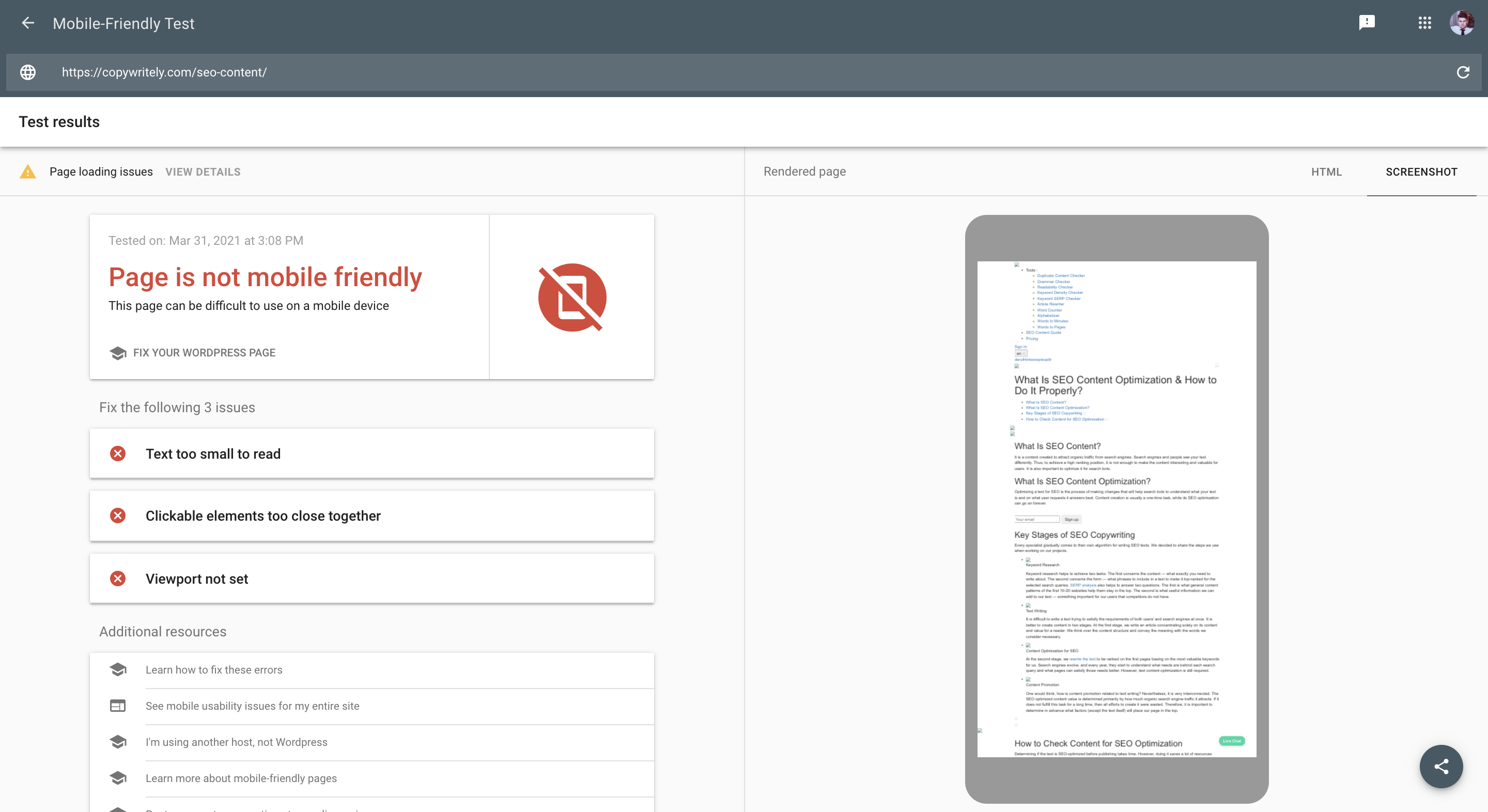

To do this, you also need to look at the site with your own eyes and the eyes of a Google robot. Go to the site from your mobile and run the test in the Mobile-Friendly Test tool.

For example, from my mobile, I see that the page https://copywritely.com/seo-content/ is loading fine.

But when I check it in the tool, I see that the page is not mobile-friendly.

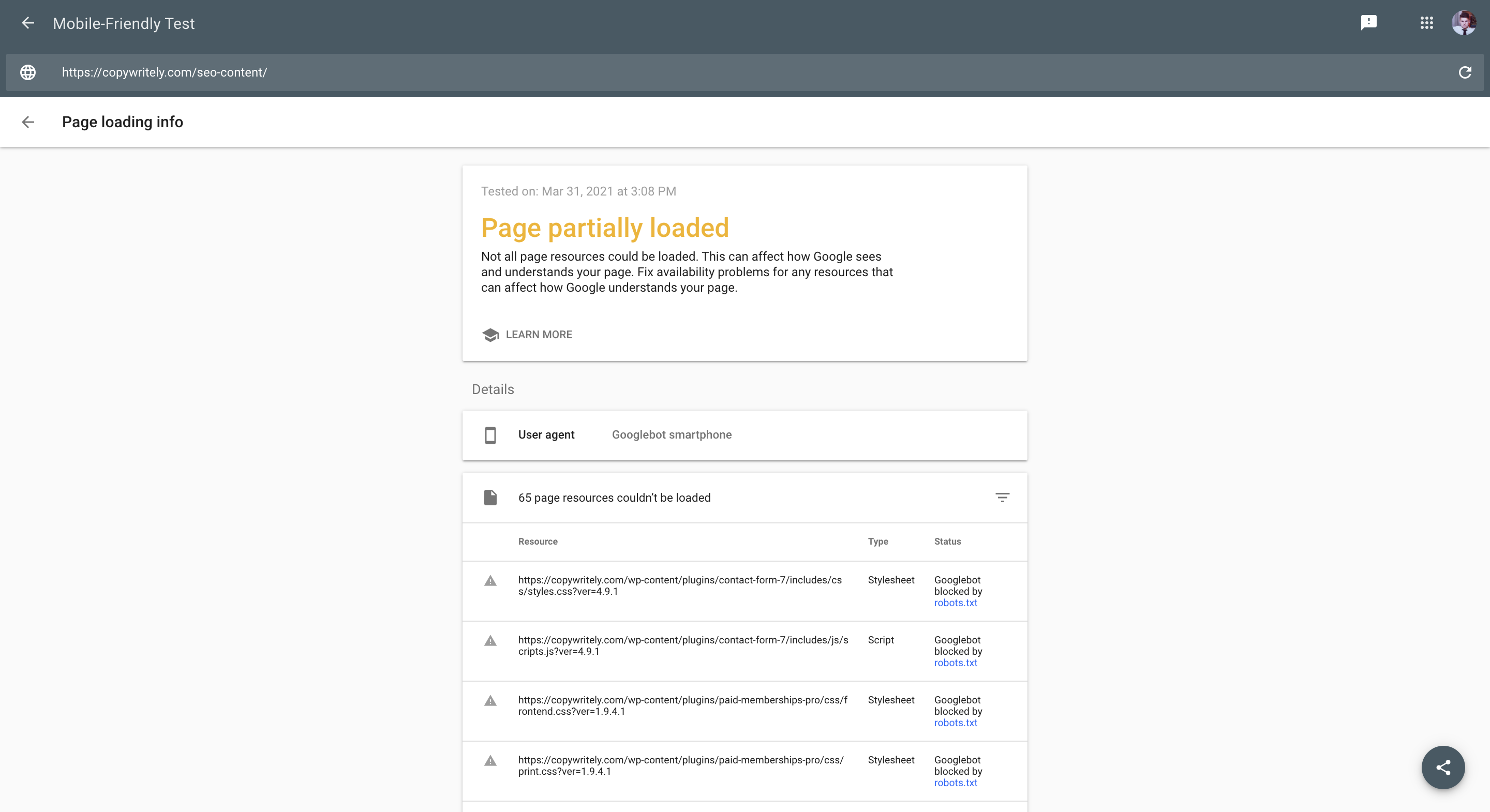

If you go to the details of the report, you can see all the problems that the tool found. Some images and CSS, JS files are closed from crawling in robots.txt. Therefore, the Googlebot does not see the page as the end-user sees it.

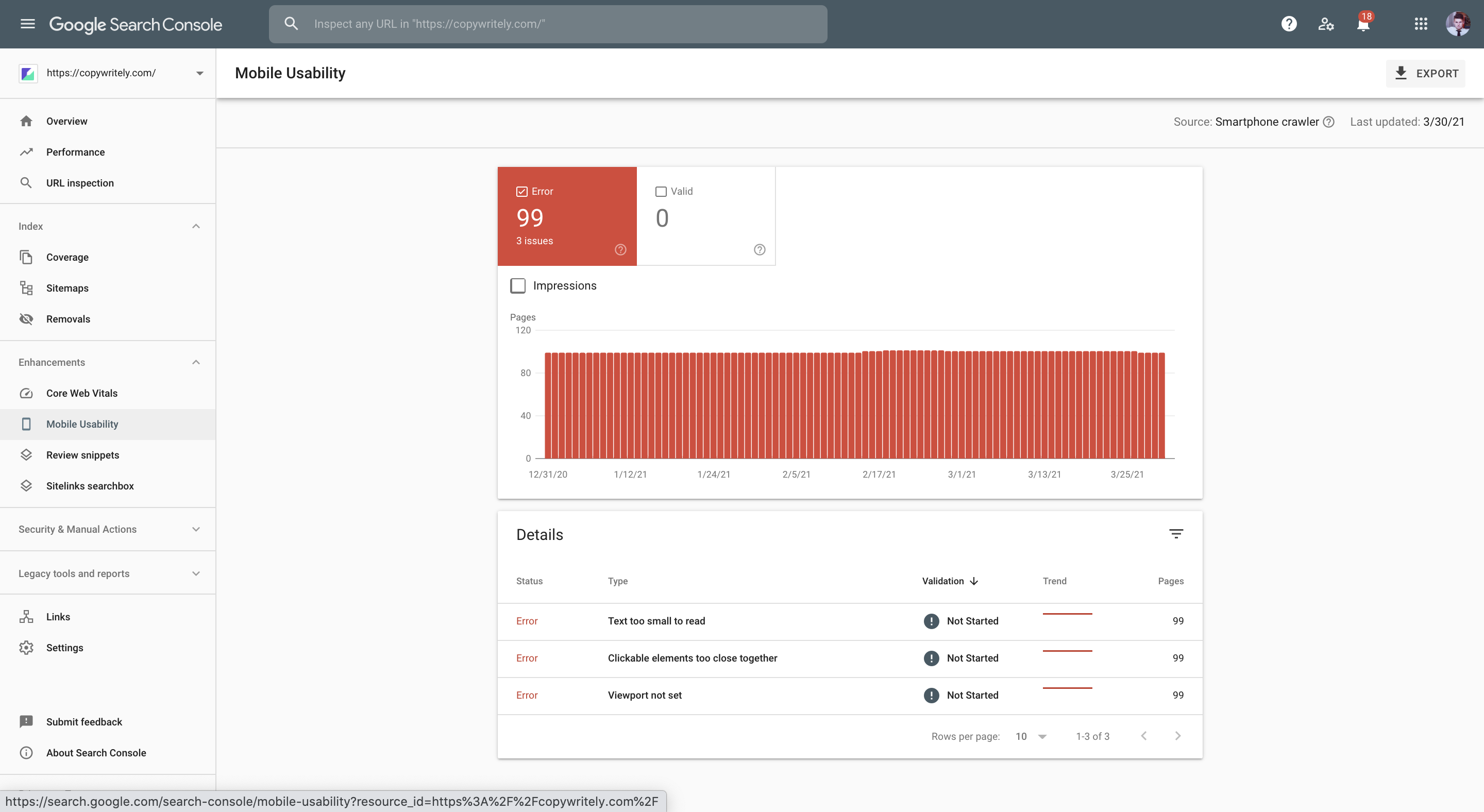

If you’ve already added your site to Google Search Console, you can see a summary of responsive issues across all pages.

It is also important that the tool can show you errors that you yourself would not have noticed. For example, clickable elements are too close to each other.

5. The site works on the HTTPS version

Until recently, the introduction of HTTPS was only a recommendation of Google. Now, this is almost a mandatory item, even for sites that do not store user information and on which there are no transactions.

Although a single visit to one of your unprotected websites may seem benign, some intruders look at the aggregate browsing activities of your users to make inferences about their behaviors and intentions and to de-anonymize their identities.

For example, employees might inadvertently disclose sensitive health conditions to their employers just by reading unprotected medical articles.<...>

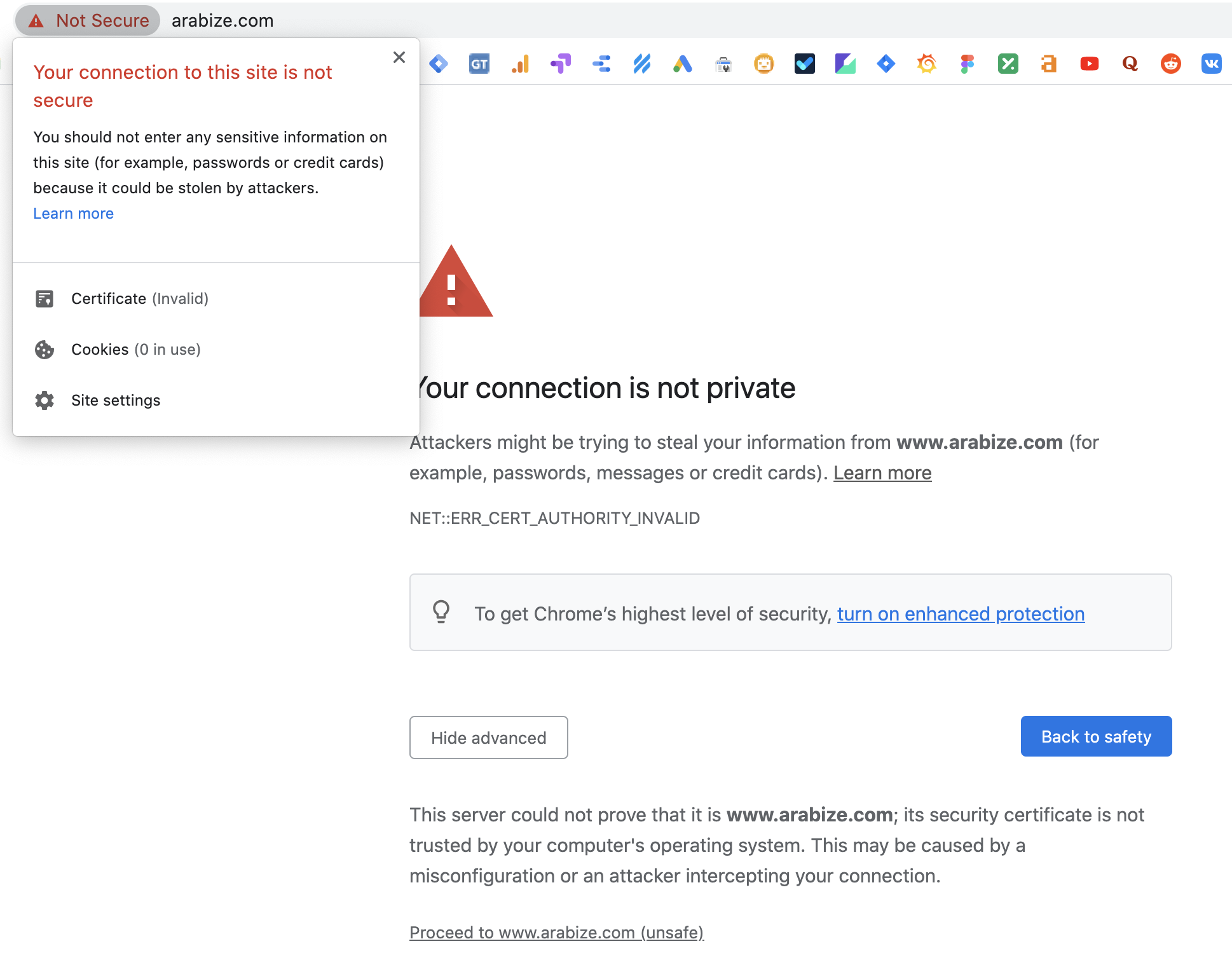

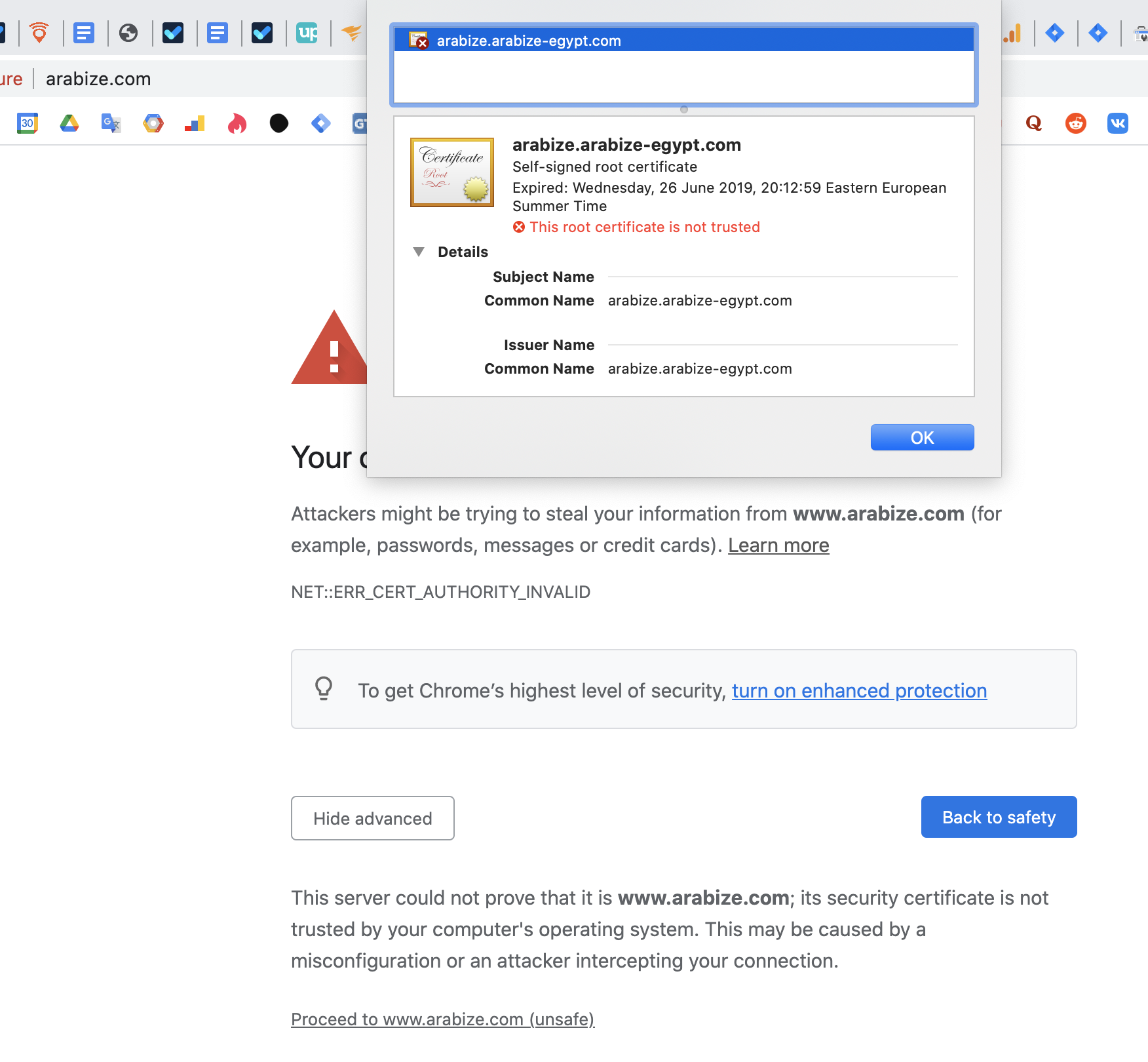

In this case, it is important not only that the HTTPS certificate is present, but also that it is valid. Almost all browsers (I checked in Google Chrome, Opera, Safari) show a notification about certificate problems. As a rule, you can still get to the site, but after another two clicks.

If users come to your site for the first time, then they are unlikely to want to go to the resource after such a browser notification.

The reason for the invalid certificate can also be easily found out. You need to click on the Not secure message in the browser line and go to the details of the SSL certificate.

I use the Let’s Encrypt certificate on all sites. Many hosting providers already include automatic issuance and renewal of these certificates as a default service. Ask your hoster about this.
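Certificate expiry is easy to watch from a script. Below is a rough Python sketch built on the standard `ssl` module; the function names are mine, and how many days of warning you want before expiry is up to you.

```python
import socket
import ssl
from datetime import datetime, timezone


def days_left(not_after: str, now: datetime) -> int:
    """Whole days between now and the certificate's notAfter timestamp.

    not_after uses the format returned by getpeercert(),
    for example "Jan  5 09:34:43 2018 GMT".
    """
    expires = datetime.fromtimestamp(
        ssl.cert_time_to_seconds(not_after), tz=timezone.utc
    )
    return (expires - now).days


def fetch_not_after(host: str, port: int = 443, timeout: float = 10.0) -> str:
    """Open a TLS connection and return the certificate's notAfter field."""
    context = ssl.create_default_context()  # verifies the chain and the hostname
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["notAfter"]
```

A typical call would be `days_left(fetch_not_after("domain.com"), datetime.now(timezone.utc))`. If the certificate is already invalid, `wrap_socket` raises `ssl.SSLCertVerificationError` instead of returning, which is itself the signal you are looking for.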

6. The site is safe for users

The presence of HTTPS adds confidence to your site in the eyes of users but does not fully protect it against vulnerabilities. Malicious or unwanted software, or content that uses social engineering to manipulate your users, can sit on the site for a long time without your knowing about it.

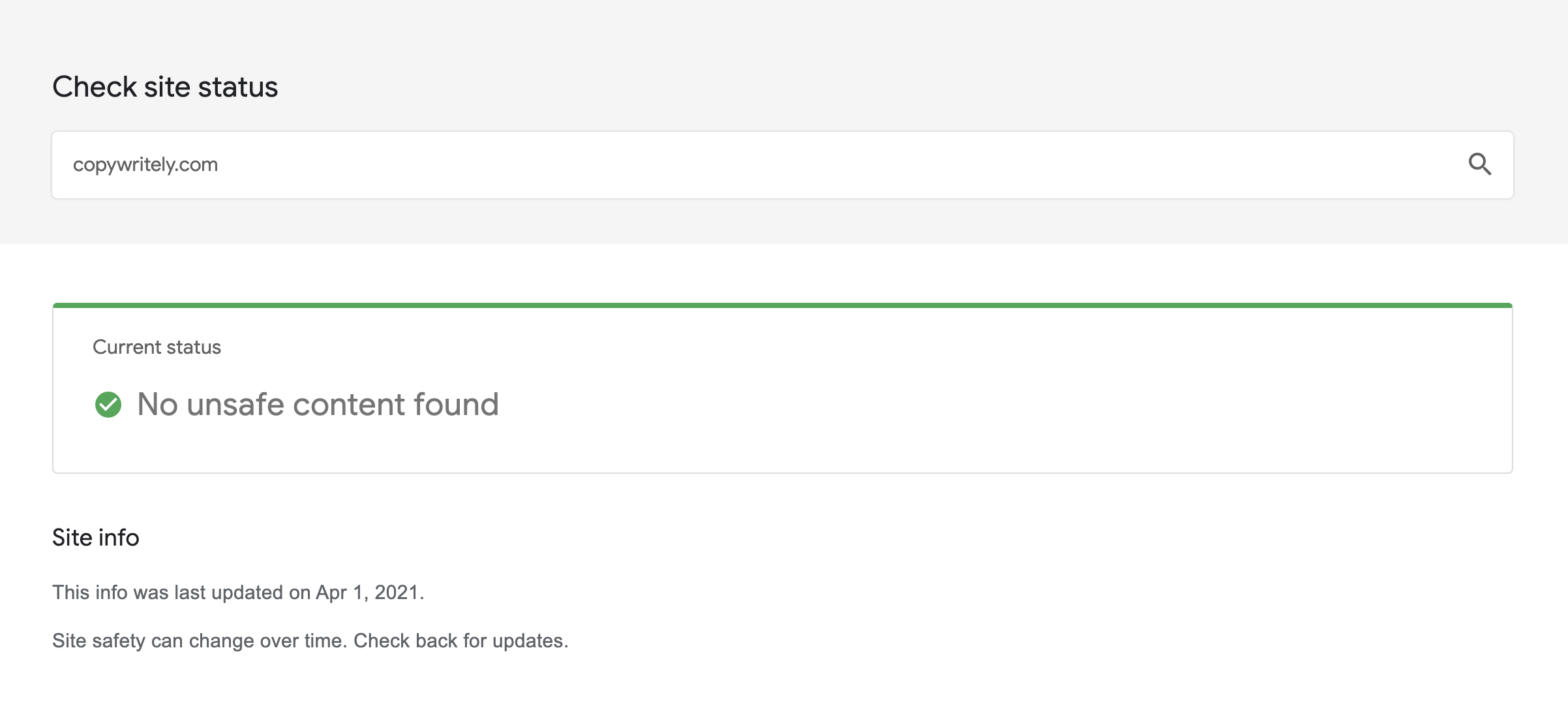

To quickly notify site owners of such problems, Google has created a special report in the Google Search Console “Security Issues”. You can also use the Google Transparency Report.

I faced this problem myself. My site was showing banner ads when I visited it from a mobile device. If you use WordPress, then this can also happen due to hacking of plugins or themes that you have installed. Use Google Help to learn more about how to secure your site.

7. The site is not on the blacklists

A blacklist is a list of domains, IP addresses, and email addresses that users complain about for sending spam. They are public and private. People can use these lists to block unwanted mailings.

If you send emails to your customers, you can easily end up on these lists, which reduces the effectiveness of your email marketing. If you also send product emails to users, then a clean domain reputation becomes critically important.

Examples of known blacklists:

- Spamhaus Block List (SBL);

- Composite Blocking List (CBL);

- Passive Spam Block List (PSBL);

- Spamcop;

- Barracuda Reputation Block List (BRBL).

On each of these sites, you can check if your site is on the list and what you need to do to remove it from the list. You can also use our blacklist checker that checks the presence of your site in many spam databases at once.

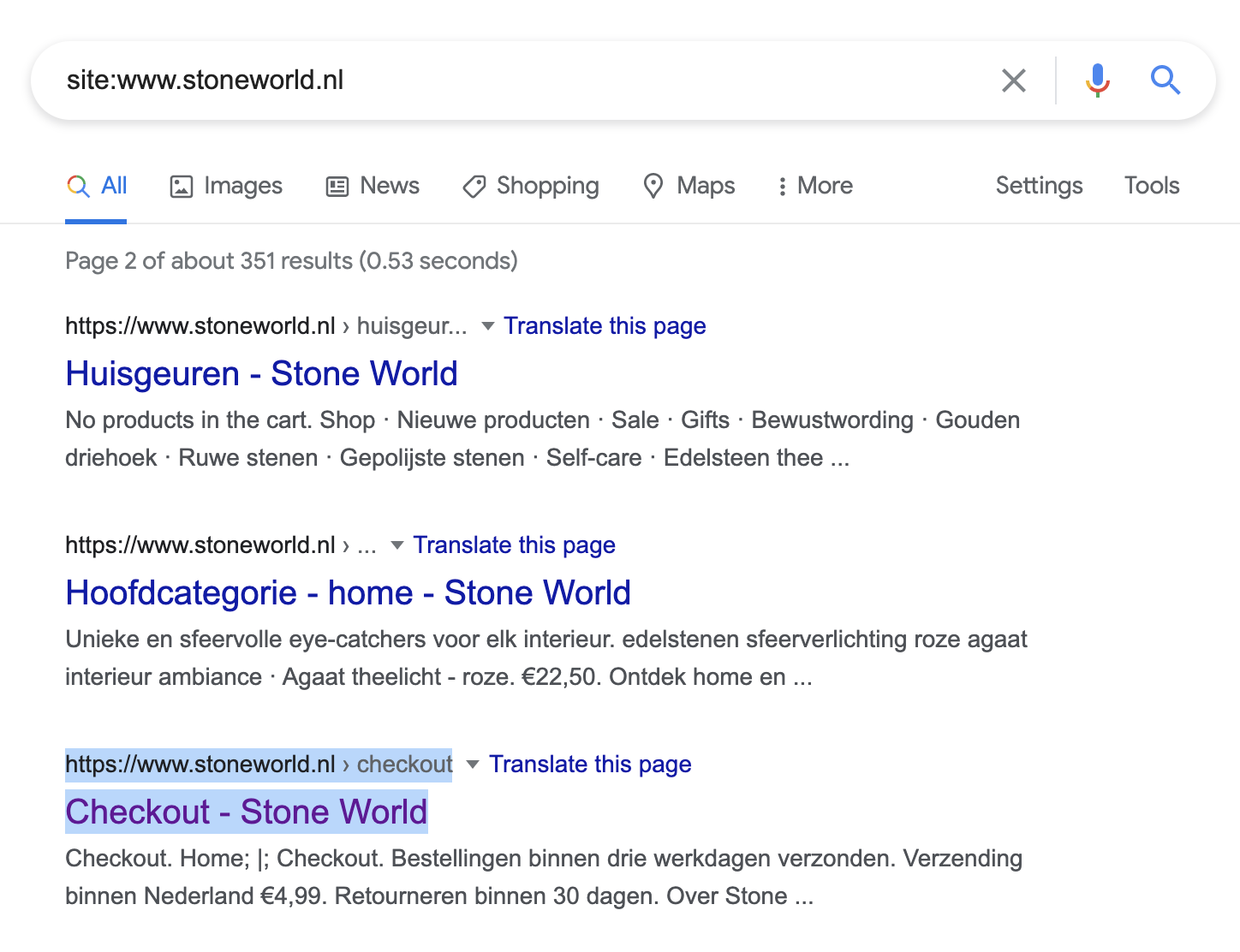

8. There are no extra pages in search results

In this step, we go through the SERPs with our own eyes to make sure that Google is not indexing unnecessary pages.

These pages include:

- duplicate pages;

- indexed search or filter pages (unless you intentionally index them);

- checkout pages;

- picture pages;

- any information pages that users are unlikely to search for.

Such pages can be indexed due to:

- the inattention of developers, administrators, content managers;

- problems with plugins, themes;

- hacking the site by intruders and generating pages for their own purposes;

- link building by attackers to non-existent URLs, unless you have configured a 404 server response for such pages.

If the site has been in the search results for a long time and generates traffic, then it is important to set up ongoing tracking of page indexing problems. The number of pages in the index can grow and fall both at your will (when you deliberately delete pages or add new ones) and as a result of the above errors.

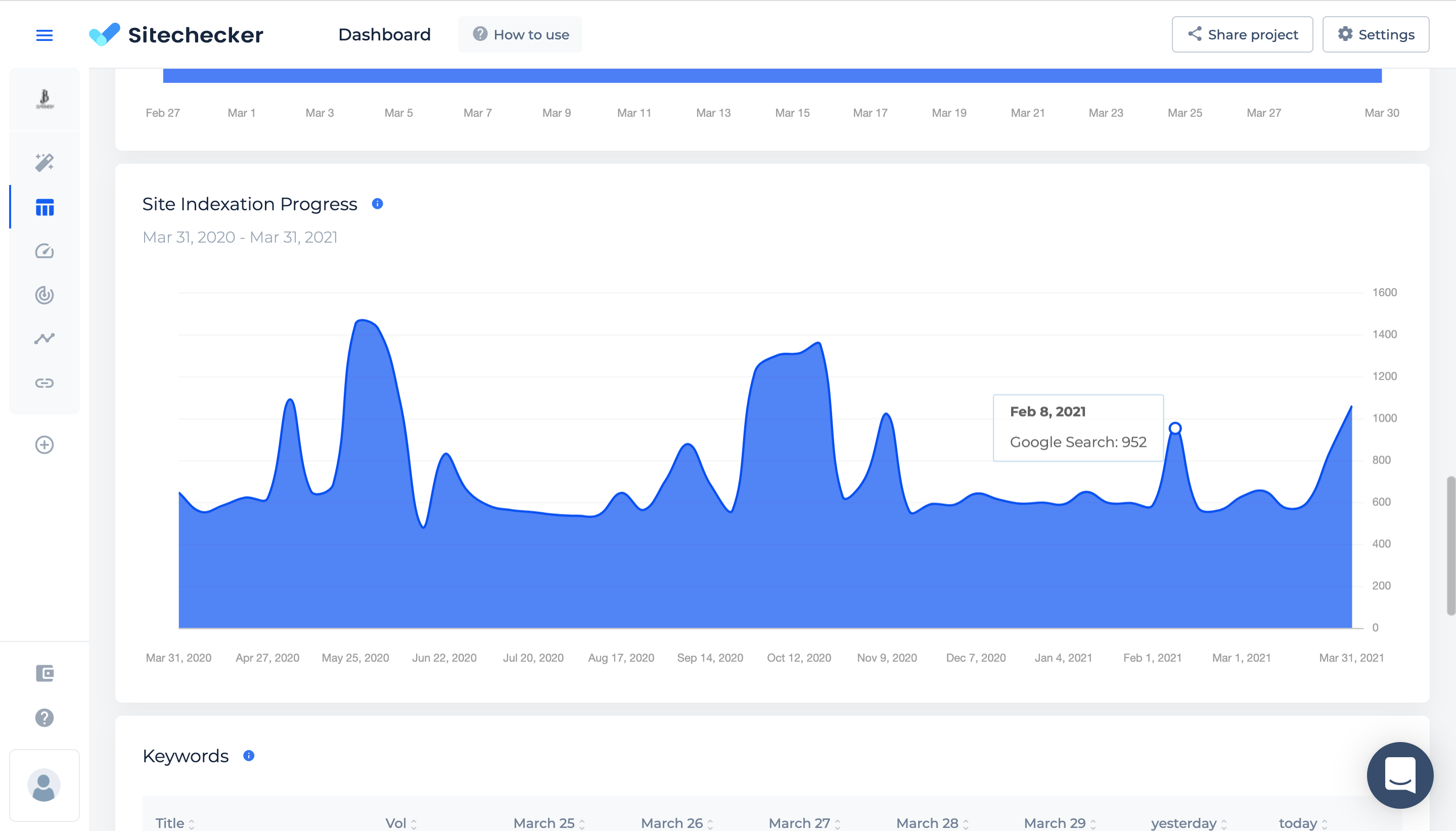

Sitechecker has a special chart on which you can assess whether everything is normal with the indexing of your site. Sharp spikes or drops in the number of indexed pages can serve as a trigger for a separate audit of what is new in the SERP or which pages have dropped out of it.

9. Check your robots.txt, sitemap.xml, and 404 server response settings

These settings help to partially insure the site against the above problems. Extra pages in the index are harmful not only because users get a negative experience when they land on them, but also because Googlebot will crawl the pages that matter less often.

The amount of time and resources that Googlebot can spend on one site is the basis of the so-called crawl budget. Please note that not all crawled pages on the site are indexed. Google analyzes them, consolidates them, and determines whether they need to be added to the index. The crawl budget depends on two main factors: the crawl capacity limit and crawl demand.<...>

<...>The resources that Google can allocate for crawling a particular site are calculated taking into account its popularity, uniqueness, value for users, as well as server capacity. There are only two ways to increase the crawl budget: by allocating additional server resources for crawling, or (more importantly) by increasing the value of the content posted on the site for Google Search users.<...>

Sitechecker will help you identify the absence of these settings, but will not write all the conditions for you yet. Make sure that the robots.txt file contains rules that prohibit the crawling of pages that are not relevant for search, the sitemap.xml file contains links to all significant pages, and when requesting a non-existent page, the server always gives a 404 status code.

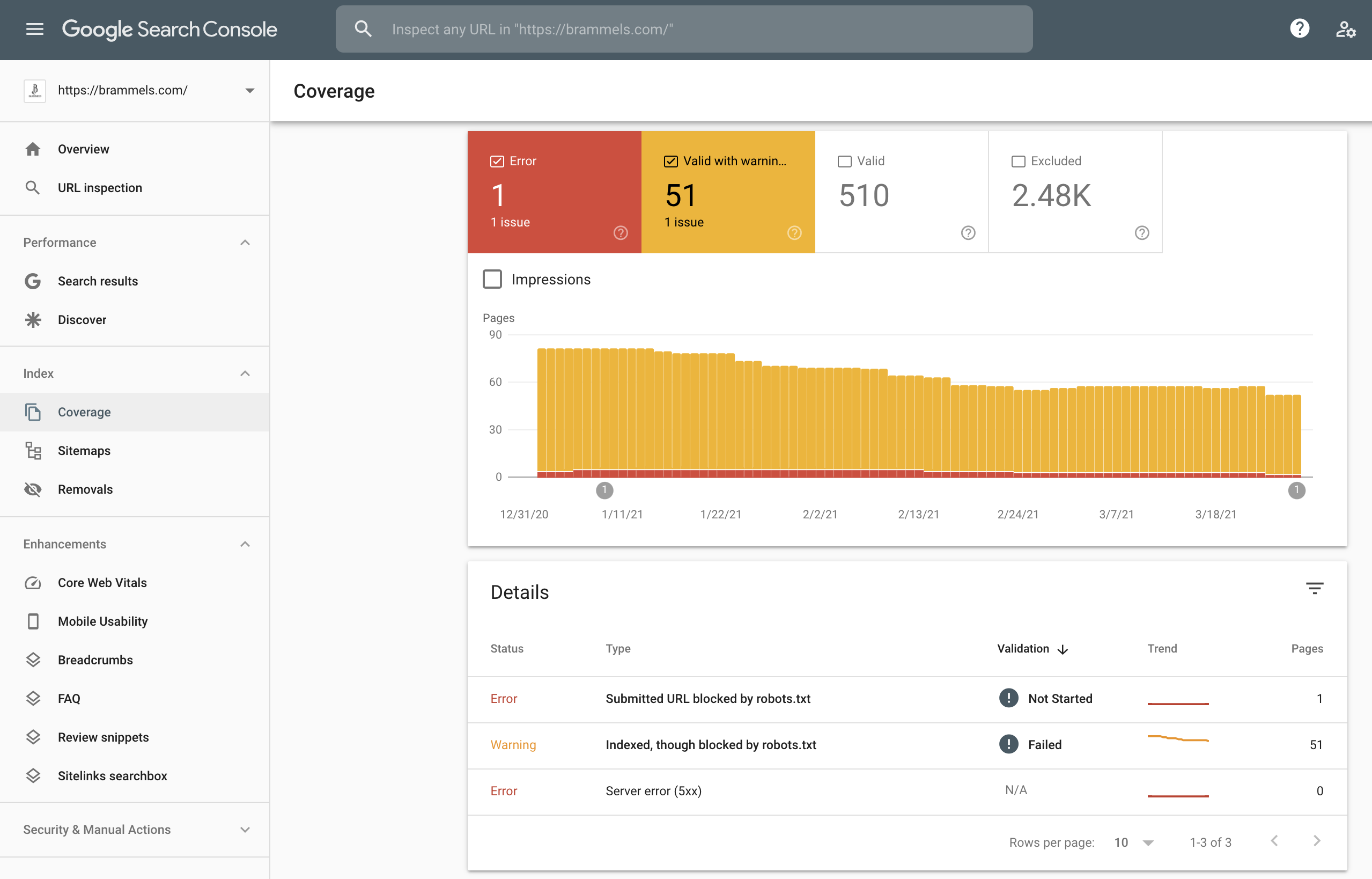

Also, check the errors in the Coverage report in Google Search Console to find crawl and indexing issues. Use the instructions from Google to understand what this or that status in the report means.
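To test robots.txt rules before you publish them, you can run a list of URLs through Python's built-in `urllib.robotparser`. This is only a sketch: the `blocked_urls` helper is my own name, and the standard-library parser is simpler than Googlebot's (I would not rely on it for wildcard patterns), so treat the result as a first approximation.

```python
from typing import List
from urllib.robotparser import RobotFileParser


def blocked_urls(
    robots_txt: str, urls: List[str], user_agent: str = "Googlebot"
) -> List[str]:
    """Return the URLs that the given robots.txt text forbids for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse text directly, no network call
    return [url for url in urls if not parser.can_fetch(user_agent, url)]
```

Run it twice: once with pages that should stay out of search (internal search, checkout) to confirm they are blocked, and once with your CSS, JS, and image URLs to confirm they are not, since blocked resources are what broke the mobile rendering in step 4.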

10. Check the presence and correctness of canonical tags

This is another important setting that insures you against problems with duplicate pages. Even if you did not find duplicates in the process of researching the search results for your site, this does not mean that adding canonical tags can be ignored.

You may not even know:

- that you have pages on your site that differ little from one another;

- that people put backlinks to different URLs of the same page.

And if you yourself do not choose which version of the page is the main one, and which version is the copy, then Googlebot will do it for you.

Google chooses the canonical page based on a number of factors (or signals), such as whether the page is served via http or https; page quality; presence of the URL in a sitemap; and any rel=canonical labeling. You can indicate your preference to Google using these techniques, but Google may choose a different page as canonical than you do, for various reasons.<...>

Pay special attention to the last point in the quote: “you can indicate your preference to Google using these techniques, but Google may choose a different page as canonical than you do, for various reasons.”

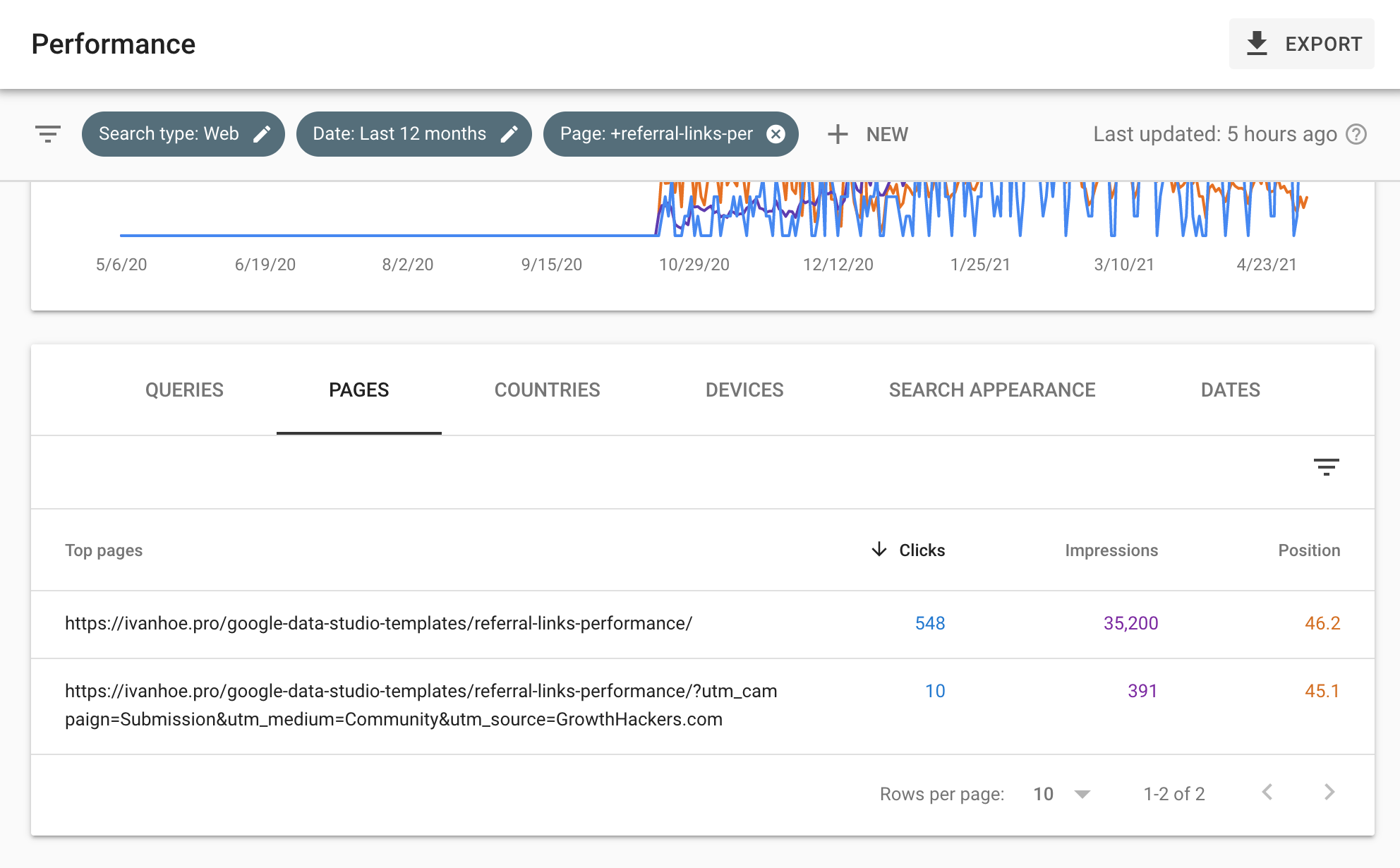

I learned this the hard way when I published one of the site’s pages on GrowthHackers. Even though the page contained the correct canonical tag, Google indexed the utm-tagged version of the page for a while.

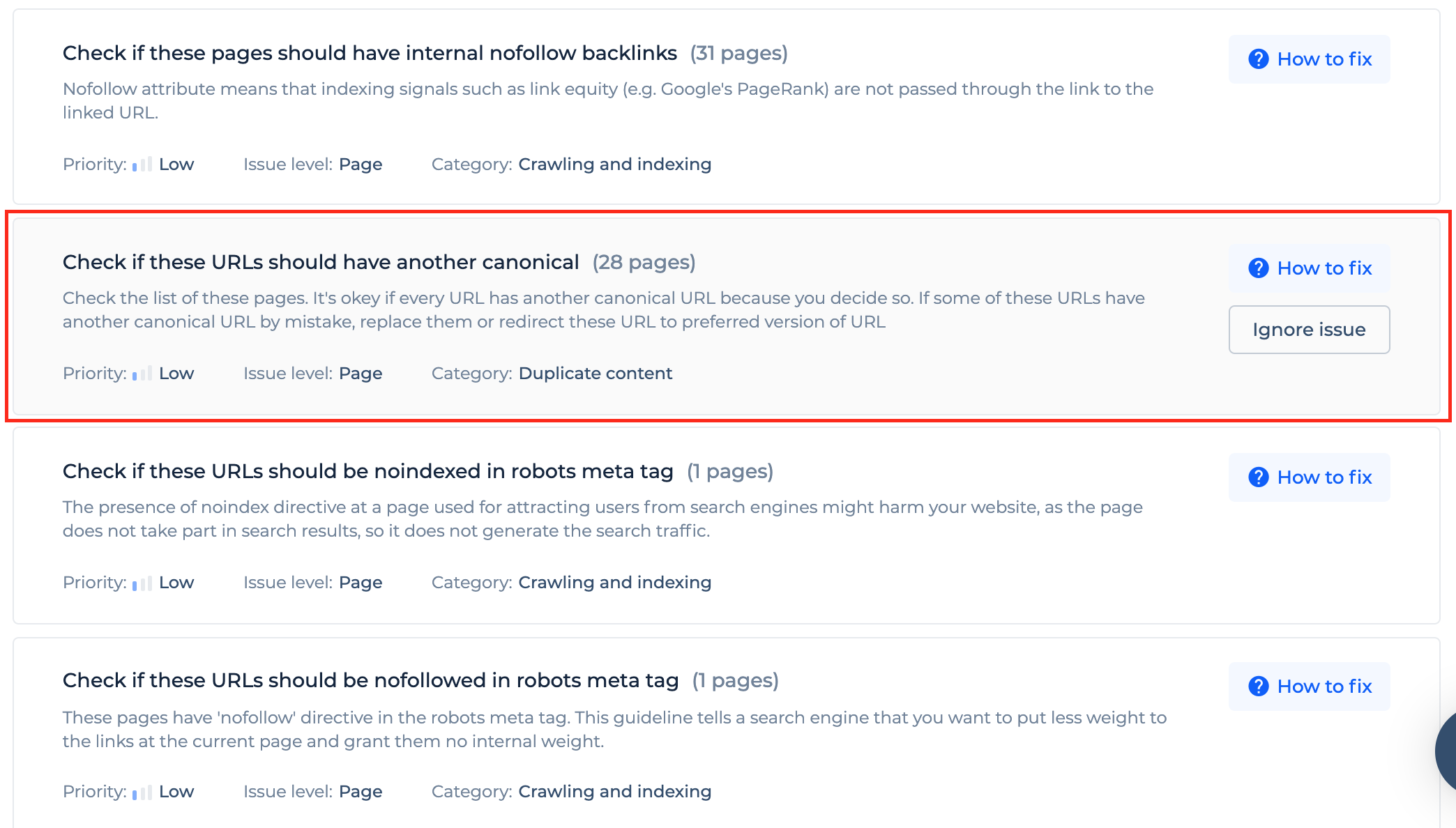

That is, canonical is a recommendation for the bot, one of the signals. You need to use it but remember about other signals as well. If you have pages without the canonical tag or pages with a different canonical tag than their URL, Sitechecker will find this during the site audit and tell you what to do with them.
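For a quick spot check of a single page, the canonical tag can be read with Python's standard `html.parser`. This is a minimal sketch with hypothetical names and status labels of my own; it compares URLs as plain strings, so it does not normalize trailing slashes or letter case.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> in a document."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel and attributes.get("href"):
            self.canonicals.append(attributes["href"])


def canonical_status(page_url: str, html: str) -> str:
    """Classify a page as missing / multiple / self / points elsewhere."""
    finder = CanonicalFinder()
    finder.feed(html)
    if not finder.canonicals:
        return "missing"
    if len(finder.canonicals) > 1:
        return "multiple"
    return "self" if finder.canonicals[0] == page_url else "points elsewhere"
```

A utm-tagged URL such as the one from my GrowthHackers example should come back as "points elsewhere", which is exactly what you want: the tagged copy declares the clean URL as its canonical.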

11. There are no broken links and redirect chains on the site

Working on a site often involves deleting pages, changing their URLs, and merging pages. As a result, broken links and redirects appear on the site.

Broken links are links to non-existent or broken pages. These can be links to deleted pages or simply mistakes made when typing the link address.

A redirect sends a user from the old page address to a new one.

In small numbers, neither broken links nor redirects are particularly dangerous from the point of view of Googlebot. Their main danger lies in the deterioration of the user experience: with broken links, the site visitor cannot get to the page they were promised, and with long chains of redirects, they are forced to spend more time waiting for the page to load.

Broken links and redirect chains become a big problem, including from the point of view of Googlebot, in two cases.

- When they are on high-value, high-traffic pages. In this case, Google will eventually remove the page from the search results and it will not be possible to get it back instantly.

- When there are a lot of them on the site. Firstly, it makes it difficult to crawl, since Google, without crawling some pages, will not get access to others that are linked from the first. Secondly, such pages eat up the crawling budget, and, accordingly, other valuable pages do not receive it.

Therefore, just like the availability of the home page, it is important to constantly monitor the appearance of new broken links and redirects in order to instantly fix critical errors.
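Here is a minimal Python sketch of how a crawler might trace a redirect chain. It is an illustration, not how any particular tool does it: `fetch` is a hypothetical callback you supply that returns the status code and `Location` header for one URL without following the redirect, and the hop limit of 10 is an arbitrary assumption.

```python
from typing import Callable, List, Optional, Tuple
from urllib.parse import urljoin

REDIRECT_CODES = {301, 302, 303, 307, 308}


def redirect_chain(
    start: str,
    fetch: Callable[[str], Tuple[int, Optional[str]]],
    max_hops: int = 10,
) -> List[Tuple[str, int]]:
    """Follow redirects from start, recording (url, status) for every hop.

    Stops at the first non-redirect response, on a loop, or after max_hops.
    """
    chain = []
    seen = set()
    url = start
    while url is not None and url not in seen and len(chain) < max_hops:
        seen.add(url)
        status, location = fetch(url)
        chain.append((url, status))
        if status in REDIRECT_CODES and location:
            url = urljoin(url, location)  # Location may be a relative path
        else:
            url = None
    return chain
```

A chain with more than two entries means the link passes through at least two redirects and is worth pointing directly at the final URL. A chain that ends in a 404 is a broken link.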

12. The site is loading fast

Back in 2010, Google announced that site speed had been included in the ranking formula. But our practical experience and a 2020 statement by Google employee Gary Illyes showed that the influence of this factor is insignificant.

The main value of high site speed is that it helps to better convert those users who have already visited your site, rather than helping you overtake other sites in the search results.

Regardless, you still need good speed if you are going to compete for the first place. The more traffic you have, the more profitable it is to invest in website acceleration, as this directly affects the number of conversions and income.

For Mobify, every 100ms decrease in homepage load speed worked out to a 1.11% increase in session-based conversion, yielding an average annual revenue increase of nearly $380,000. Additionally, a 100ms decrease in checkout page load speed amounted to a 1.55% increase in session-based conversion, which in turn yielded an average annual revenue increase of nearly $530,000.<...>

Use these tools to troubleshoot speed problems.

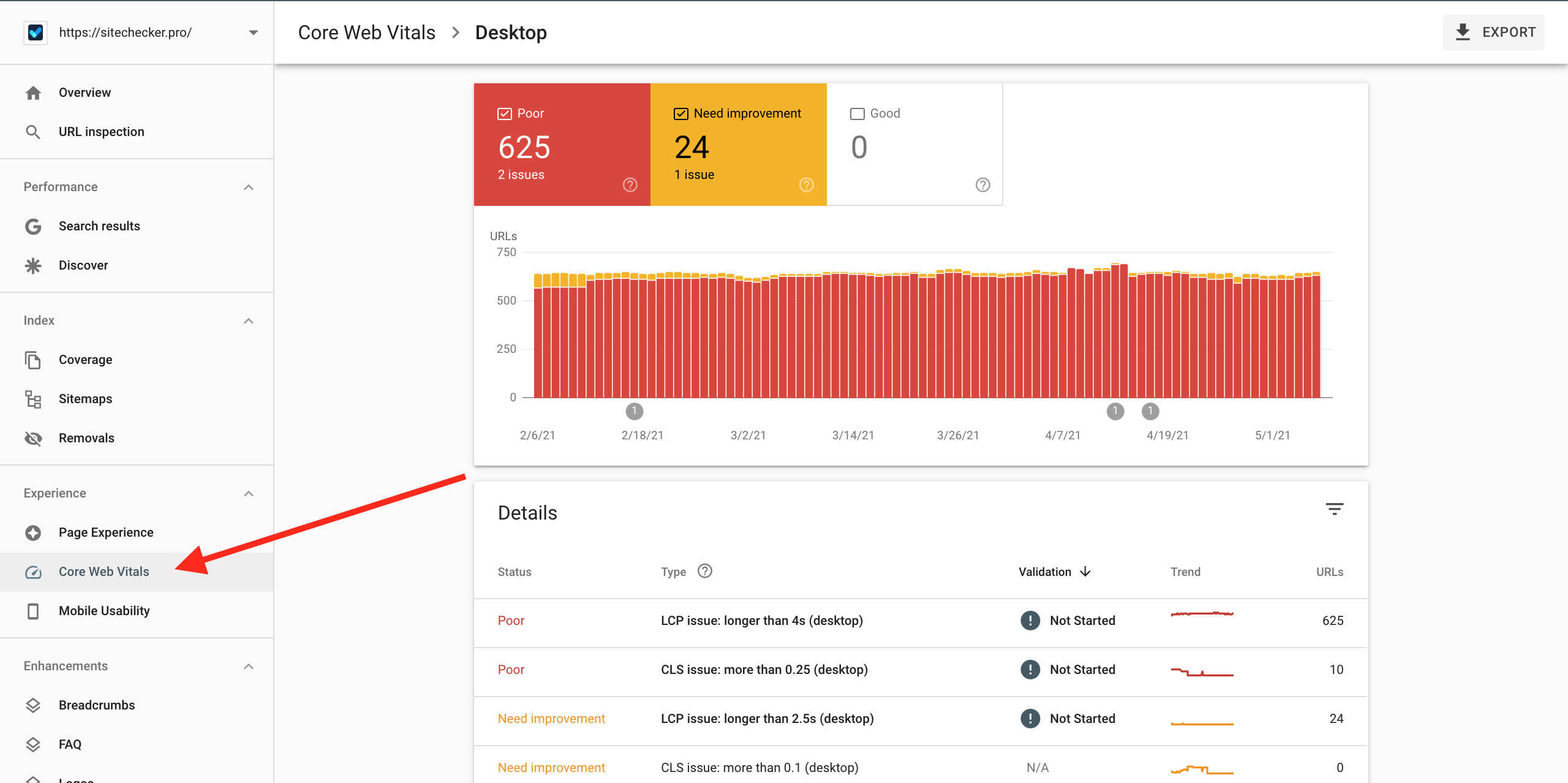

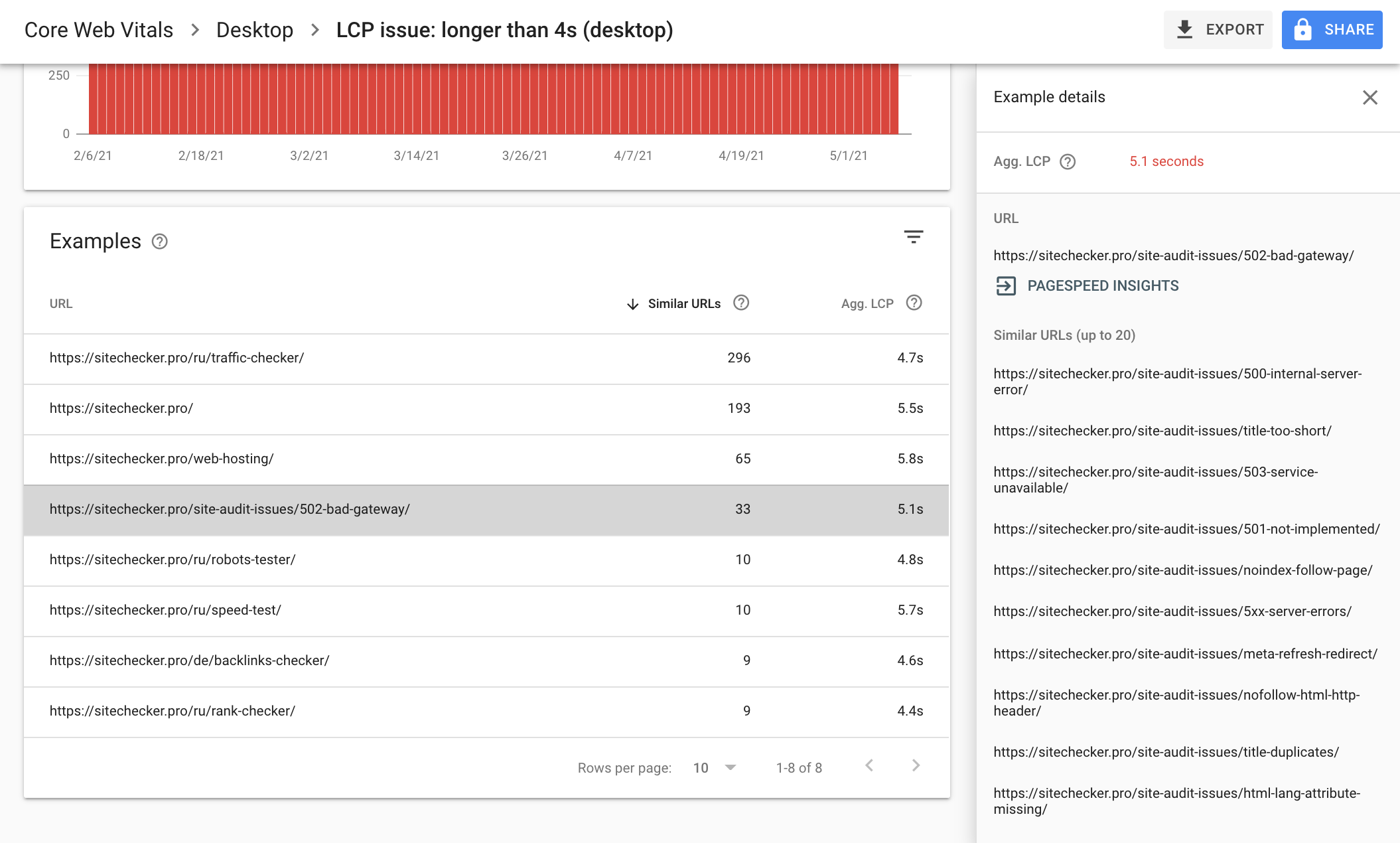

Core Web Vitals report in Google Search Console

This report is especially useful because the tool divides problem pages into groups. So you can immediately guess what general characteristics of all pages from one group need to be optimized in order to improve their speed.

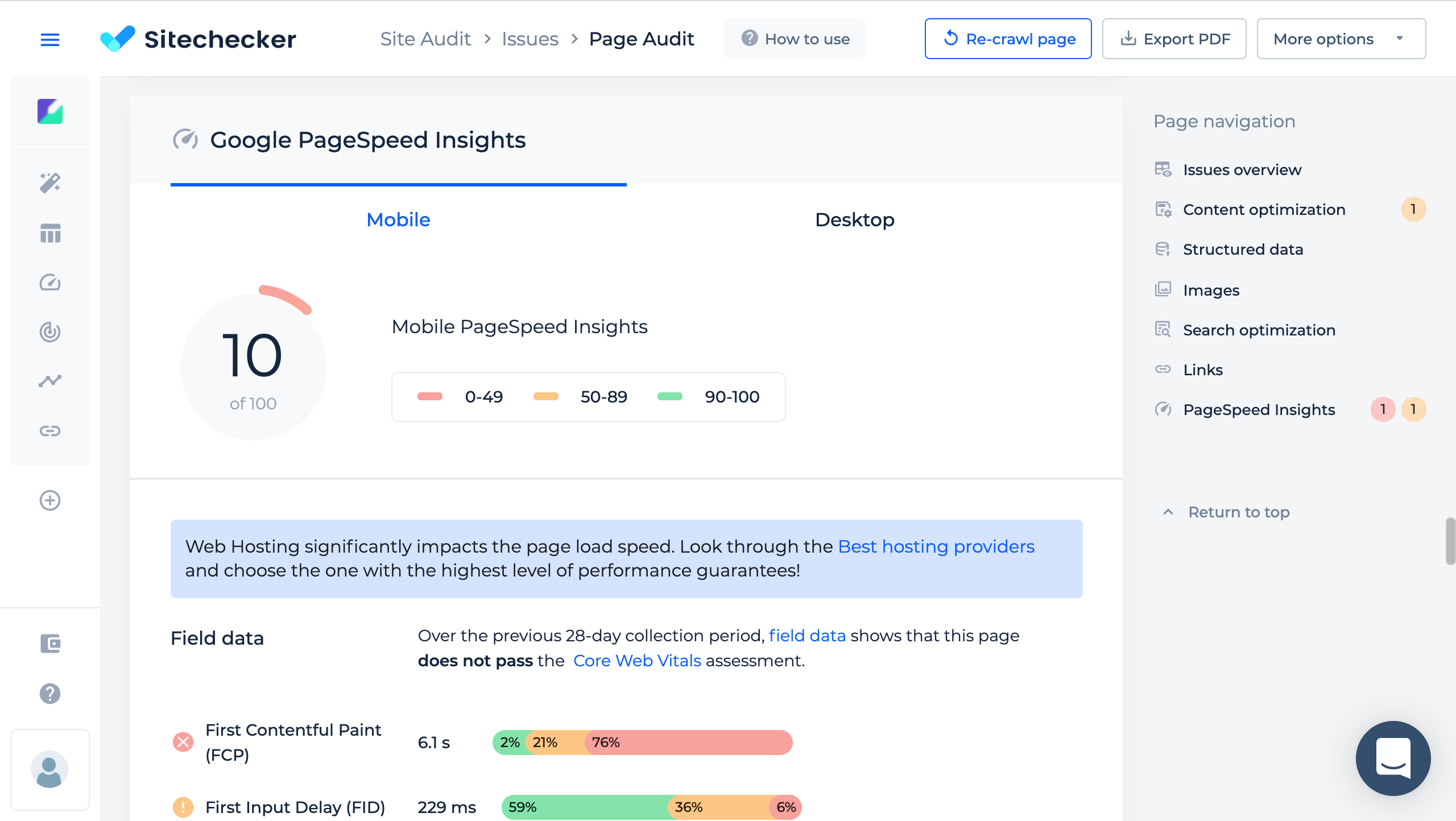

Google PageSpeed Insights

The PageSpeed Insights report is valuable because it contains accurate information about images, scripts, styles, and other files that negatively affect page load speed, and suggests what to do with these files to speed up page load.

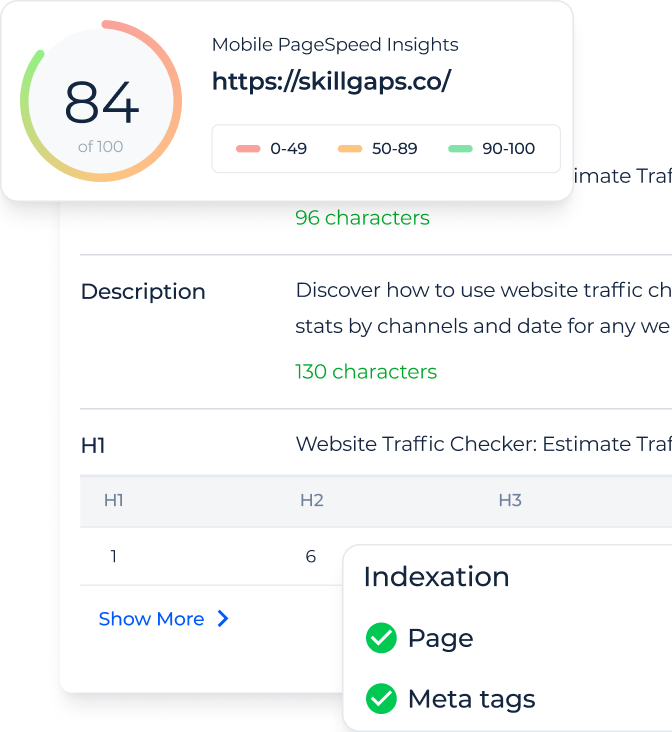

We use the Google PageSpeed Insights API in On-Page SEO Checker, so you can investigate speed issues along with other page issues in one place.
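If you prefer to query the PageSpeed Insights API yourself, a minimal Python sketch might look like this. The endpoint is the public v5 `runPagespeed` URL; the helper names are my own, and I assume the performance score sits under `lighthouseResult.categories.performance.score` as a number from 0 to 1. Requests without an API key are rate-limited, so this suits spot checks rather than whole-site crawls.

```python
import json
import urllib.parse
import urllib.request

API = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def psi_request_url(page_url: str, strategy: str = "mobile") -> str:
    """Build the API request URL for one page and one device strategy."""
    query = urllib.parse.urlencode({"url": page_url, "strategy": strategy})
    return API + "?" + query


def performance_score(report: dict) -> int:
    """Extract the Lighthouse performance score as a 0-100 integer."""
    score = report["lighthouseResult"]["categories"]["performance"]["score"]
    return round(score * 100)


def run_pagespeed(page_url: str, strategy: str = "mobile", timeout: float = 60.0) -> dict:
    """Call the API and return the parsed JSON report (makes a network request)."""
    with urllib.request.urlopen(psi_request_url(page_url, strategy), timeout=timeout) as response:
        return json.load(response)
```

Usage would be `performance_score(run_pagespeed("https://domain.com/"))`, repeated with `strategy="desktop"` if you want both numbers.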

13. Friendly URLs are configured on the site

Friendly URLs are page addresses that help users understand what content is on the page even before visiting it. This is especially valuable when the user has nothing but the address to go on: no title, no description, no picture.

In addition, friendly URLs help:

- Memorize valuable pages and easily return to them;

- Navigate websites faster;

- Share links with other people more conveniently.

Creating a page address based on keywords also helps the search bot to recognize the content of the page faster, but does not give an increase in rankings.

An example of a long and incomprehensible URL:

https://sitechecker.pro/folder1/89746282/48392239/file123/

An example of a friendly URL:

https://sitechecker.pro/site-audit-issues/twitter-card-incomplete/

Also remember that although page addresses in Cyrillic are understandable to the user, not all applications and sites recognize them.

In the search results and in the browser bar, the address of your page may look like this:

https://ru.wikipedia.org/wiki/Менеджмент

And when you copy a link and send it by mail or in a messenger like this:

https://ru.wikipedia.org/wiki/%D0%9C%D0%B5%D0%BD%D0%B5%D0%B4%D0%B6%D0%BC%D0%B5%D0%BD%D1%82

Therefore, I recommend still using Latin URLs for pages with Cyrillic content.
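The long address in the example above is just the percent-encoded UTF-8 form of the Cyrillic word, which you can reproduce with Python's `urllib.parse`. The sketch also shows one possible way to build a Latin slug; the transliteration table is my own simplified mapping for Russian, not an official standard.

```python
import re
from urllib.parse import quote, unquote

# Simplified Russian-to-Latin table (an assumption, not an official standard).
TRANSLIT = {
    "а": "a", "б": "b", "в": "v", "г": "g", "д": "d", "е": "e", "ё": "e",
    "ж": "zh", "з": "z", "и": "i", "й": "y", "к": "k", "л": "l", "м": "m",
    "н": "n", "о": "o", "п": "p", "р": "r", "с": "s", "т": "t", "у": "u",
    "ф": "f", "х": "kh", "ц": "ts", "ч": "ch", "ш": "sh", "щ": "shch",
    "ъ": "", "ы": "y", "ь": "", "э": "e", "ю": "yu", "я": "ya",
}


def latin_slug(title: str) -> str:
    """Transliterate a title and join the words with hyphens."""
    latin = "".join(TRANSLIT.get(char, char) for char in title.lower())
    return re.sub(r"[^a-z0-9]+", "-", latin).strip("-")


encoded = quote("Менеджмент")   # what messengers and mail clients often show
decoded = unquote(encoded)      # what the browser bar shows
slug = latin_slug("Менеджмент")  # a Latin alternative for the URL
```

Here `encoded` is the 60-character string from the Wikipedia example, while `slug` is simply `menedzhment`, which survives copying and pasting unchanged.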

14. Site has convenient navigation for users

Convenient navigation on the site is expressed in the following:

- the user can quickly find information that interests him about a service, product, company;

- the user understands by navigation what opportunities await him on the site.

Depending on the niche, the convenience of navigation can be increased by a variety of elements: a search bar, a drop-down menu with product categories, breadcrumbs, placement of the most important pages in the header, convenient filtering in online stores, and the like.

An audit of the site’s user-friendliness is a separate large area. It is usually used to search for conversion improvement points, but it is also important as part of an SEO audit of a site. If the navigation on the site is poor, then after switching to the site from the search, users will often return back to the search results to find sites with more convenient navigation. And a return to search results is a signal for the algorithm that the site does not solve the user’s problem and its position needs to be lowered.

To assess the ease of navigation on the site, put yourself in the user’s shoes and try to achieve the desired result on your site and on the sites of competitors. Write down what hindered and helped you in this process on each site. This will give you the first list of improvements. You can also order such an audit from professionals on freelance platforms.

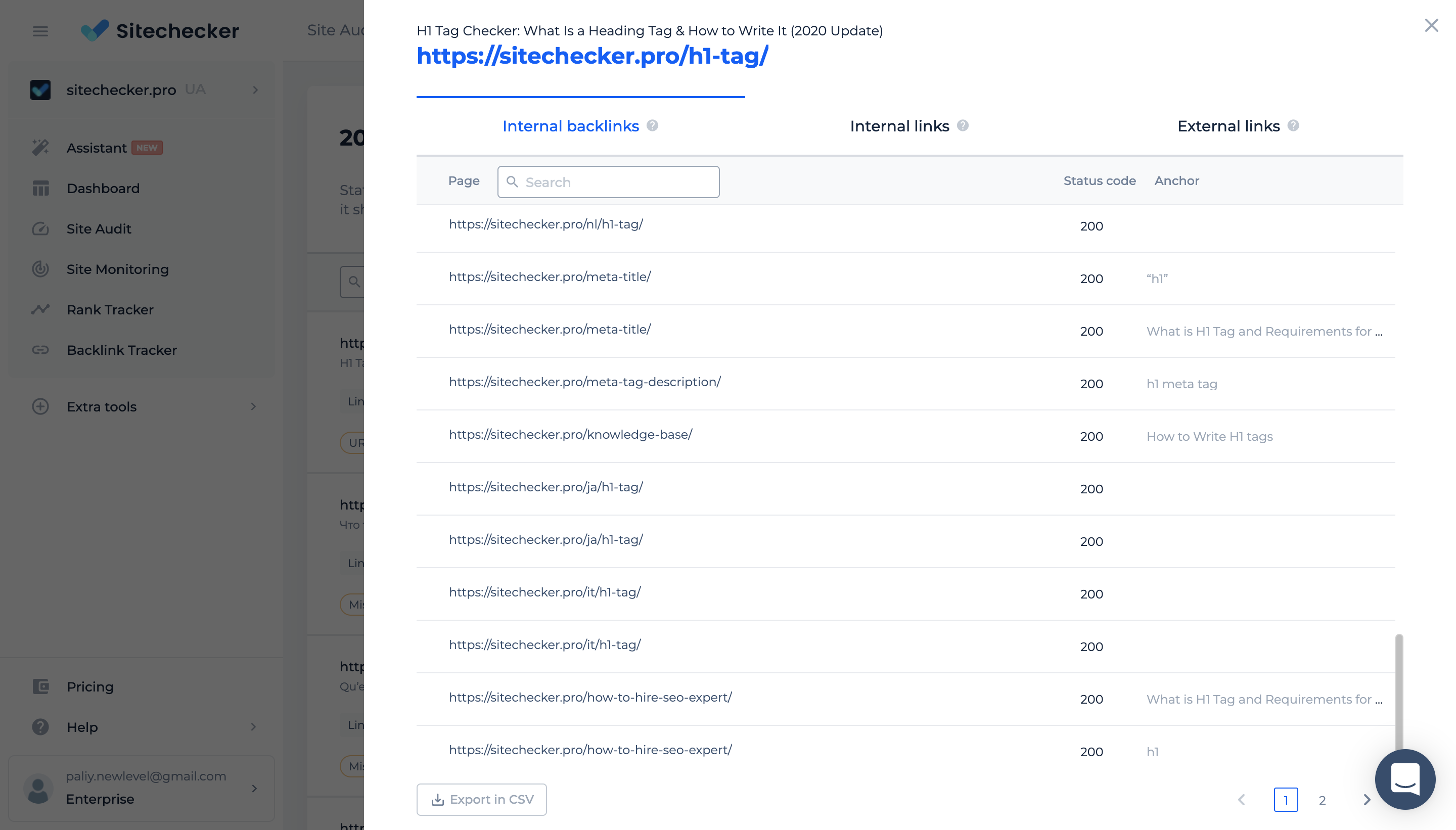

15. The site has a well-organized link structure for the Googlebot

A good link structure includes several rules.

The presence of internal links to all pages that we want to index.

If you have pages that do not have any internal links from other pages, then the Googlebot may skip them and not crawl. Internal links are not the only source of information about all existing pages for the bot (there is also a sitemap.xml file and backlinks from external sites), but it is the most reliable way to tell the bot about the existence of a new page and the need to crawl it regularly.

Pages without incoming internal links are also called orphan pages. Our Site Audit crawler will help you find them. If there are some pages in the sitemap, but there are no internal links to them, then we will place them in the Orphan URLs category. Either delete such pages or, if you want to keep them in the index, add internal links to them.
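The idea behind orphan detection is a simple set difference, sketched below in Python. This is my own illustration of the principle, not the crawler's actual code: the function name is hypothetical, and it assumes you already have the sitemap URLs and a map of which page links to which.

```python
from typing import Dict, Set


def find_orphans(
    sitemap_urls: Set[str], internal_links: Dict[str, Set[str]]
) -> Set[str]:
    """Return sitemap URLs that no other page links to.

    internal_links maps a source page to the set of pages it links to.
    """
    linked = set()
    for source, targets in internal_links.items():
        linked |= targets - {source}  # a page linking to itself does not count
    return sitemap_urls - linked
```

The quality of the result depends entirely on how complete the link map is, so build it from a full crawl rather than from a handful of pages.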

Placing the most valuable pages closer to the home page and more internal links to the valuable pages than other pages.

This is important because the Googlebot measures the link weight of a page based on these factors. If the low-value pages have the highest link power, then the high-value pages will rank lower in the SERP than they would if you directed the bulk of the link weight to them.

In Site Audit, we have the ability to view the link weight of all pages (Page Weight). To calculate it, we use the standard PageRank formula from Google, but without taking into account anchors and external links.

Use this data to check if the correct pages on your site have the highest weight.
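To get a feel for how link weight spreads through internal links, here is a textbook PageRank power iteration in Python. It is a sketch for intuition only and not the Page Weight implementation from Site Audit: the damping factor of 0.85, the 50 iterations, and the handling of pages without outgoing links are all my assumptions.

```python
from typing import Dict, List


def page_weight(
    links: Dict[str, List[str]], damping: float = 0.85, iterations: int = 50
) -> Dict[str, float]:
    """Estimate link weight from internal links only (anchors are ignored).

    links maps each page to the list of pages it links to.
    """
    pages = set(links) | {target for targets in links.values() for target in targets}
    count = len(pages)
    rank = {page: 1.0 / count for page in pages}
    for _ in range(iterations):
        new_rank = {page: (1.0 - damping) / count for page in pages}
        for page in pages:
            # A page with no outgoing links spreads its weight evenly (assumption).
            targets = links.get(page) or list(pages)
            share = damping * rank[page] / len(targets)
            for target in targets:
                new_rank[target] += share
        rank = new_rank
    return rank
```

With `{"/": ["/a/", "/b/"], "/a/": ["/"], "/b/": ["/", "/a/"]}` the home page ends up with the highest weight, because both inner pages link to it, and `/a/` outranks `/b/` because it receives one more link.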

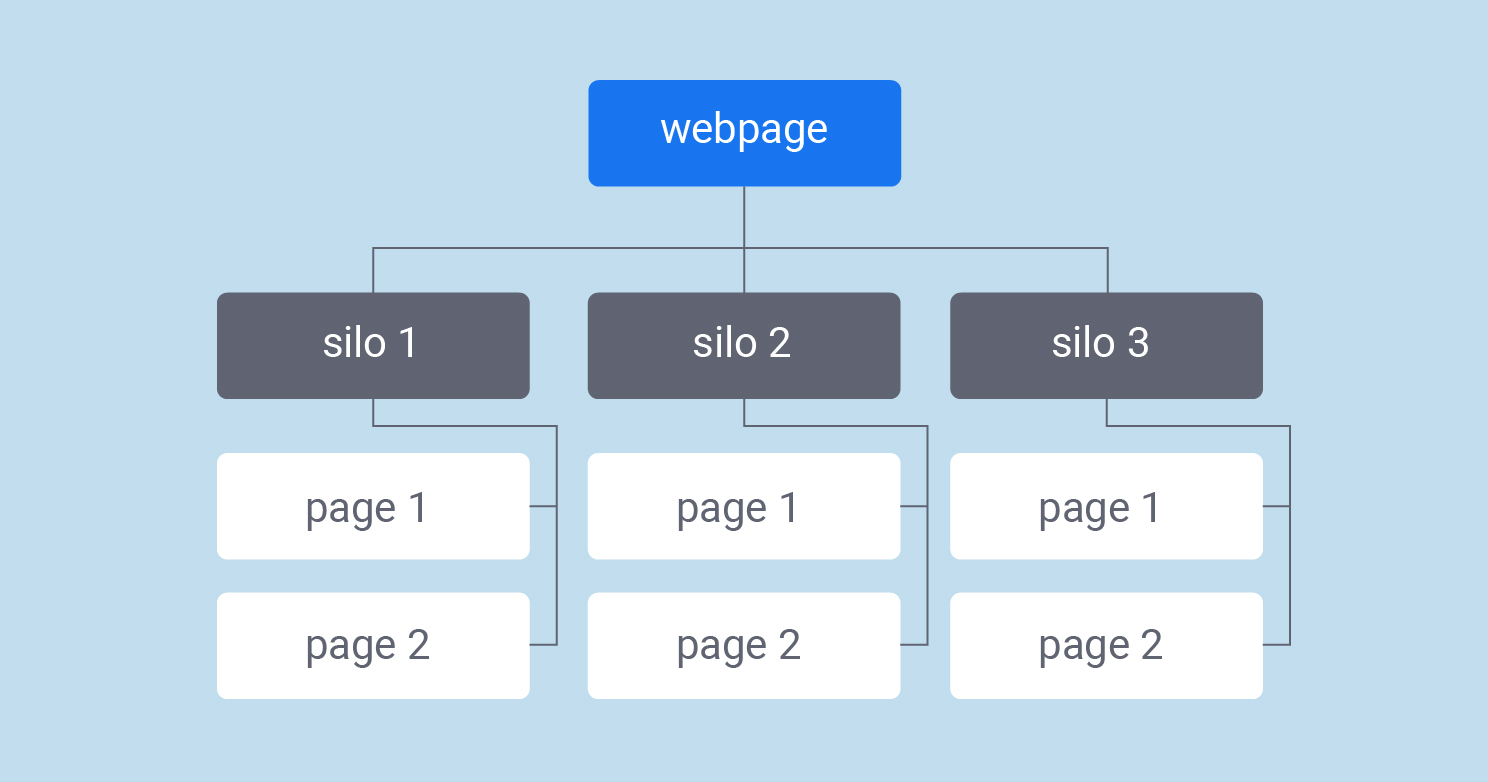

The third, optional rule is a special linking structure called Silo. With this kind of interlinking, we create clusters of pages that are isolated from each other, and pages from one cluster never link to pages from another cluster.

This method is relevant for sites that contain content on different topics, and not just one specialized one. Bruce Clay considers this to be one of the most overlooked techniques in website optimization. You can use his checklist to implement such a structure on your site.

16. Both users and the Googlebot understand the content of the page

Understanding the page by the Googlebot is expressed in the fact that it receives enough information about what problems the user can solve visiting the page and ranks it for those keywords for which we have optimized the page.

The user evaluates the content of the page in three stages:

- by the snippet in the search results, social network, messenger;

- according to the information on the first screen when visiting the page;

- reading all the content on the page.

Let’s take a look at the key elements that help communicate the content and purpose of a page to search bots and people.

Title and description tags

Title tag and description tag are some of the ranking factors. The Google bot places more weight on words in these tags than words in content when it evaluates a page’s relevance.

Also, Google most often forms a snippet of a page in search results from these tags. Therefore, a good title and description is a short promise to the user that should grab their attention and show what they will get if they click on the page.

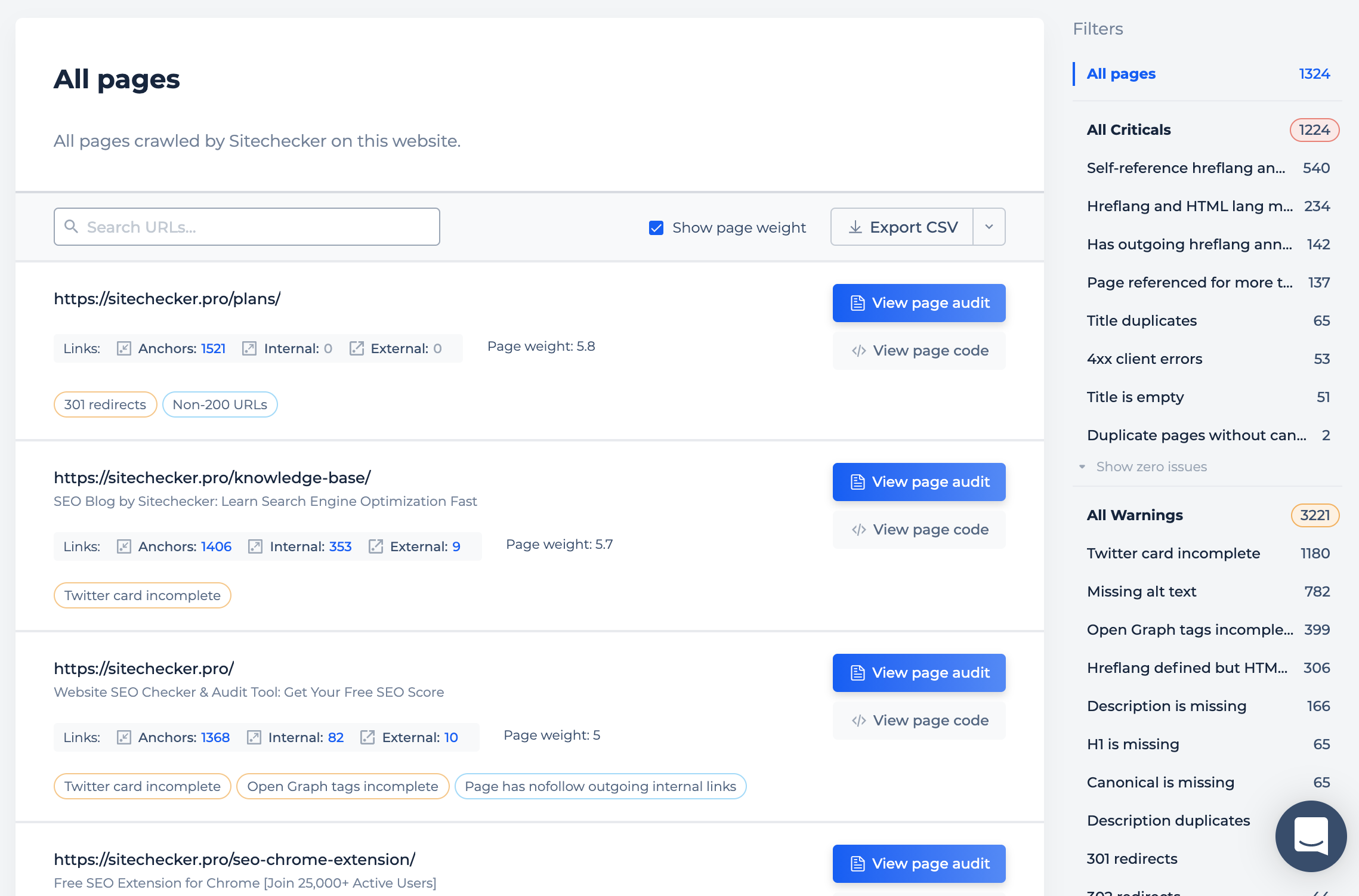

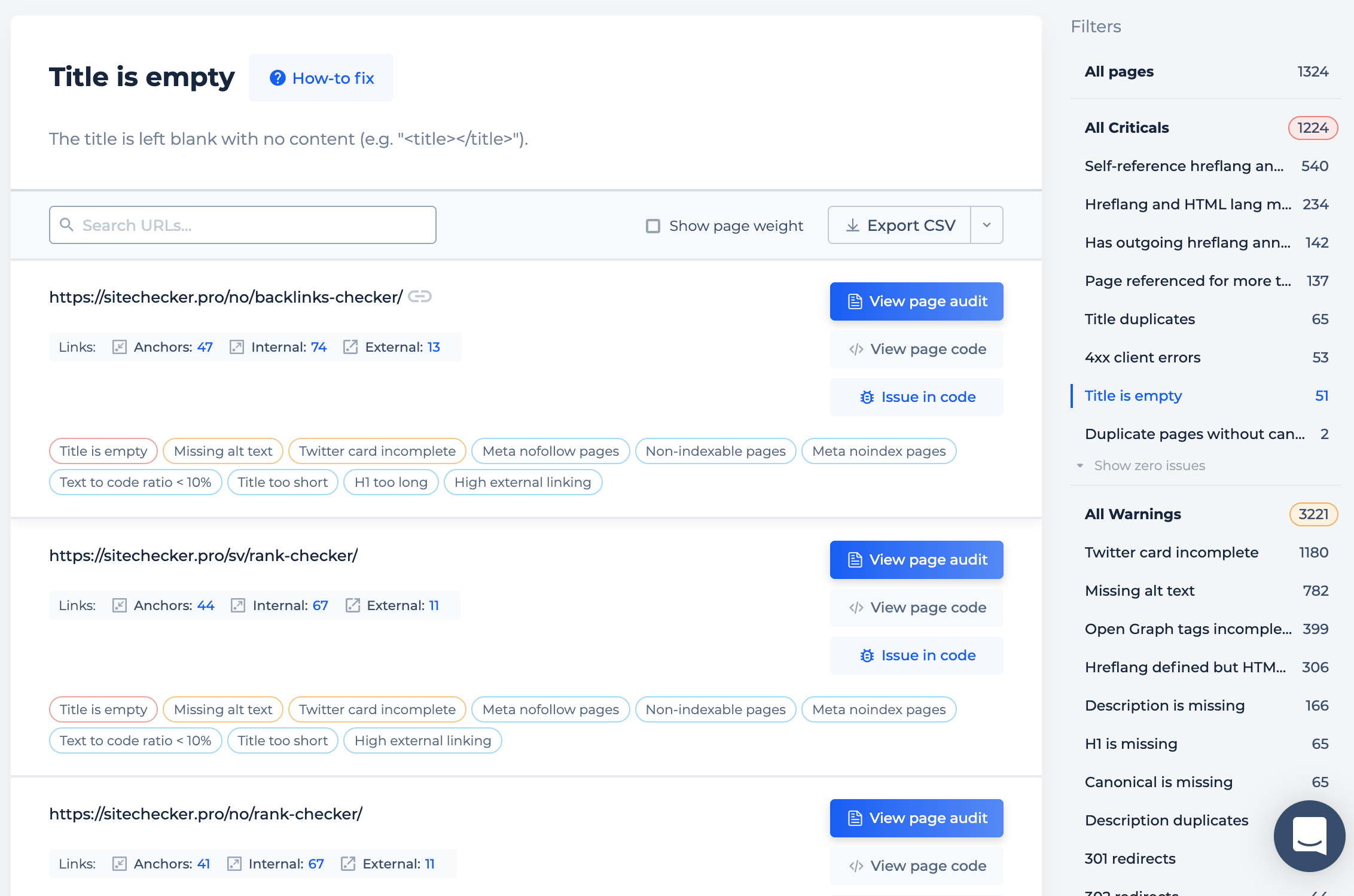

Our Site Audit will help you find pages where these tags are missing, empty, duplicated, too long, or too short.
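The same kind of check can be sketched for a single page in Python. The length ranges below (30 to 60 characters for the title, 70 to 160 for the description) are rough rules of thumb that I am assuming for illustration; they are not limits published by Google, and the names are hypothetical.

```python
from html.parser import HTMLParser
from typing import List, Tuple


class HeadTags(HTMLParser):
    """Collect the <title> text and the meta description of a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = None
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attributes.get("name") or "").lower() == "description":
            self.description = attributes.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def tag_issues(
    html: str,
    title_range: Tuple[int, int] = (30, 60),         # assumed rule of thumb
    description_range: Tuple[int, int] = (70, 160),  # assumed rule of thumb
) -> List[str]:
    """List problems with the title and description of one page."""
    parser = HeadTags()
    parser.feed(html)
    checks = (
        ("title", parser.title.strip(), title_range),
        ("description", parser.description, description_range),
    )
    issues = []
    for name, value, (low, high) in checks:
        if not value:
            issues.append(f"{name} missing or empty")
        elif len(value) < low:
            issues.append(f"{name} too short")
        elif len(value) > high:
            issues.append(f"{name} too long")
    return issues
```

Finding duplicates requires comparing the collected values across all pages, which this single-page sketch leaves out.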

Headings

Headings can be visually highlighted without being marked up as headings for Google, and vice versa. Visual highlighting helps the user scan the text faster and see how it is divided into sections. The markup also helps Google better understand the content of the page. Keywords in tagged headings are given more weight in assessing page relevance than keywords in regular text.

The H1 heading is the most important one, close in importance to the title tag. Next come the H2-H6 headings, each less important than the one before.

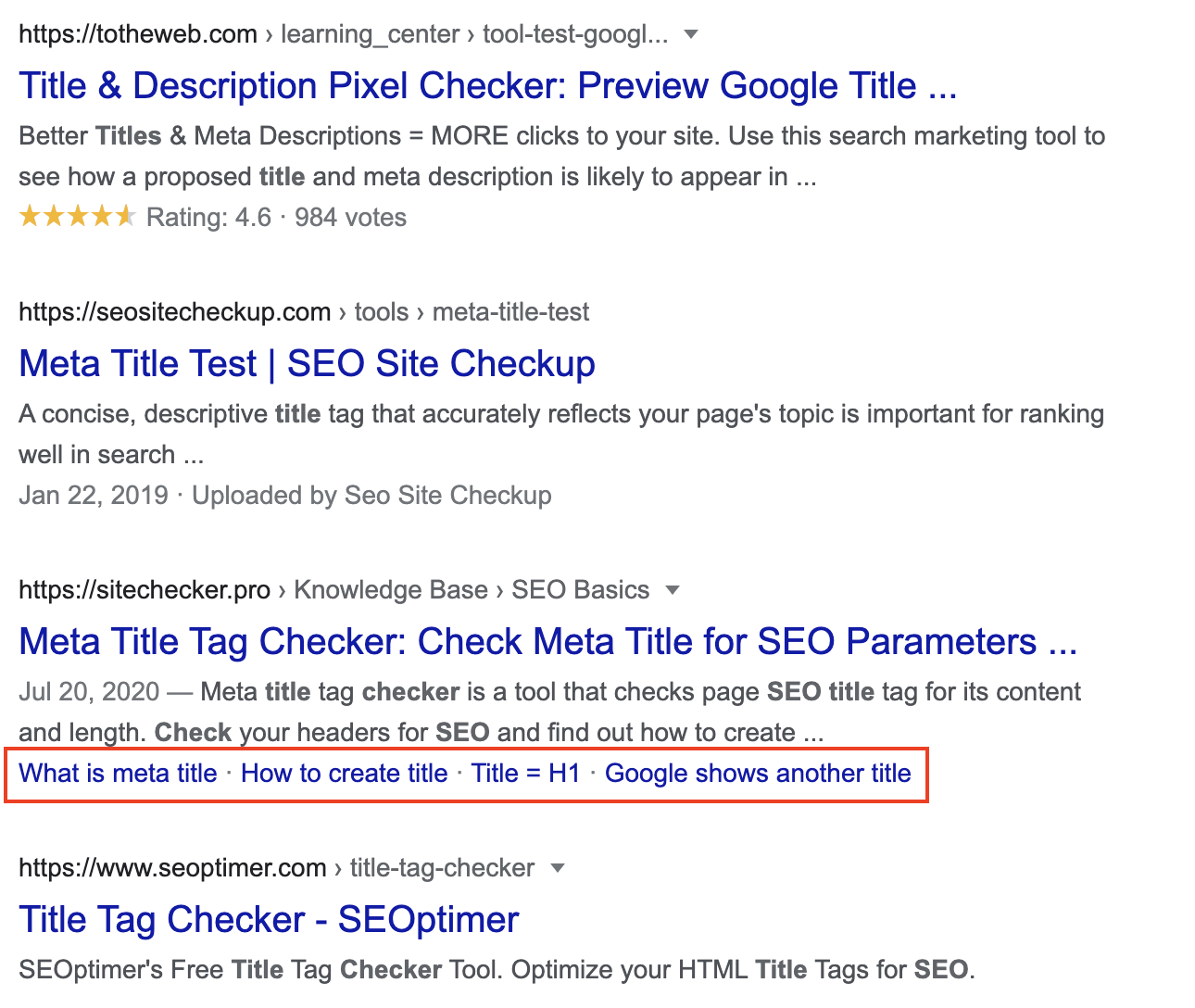

It is good practice to publish the list of subheadings on the page at the very beginning, like in a book. This allows the user to assess whether the information he needs is on the page and go directly to it in one click. As an added bonus, Google sometimes displays such quick links in the page snippet, which visually enlarges your snippet and gives more information to the user.

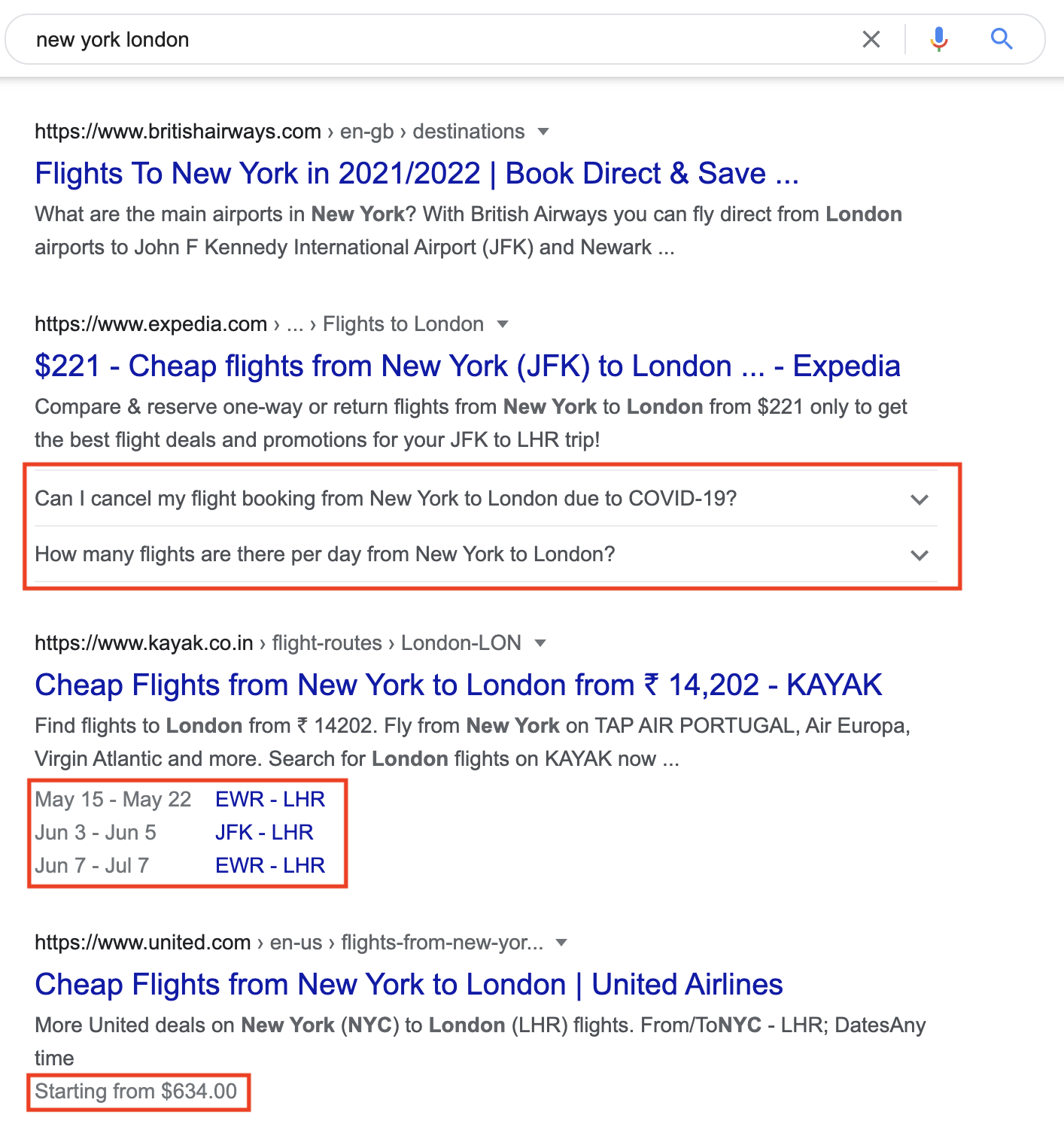

Structured markup

Structured data helps the Googlebot to better understand your page content and form additional snippet elements from the markup. For example, the screenshot below shows an example of using the FAQ markup, events, and prices.

Use this guide from Google to understand how structured data works and choose the markup elements that are valuable for your site. And bookmark the tool Google Rich Results Test to check the markup for errors after implementation.

Social media tags

These tags include the Open Graph Protocol and Twitter Cards. They help to make the snippet of a page on social networks and instant messengers more attractive, which directly affects its click-through rate. The share of social networks in the time spent by users on the Internet is growing. Therefore, it makes sense to add these tags to all valuable pages.

You can compare below the attractiveness of the snippet, where the picture was pulled up, and where it is not.

Anchor text

Anchor text is one of the key ranking factors, as it helps Google better understand the content of the page. Use phrases in the anchor text that concisely convey the content of the linked page. This is valuable not only for the bot but also for users since they understand in advance what information is behind the page and whether it will be of interest to them.

Our Site Audit will help you find links without anchor text or with monotonous, irrelevant text.

You can also check most of the listed parameters on a page in one click using the Sitechecker SEO Chrome Extension.

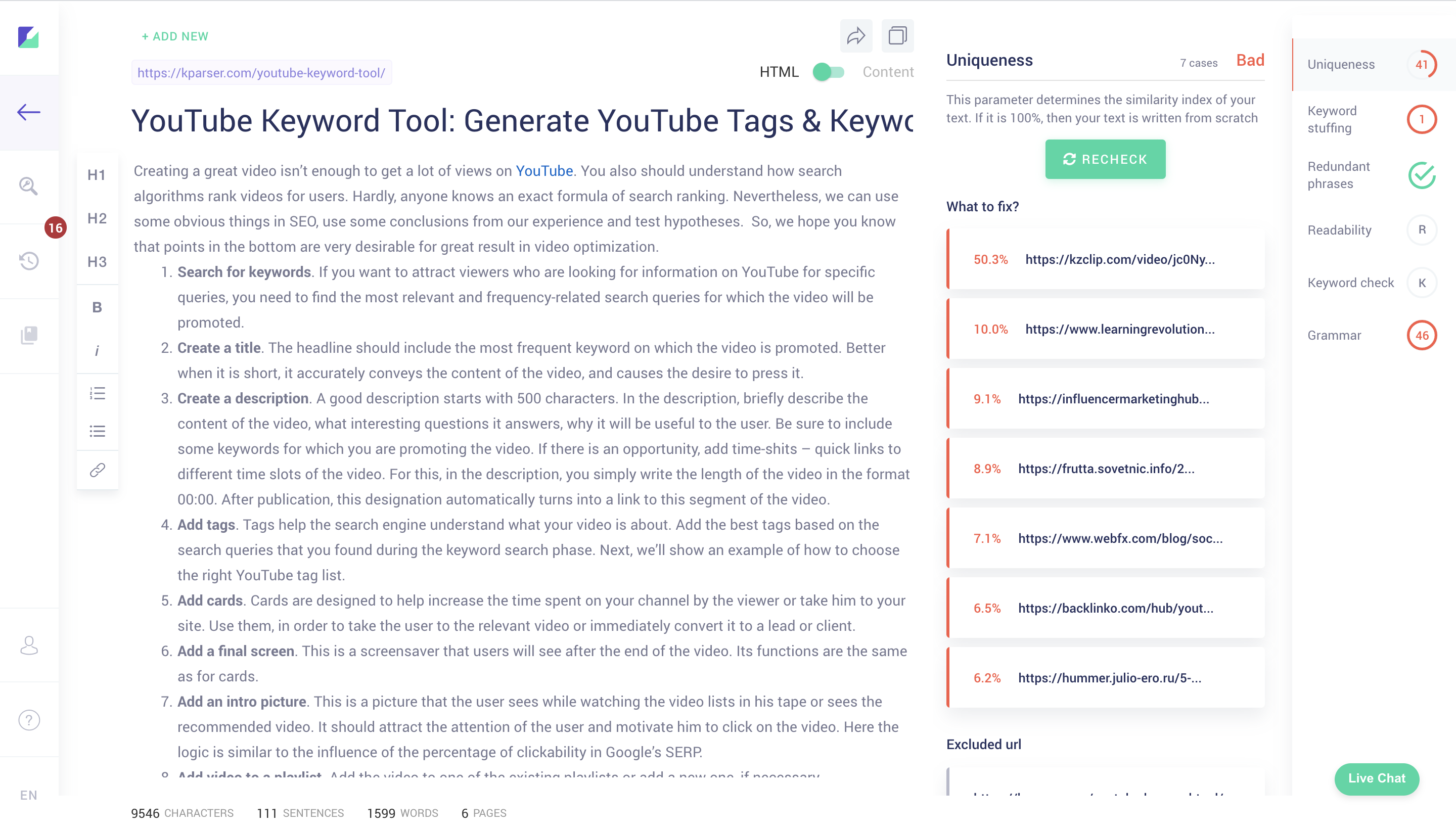

17. Checking text for uniqueness, readability, grammar, keyword stuffing

Good text is the text that brings traffic from organic search and converts users well into valuable business action. These are the parameters you need to check to make sure you have good text.

Uniqueness

It is important that you have unique pages not only within your site but also in comparison to other sites on the Internet. Someone can take your text for themselves and Google will not consider your site as the original source. This often happens, since Google does not have a well-functioning mechanism for determining the primary source.

Readability

Readability shows how easy the text is for users to understand. The main value of good readability is better conversion to the target action.

Grammar

John Mueller in one of his videos clarified that Google does not check the text for grammar and does not decrease site rankings for grammatical errors. But grammar is important in the eyes of the user. Therefore, for the key pages that bring you traffic and conversions, it makes sense to check it.

Keywords usage

Problems with keywords usage can be of two kinds:

- keyword stuffing when you use too many keywords;

- insufficient number and variety of keywords that relate to the topic of the page.

All these parameters can be checked in our other tool – Copywritely. You can insert both text and just the URL of the published page and get a list of all content errors in one place.
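As a rough illustration of what a keyword check measures, here is a small Python sketch that computes keyword density. The function name and the definition of density (words belonging to keyword occurrences divided by all words) are my assumptions; this article does not give a threshold for stuffing, so any cutoff you apply is a judgment call.

```python
import re


def keyword_density(text: str, keyword: str) -> float:
    """Share of words in text that belong to occurrences of keyword (0.0 to 1.0)."""
    words = re.findall(r"\w+", text.lower())
    key = keyword.lower().split()
    if not words or not key:
        return 0.0
    # Count every position where the keyword phrase starts.
    hits = sum(
        words[i:i + len(key)] == key
        for i in range(len(words) - len(key) + 1)
    )
    return hits * len(key) / len(words)
```

Compare the number for your page with the same number for the pages that already rank at the top for that keyword, rather than against a fixed target.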

18. Search for unpromising and cannibalized pages

Unpromising pages

These are usually pages with little content or pages that Google, for some reason, does not rank for the keywords we need, even if there is enough content on the page.

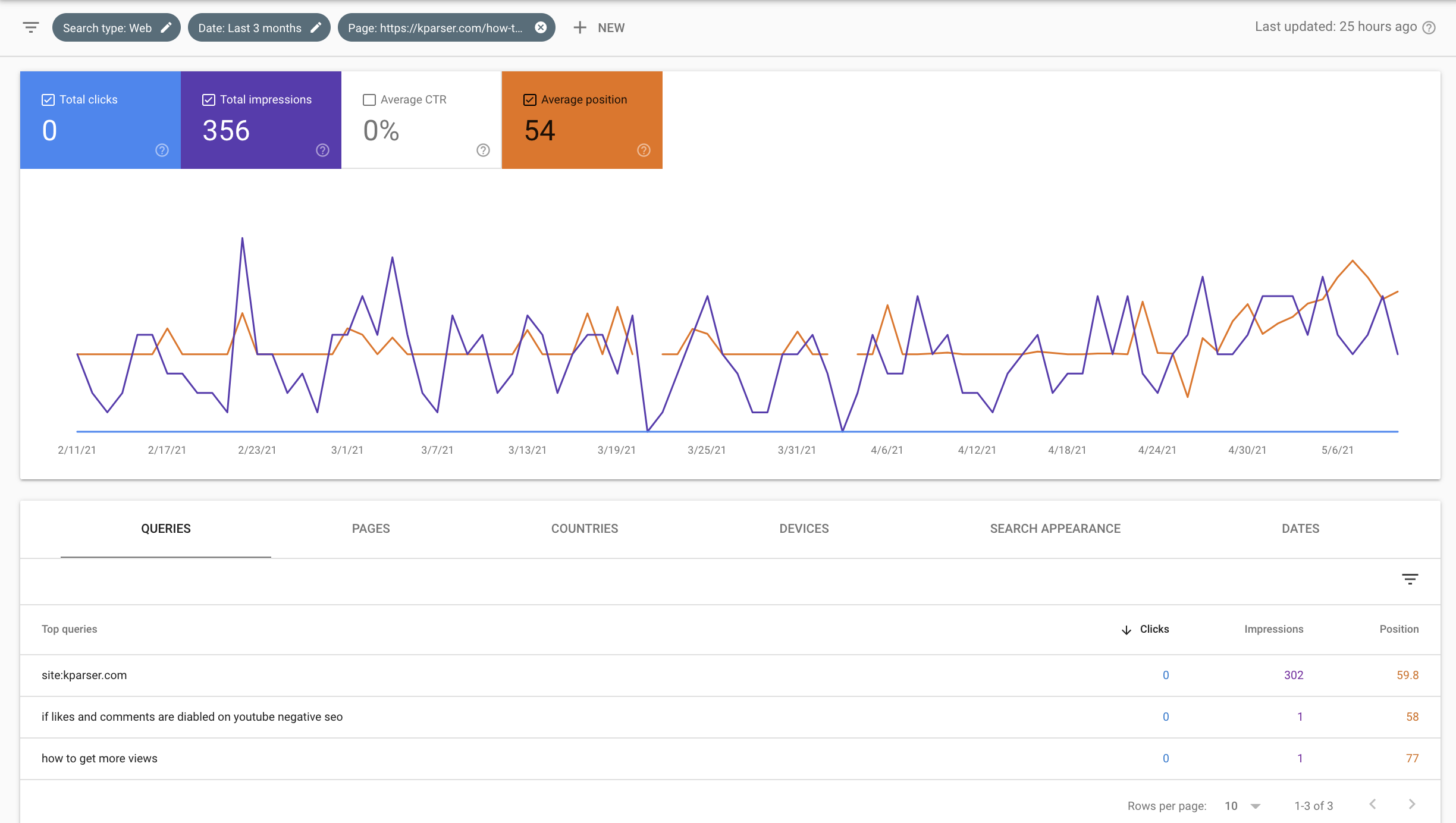

Here is a screenshot of a typical hopeless page – https://kparser.com/how-to-get-more-views-on-youtube/. The content on it is poorly designed and has not been updated for a long time. It makes sense to either redirect it to a page with a similar theme or completely rewrite the content.

To find such pages in your Google Search Console, go to the search results report and sort the pages by the number of impressions. Among the pages with the lowest number of impressions, there will be weak pages from the point of view of Google. Pay attention to how long a page exists, how many impressions it has collected, and how many keywords it ranks for. If it has been in the index for more than 3 months, but ranks only for 1-2 keywords and has few impressions, then most likely the page is problematic.
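The filter described above is easy to apply to an exported report. The Python sketch below assumes each row is a dictionary with hypothetical keys `page`, `days_in_index`, `keywords`, and `impressions` that you have assembled yourself. The 90-day and 2-keyword limits come from the rule above, while the impressions cutoff of 100 is an arbitrary placeholder, since "few impressions" depends on your niche.

```python
from typing import Dict, List


def weak_pages(
    rows: List[Dict],
    min_age_days: int = 90,      # "more than 3 months" in the index
    max_keywords: int = 2,       # ranks only for 1-2 keywords
    max_impressions: int = 100,  # placeholder cutoff, adjust for your site
) -> List[Dict]:
    """Return likely problematic pages, lowest impressions first."""
    flagged = [
        row for row in rows
        if row["days_in_index"] > min_age_days
        and row["keywords"] <= max_keywords
        and row["impressions"] <= max_impressions
    ]
    return sorted(flagged, key=lambda row: row["impressions"])
```

Young pages are deliberately excluded, because a page that has been in the index for a month has not had time to show what it can do.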

It makes sense to work on such pages given the statements of a Google employee about the impact of weak pages on the overall ranking of the site.

I ran this experiment on one of the sites. I removed, rewrote, or merged all the weak pages, and this really did produce an increase in rankings for the strong pages.

Cannibalized pages

These are pages that rank for the same keywords. If you have two such pages and both are at the top of the search results, then great. But if there are more of these pages and none of them ranks first, it is possible that Google cannot determine which one is more relevant.

An additional danger of such pages is the division of the link weight into two pages. Someone will put links to one page, someone to another, and thus the authority of each page will be lower than one single page could have.

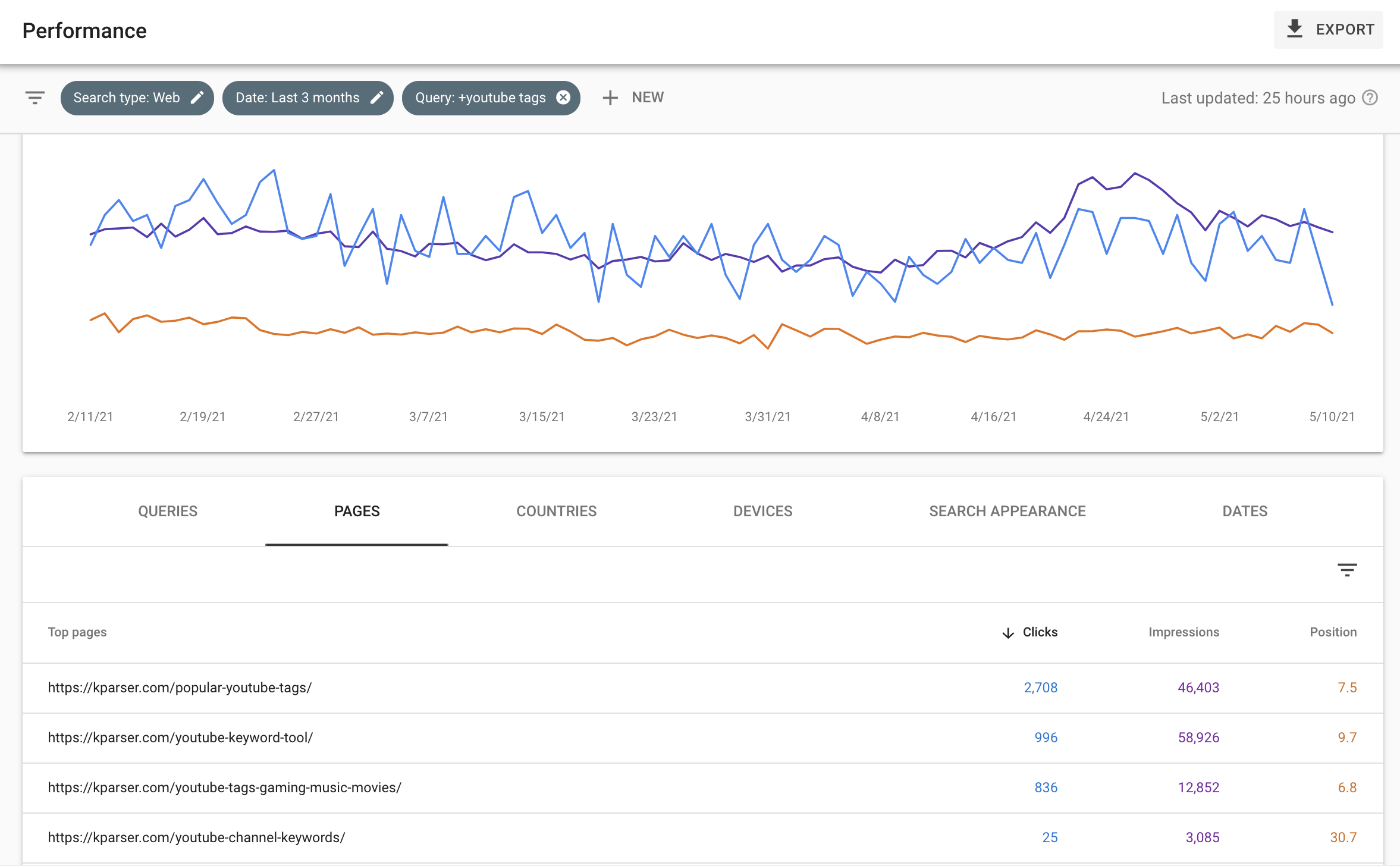

To find such pages, type a keyword into the query filter inside Google Search Console and see which pages are ranking for it. If there are more than two pages and all of them rank below positions 10-20, it makes sense to research the content of these pages and their internal links and backlinks. It is likely that they are competing with each other.

If you saw that there is a problem, then weaker pages should either be redirected to a stronger one, or their content should be rewritten so that they rank for other queries.
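Checking keywords one by one gets tedious, so here is a Python sketch that applies the same rule to a whole export at once. It assumes rows of `(query, page, average position)` that you have pulled from Google Search Console yourself; the function name is hypothetical, and I use position 10 as the cutoff, which is the strict end of the 10-20 range mentioned above.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def cannibalized_queries(
    rows: Iterable[Tuple[str, str, float]],
    max_pages: int = 2,
    position_cutoff: float = 10.0,  # assumption: the strict end of "10-20"
) -> Dict[str, List[str]]:
    """Map each suspicious query to its competing pages, best position first.

    A query is flagged when more than max_pages pages rank for it and
    none of them is at position_cutoff or better.
    """
    by_query = defaultdict(dict)
    for query, page, position in rows:
        best = by_query[query].get(page)
        by_query[query][page] = position if best is None else min(best, position)
    return {
        query: sorted(pages, key=pages.get)
        for query, pages in by_query.items()
        if len(pages) > max_pages and min(pages.values()) > position_cutoff
    }
```

The first page in each list is the strongest candidate to keep; the others are the ones to redirect or rewrite.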

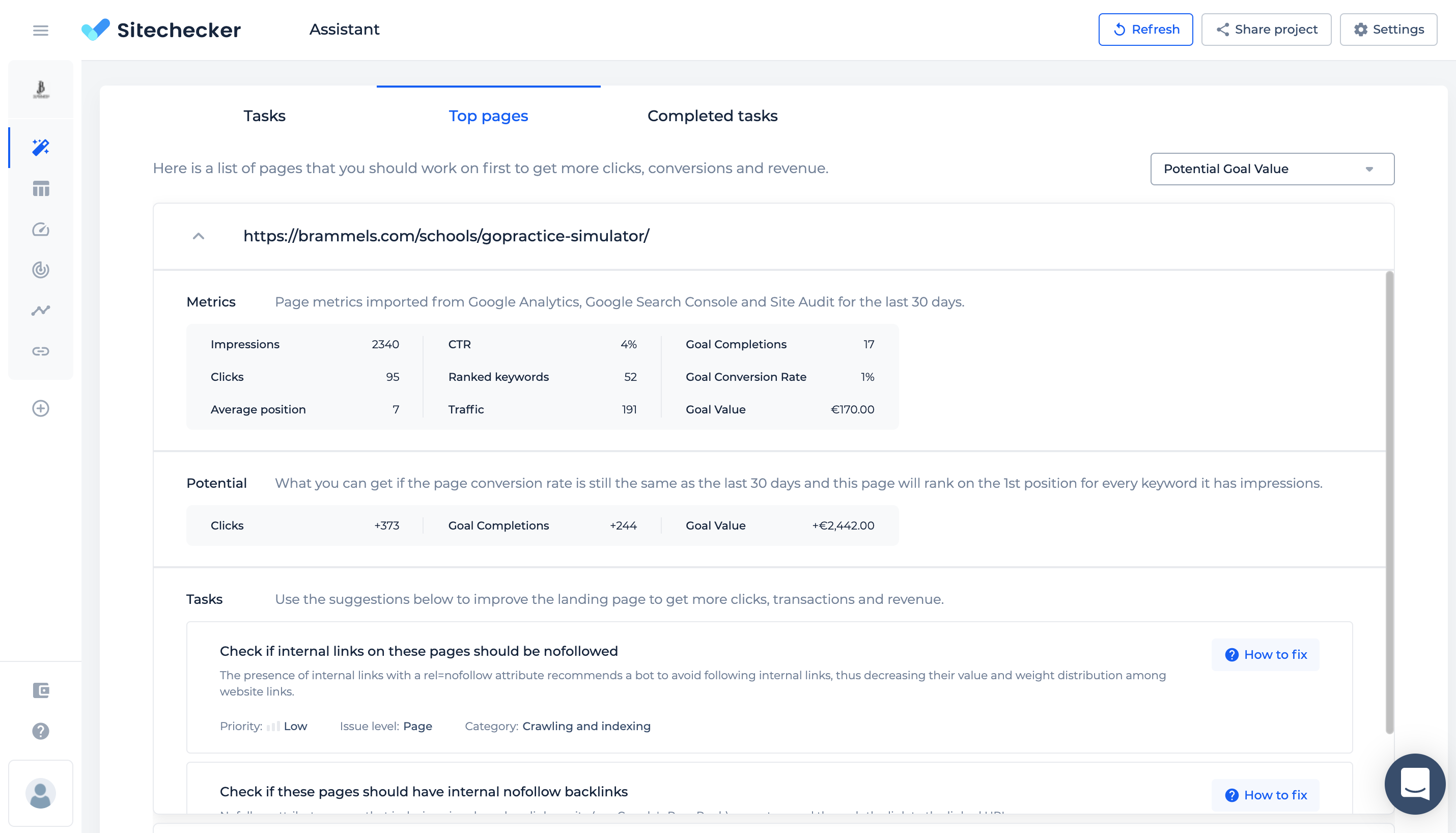

19. Search for the most valuable pages

The most valuable pages can be identified in different ways depending on the type of site:

- by income, if Ecommerce Revenue or Goal Value tracking is configured in Google Analytics;

- by conversions in the form of Transactions or Goal Completions;

- by the amount of traffic (relevant for media, blogs, or sites where conversion tracking is not configured)

- by the number of impressions and proximity to the top of the search results, if the pages have no clicks yet, but only impressions.

In any case, you need to export the data accumulated over the past period from Google Analytics and Google Search Console and build a forecast.

It can be done:

- manually in a spreadsheet, which takes a long time;

- using my templates in Google Data Studio (this will require certain skills from you);

- using the Top pages report in Sitechecker Assistant (the easiest way).

What’s next

This is not an exhaustive list of SEO audit steps, but these points will be valuable for any website.

In addition to universal solutions, there are also points that depend on your CMS, type of business, site size, and so on. To identify the specific CMS a website uses, you can use a CMS Detector to tailor your SEO strategy accordingly.

For WordPress sites, you need to pay special attention to the security and speed of plugins. For local businesses, you need to focus on optimizing Google My Business and Google Maps. For large sites, you should pay attention to Google’s crawl budget and look for ways to optimize it.

For multilingual sites, you need to check the correct setting of the hreflang tags.

Website security is a crucial component, regardless of site type. Incorporating SSL Certificate Monitoring ensures that your SSL certificates remain valid and secure, protecting user data and maintaining strong SEO performance by avoiding security-related penalties.

Identify which of these characteristics apply to your site and look for specialized recommendations for optimizing that type of site.