What is a Website Speed Test?

The Website Speed Test from Sitechecker is a tool that evaluates the performance of web pages by analyzing various metrics such as FCP, FID, LCP, CLS, and more. These metrics assess how quickly content is visually displayed and how responsive a page is to your interactions.

Powered by the Google PageSpeed Insights API, this tool rates website speed on a 0 to 100 scale, with higher scores indicating superior performance. It facilitates testing for both desktop and mobile versions of a site, providing insights to pinpoint and resolve factors contributing to site latency.
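For readers who want to see where the 0 to 100 score comes from, here is a minimal sketch of querying the public PageSpeed Insights v5 endpoint directly. It is not Sitechecker's own code. The helper names (`build_psi_url`, `performance_score`, `fetch_psi`) are made up for this illustration, and the sketch assumes the response carries the Lighthouse performance score as a 0 to 1 fraction under `lighthouseResult.categories.performance.score`.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Public PageSpeed Insights v5 endpoint.
PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def build_psi_url(page_url: str, strategy: str = "mobile") -> str:
    """Build a request URL for the desktop or mobile version of a page."""
    if strategy not in ("mobile", "desktop"):
        raise ValueError("strategy must be 'mobile' or 'desktop'")
    query = urlencode({"url": page_url, "strategy": strategy})
    return f"{PSI_ENDPOINT}?{query}"


def performance_score(psi_response: dict) -> int:
    """Convert the 0-1 Lighthouse performance score to the 0-100 scale."""
    raw = psi_response["lighthouseResult"]["categories"]["performance"]["score"]
    return round(raw * 100)


def fetch_psi(page_url: str, strategy: str = "mobile") -> dict:
    """Hypothetical helper: perform the HTTP request (needs network access)."""
    with urlopen(build_psi_url(page_url, strategy)) as response:
        return json.load(response)
```

Calling `performance_score(fetch_psi("https://example.com/", "desktop"))` would return the desktop score for that page. For regular use, the API may also require a `key` query parameter.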

How can the tool help you?

Performance Grading: Utilizes Google PageSpeed Insights API to score a website’s speed on a scale from 0 to 100.

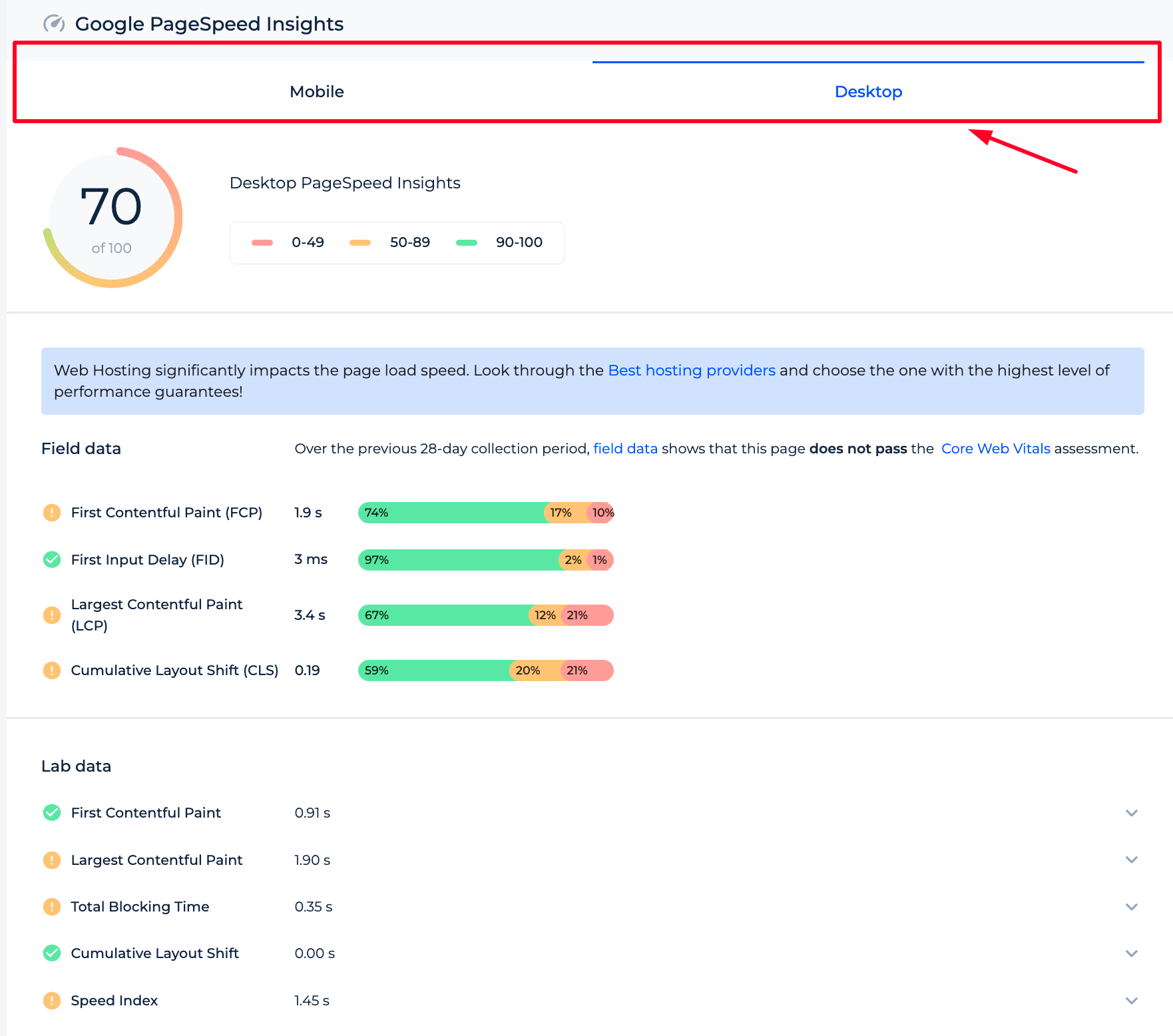

Desktop and Mobile Testing: Offers the ability to analyze performance for desktop and mobile site versions.

Core Web Vitals Assessment: Evaluates critical user experience metrics such as FCP, FID, LCP, and CLS.

Recommendations for Resolving Page Loading Speed Issues: Provides detailed reports and suggestions for improving page load times and overall website performance.

Real-time Results: Delivers quick scanning and immediate feedback on website performance metrics.

Key Features of the tool

Unified Dashboard: A centralized platform where you can view comprehensive analytics and reports on your website’s SEO performance.

User-Friendly Interface: An intuitive design that simplifies navigation and makes it easy for users of all skill levels to access and utilize SEO tools.

Complete SEO Toolset: A robust collection of tools for keyword research, site auditing, rank tracking, backlink analysis, and more, providing a full suite of resources for optimizing a website’s search engine presence.

How to Use the Tool?

Get detailed information about loading speed and page size, or find pages with speed issues across the entire site. A speed test is a convenient way to record baseline results and compare them with the metrics you get after fixing issues on your site.

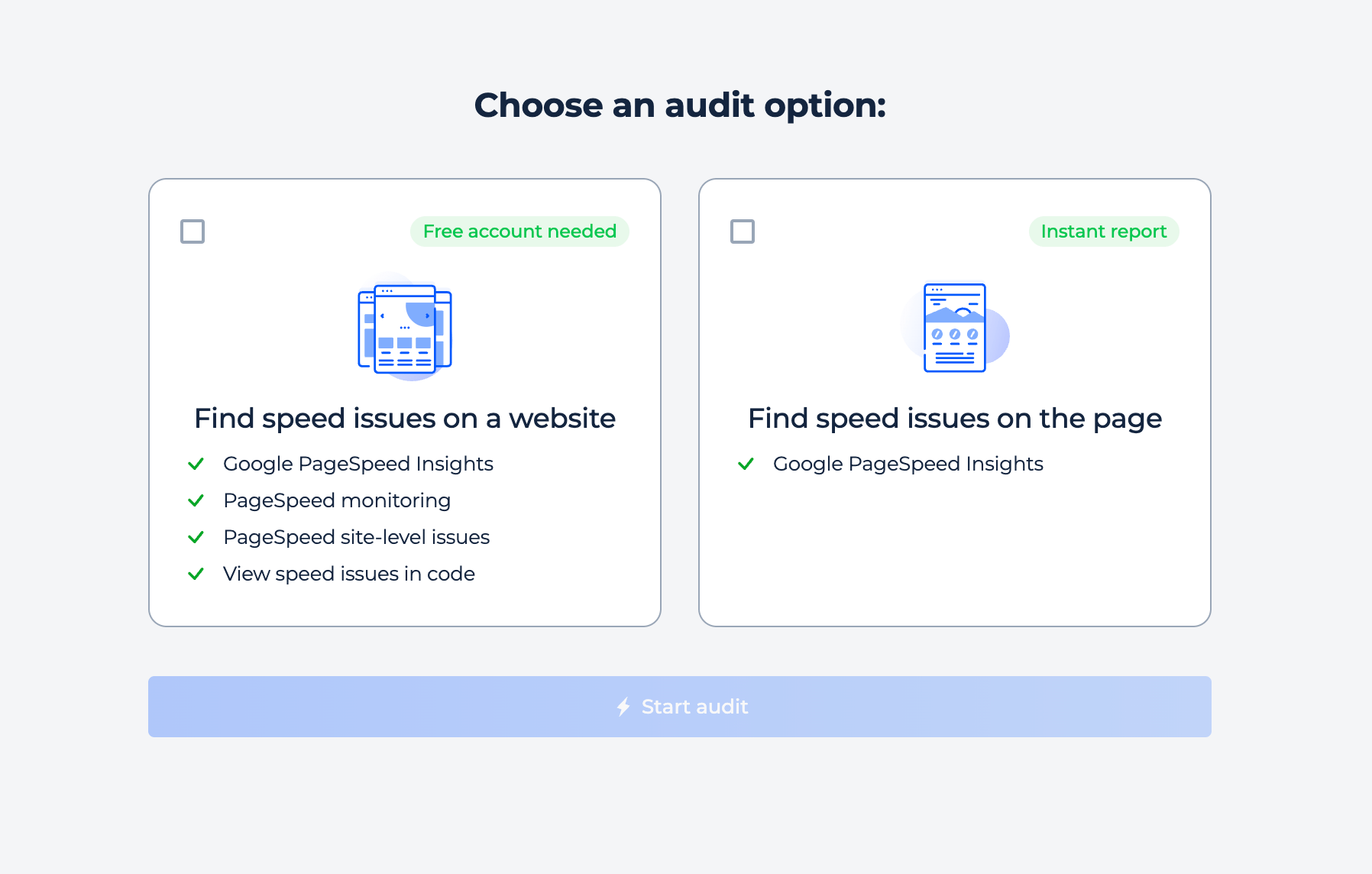

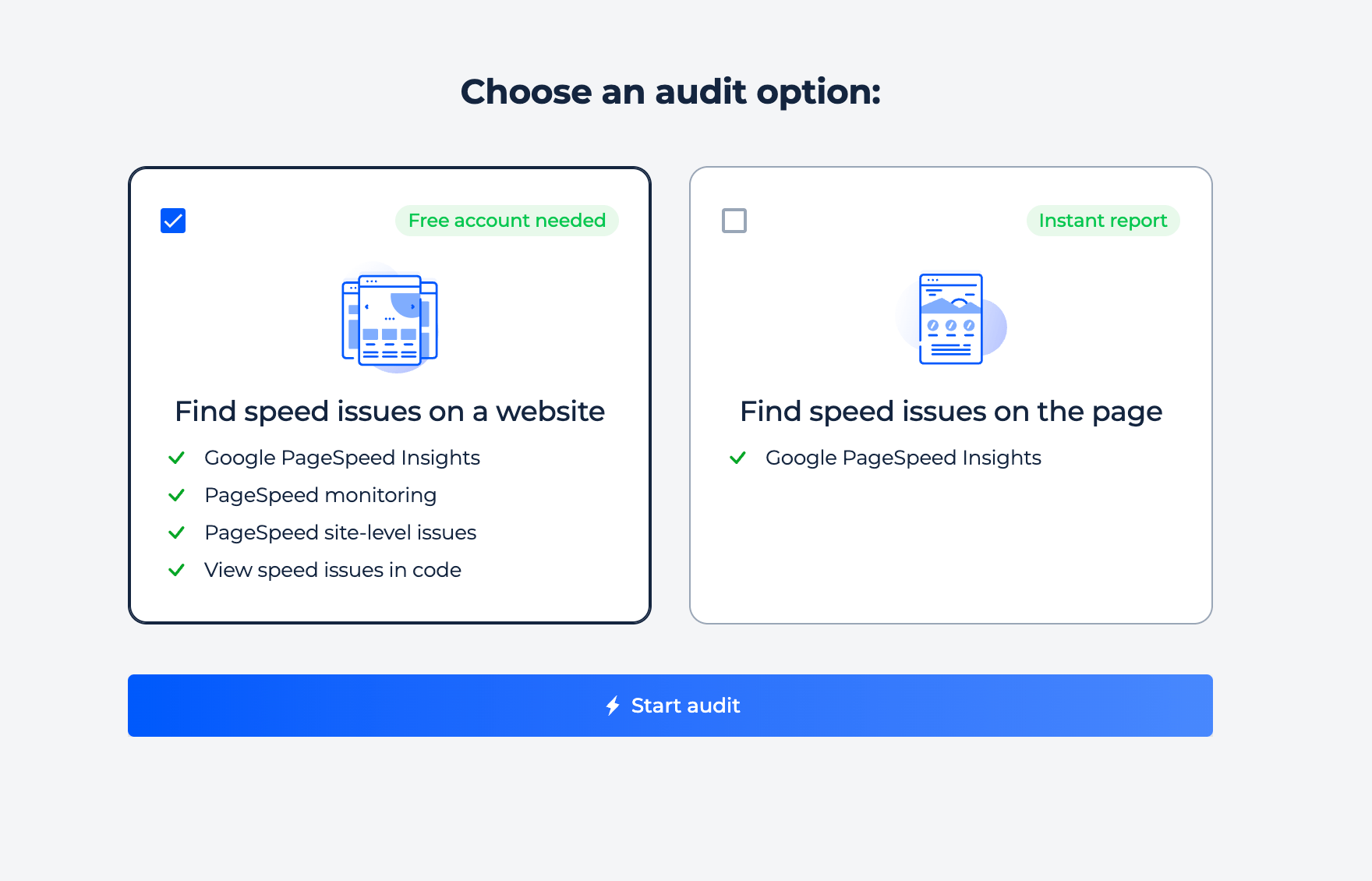

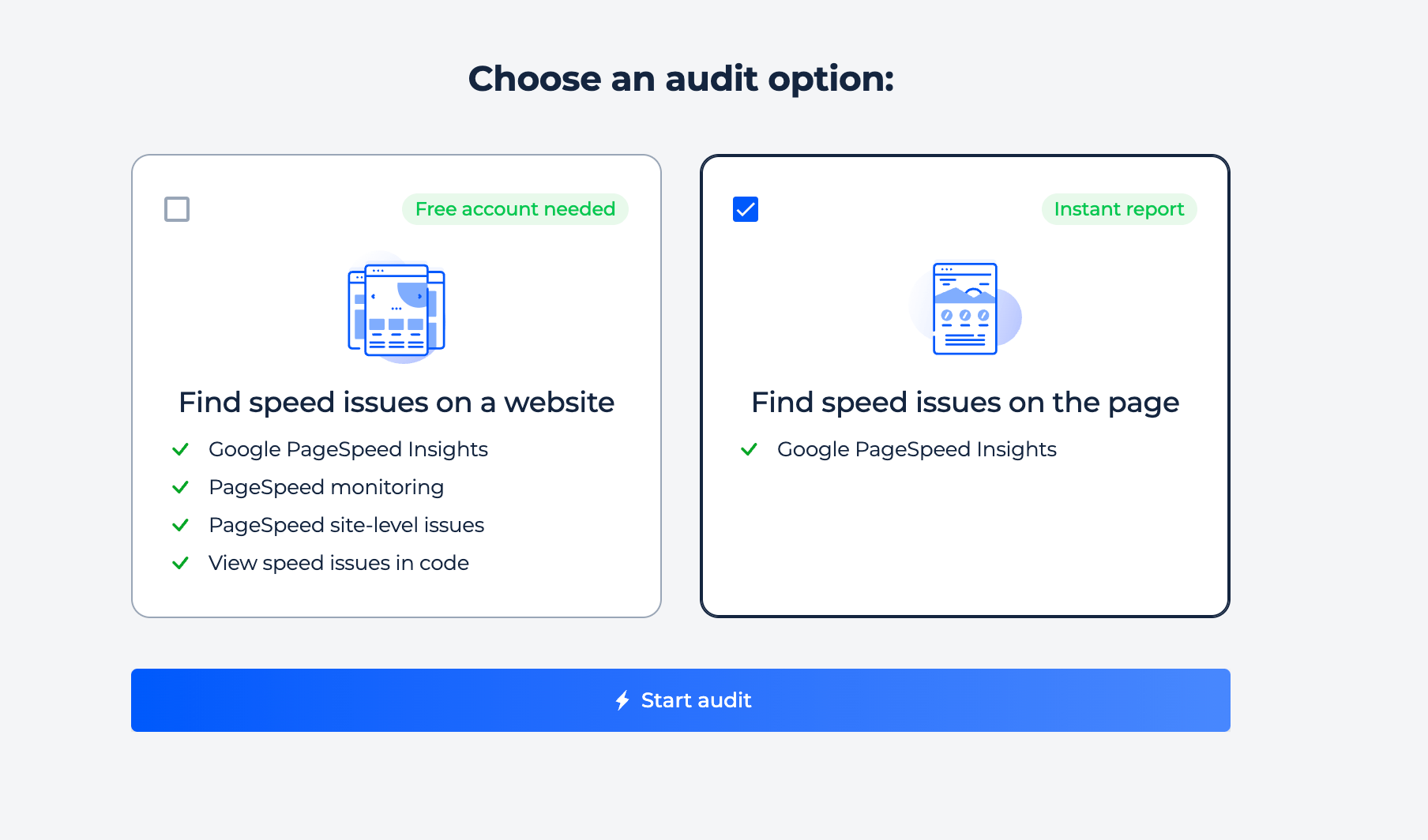

Speed Checker can be used in two ways: to test an entire website or to test a specific page. Choose the option you need, paste the domain or URL, and run the check. The tool will then start scanning. The steps below show how to start the scanning process.

Speed Test for Website

Step 1: Insert your domain

If you want to check a domain, press the “check domain” button. Enter your domain name into the field below, hit the button, and you’re ready to go!

Step 2: Get the result

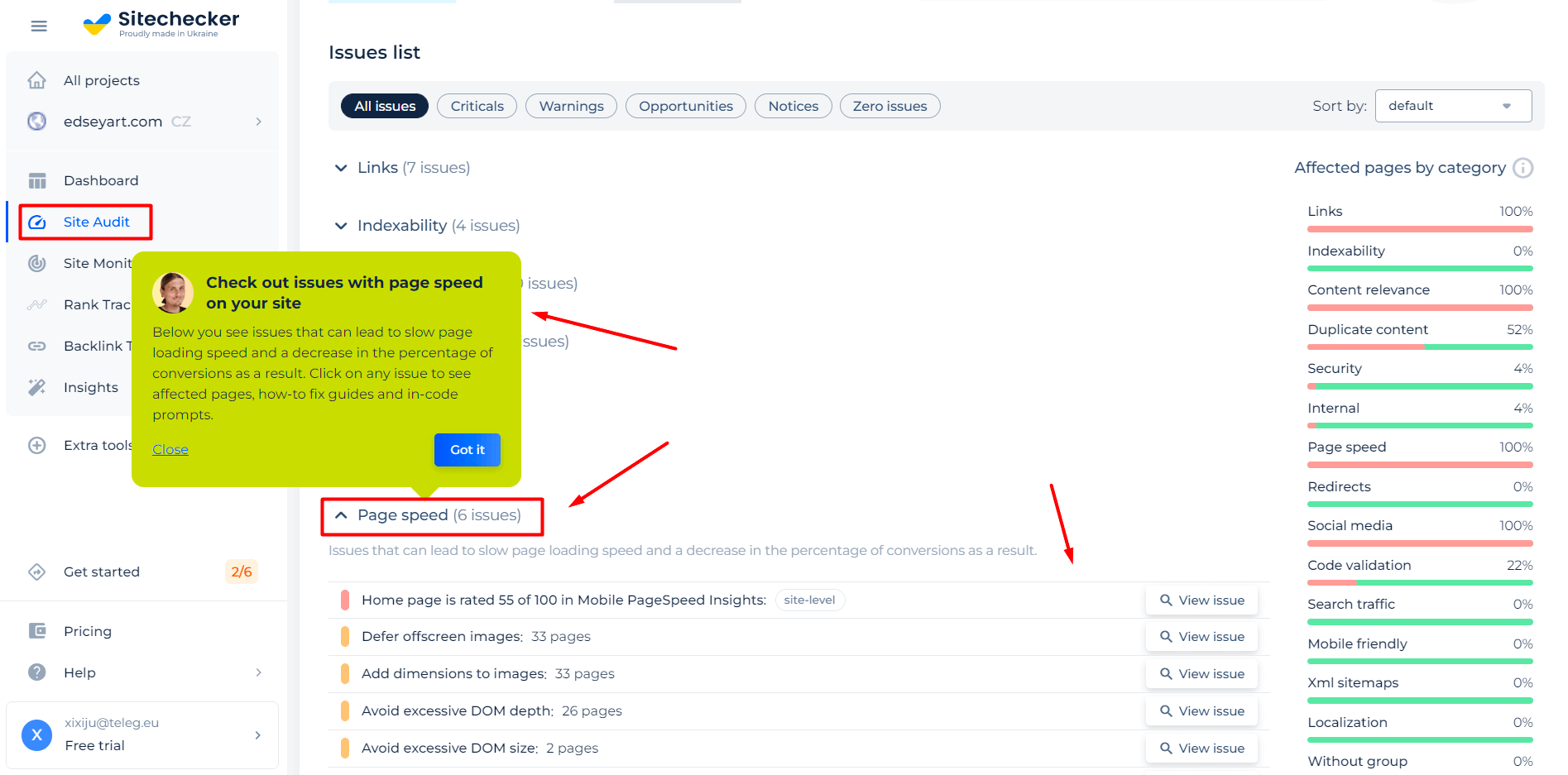

Scanning a domain creates an audit of the whole site, revealing page speed problems such as a low PageSpeed Insights score, a page size of more than 4 MB, CSS files larger than 15 KB, and so on. Click on any problem to see which pages are affected and to open a detailed PageSpeed Insights report. This way, you can quickly identify and correct existing flaws.
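The size checks mentioned above can be pictured as simple threshold rules. The sketch below is an illustration only, not the tool's implementation: the `PageStats` structure and `speed_issues` function are hypothetical, and it assumes 1 MB = 1024 × 1024 bytes and 1 KB = 1024 bytes, which the source does not specify.

```python
from dataclasses import dataclass, field
from typing import List

# Thresholds taken from the text; the byte conversions are an assumption.
MAX_PAGE_BYTES = 4 * 1024 * 1024  # "page size of more than 4 MB"
MAX_CSS_BYTES = 15 * 1024         # "CSS files larger than 15 KB"


@dataclass
class PageStats:
    """Hypothetical per-page measurements collected during a crawl."""
    url: str
    page_bytes: int
    css_bytes: List[int] = field(default_factory=list)


def speed_issues(page: PageStats) -> List[str]:
    """Return the size-related issues that apply to one page."""
    issues = []
    if page.page_bytes > MAX_PAGE_BYTES:
        issues.append("page size over 4 MB")
    if any(size > MAX_CSS_BYTES for size in page.css_bytes):
        issues.append("CSS file over 15 KB")
    return issues
```

Running `speed_issues` over every crawled page and grouping the pages by issue would give a list similar in spirit to the per-problem page lists in the audit.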

Additional features of the speed test when checking a domain

Furthermore, after you’ve completed the domain check, you’ll be given a comprehensive website audit report that will help you find any problems on your site. The report also includes suggestions for how to address the identified issues.

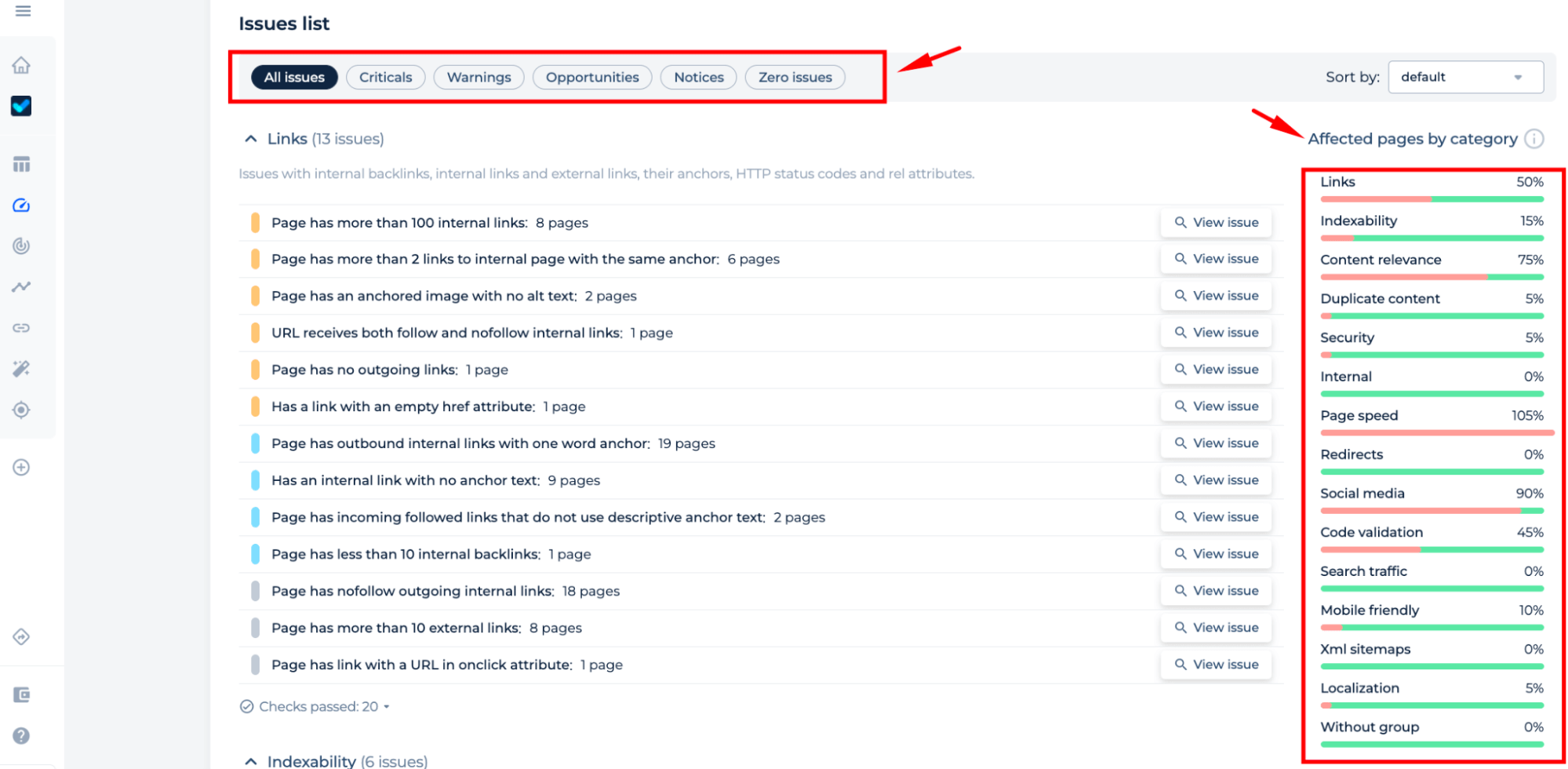

The report categorizes issues by type, such as Criticals, Warnings, Opportunities, Notices, and Zero issues, or by category, such as Links, Indexability, Content Relevance, etc. You can also utilize the Page Counter to track the total number of pages on your site and ensure that all identified issues are addressed for each page. This feature helps you pinpoint and resolve the concerns most relevant to your website’s success.

Page Speed Test

Step 1: Insert your URL

If you want to check a specific page, press the “check page” button. Enter your URL into the field below, and hit the button.

Step 2: Analyze the result

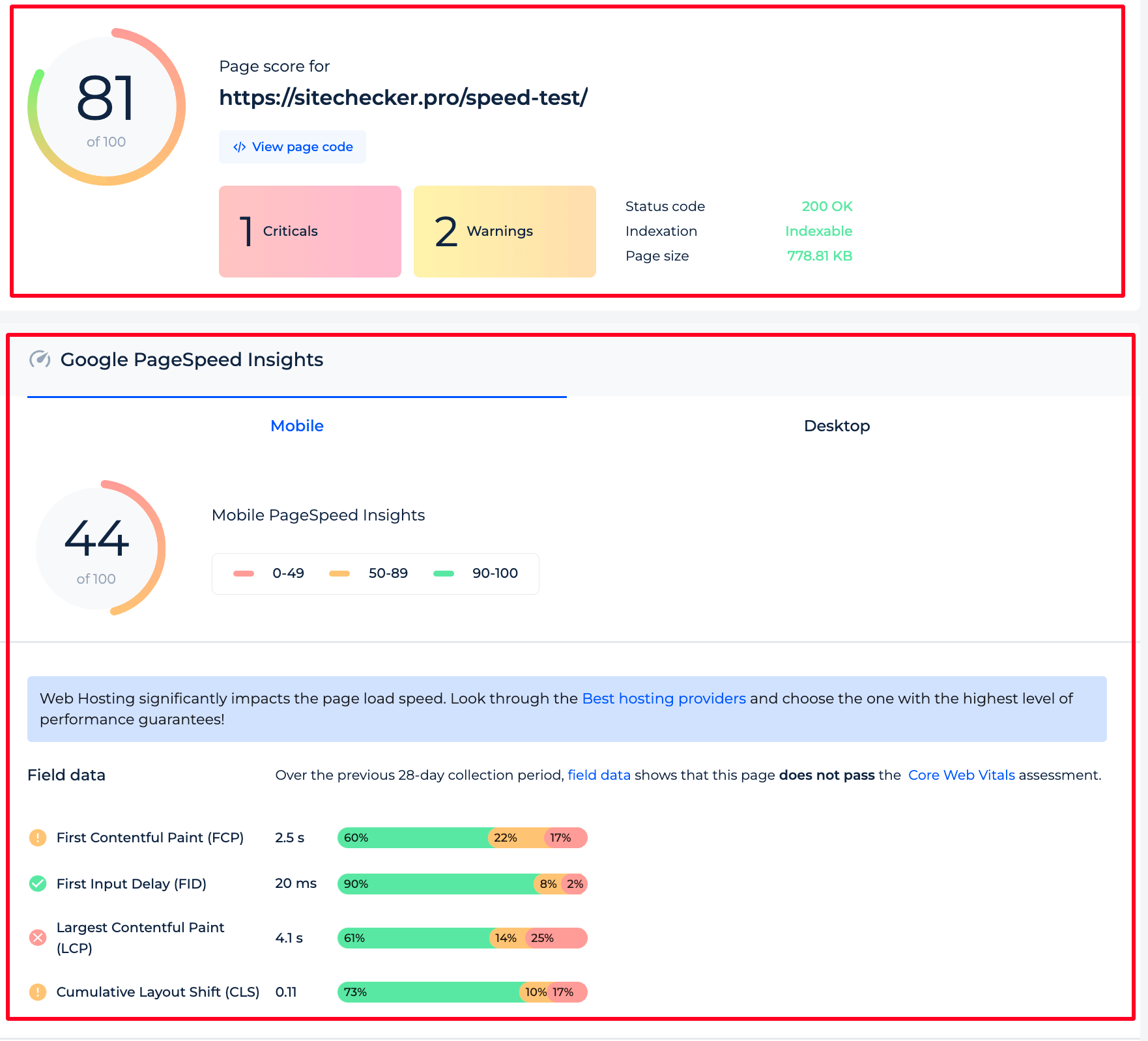

We will scan your page and detect the page load speed. It will take just a few seconds, and you will get the results for your URL immediately.

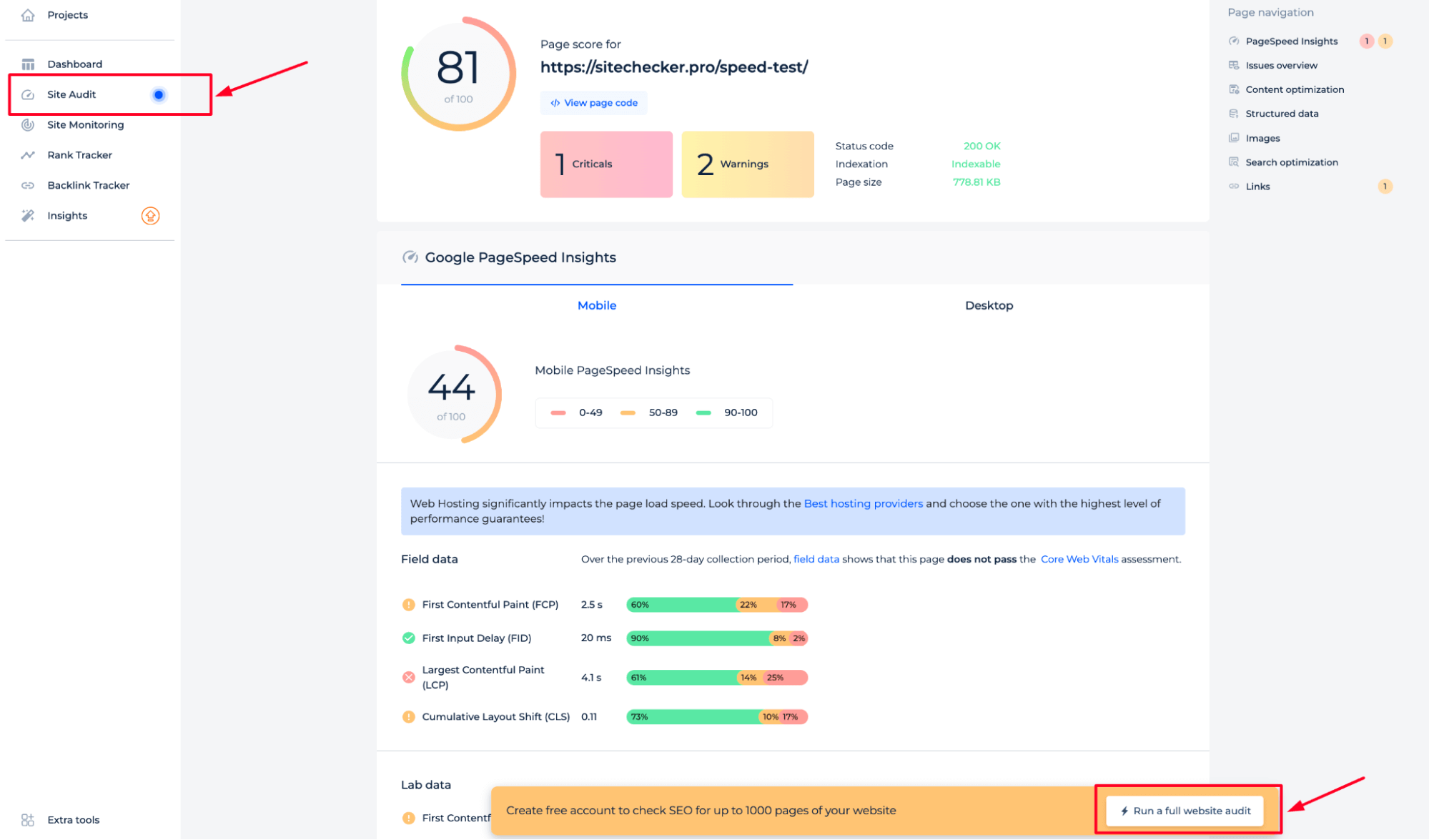

The results page displays the overall score of the page and, below it, the Google Speed Index alongside the load speed metrics.

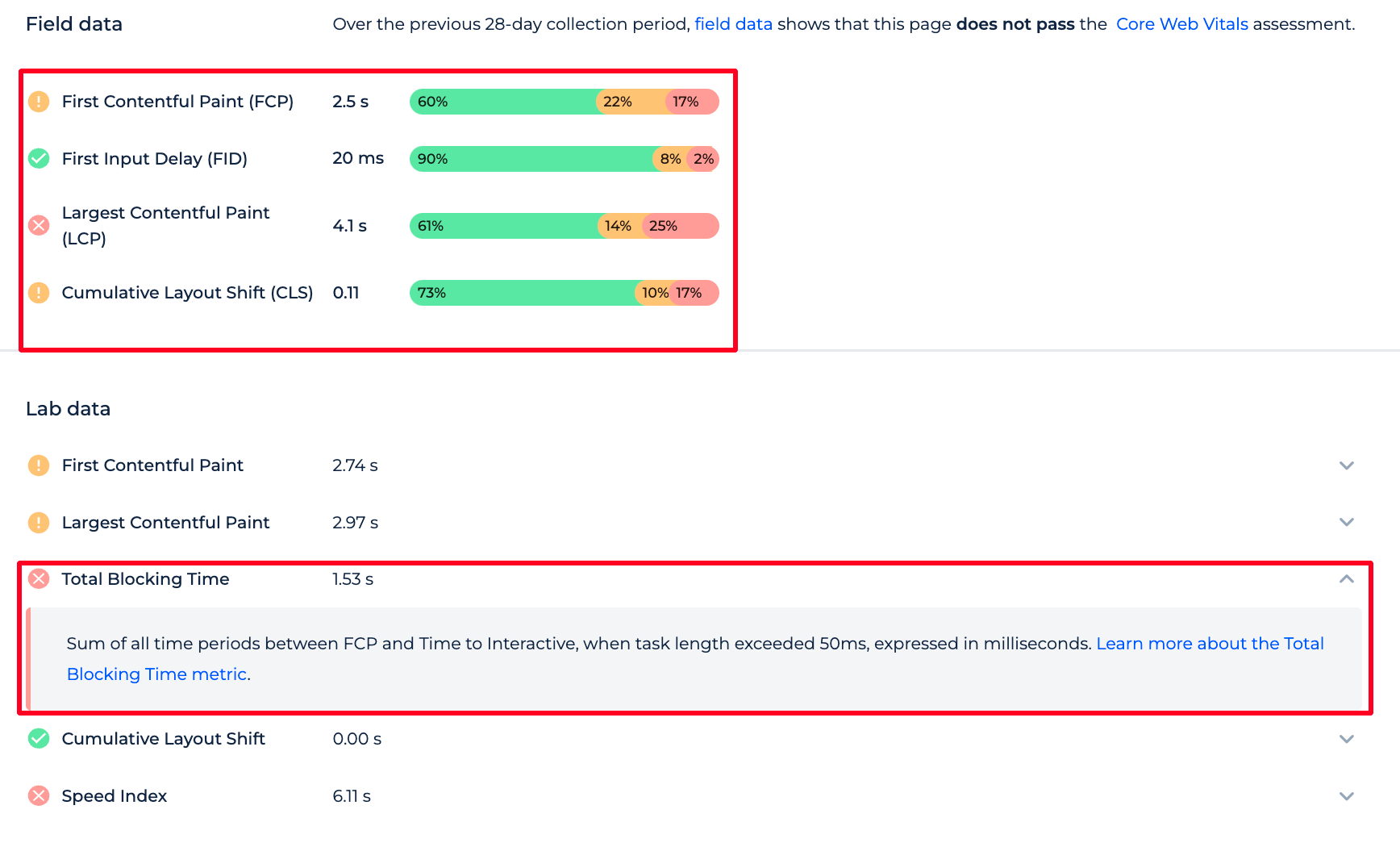

The Page Speed Checker tool displays a website’s performance metrics in two groups. ‘Field data’, which is collected from real users, shows whether the page meets Core Web Vitals (in this example, it does not) and details loading times and visual stability. ‘Lab data’, which is measured in a simulated page load, reveals interaction delays and visual load speed, guiding optimization efforts.

The Page Speed Checker provides you with useful tips on how to speed up your site’s pages and what mistakes you need to fix to do so.
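To make the pass or fail idea concrete, the sketch below rates LCP, FID, and CLS against the thresholds Google publishes for these metrics (good up to 2.5 s, 100 ms, and 0.1; poor above 4.0 s, 300 ms, and 0.25). The function names and the input format are assumptions for illustration. How the tool itself computes the verdict is not described in this guide.

```python
# (good_up_to, needs_improvement_up_to) for each metric.
# Units: LCP in seconds, FID in milliseconds, CLS is unitless.
THRESHOLDS = {
    "LCP": (2.5, 4.0),
    "FID": (100, 300),
    "CLS": (0.1, 0.25),
}


def classify(metric: str, value: float) -> str:
    """Rate a single metric value as good, needs improvement, or poor."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


def passes_core_web_vitals(values: dict) -> bool:
    """A page passes in this sketch only if every listed metric is good."""
    return all(classify(name, values[name]) == "good" for name in THRESHOLDS)
```

For example, `passes_core_web_vitals({"LCP": 3.1, "FID": 40, "CLS": 0.05})` is false because LCP falls in the "needs improvement" range.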

Also, you can easily switch between the Desktop and Mobile page speed results. That will help you improve the page speed and optimize it for all kinds of devices.

For a comprehensive understanding of the Website Speed Test tool’s capabilities after scanning a single URL, consider selecting “Run a full website audit.” You may also refer to the manual’s initial section on domain check to identify all speed-related issues across your site.

Additionally, an interactive demo project is available to demonstrate the practical benefits of using this tool.

Within the ‘Site Audit’ section, the tool presents a sample project that allows you to familiarize yourself with its features and learn how to leverage them for your website’s SEO assessment.

Additional features

The Page Loading Speed Tester presents a detailed audit of page performance, highlighting critical insights within a streamlined interface.

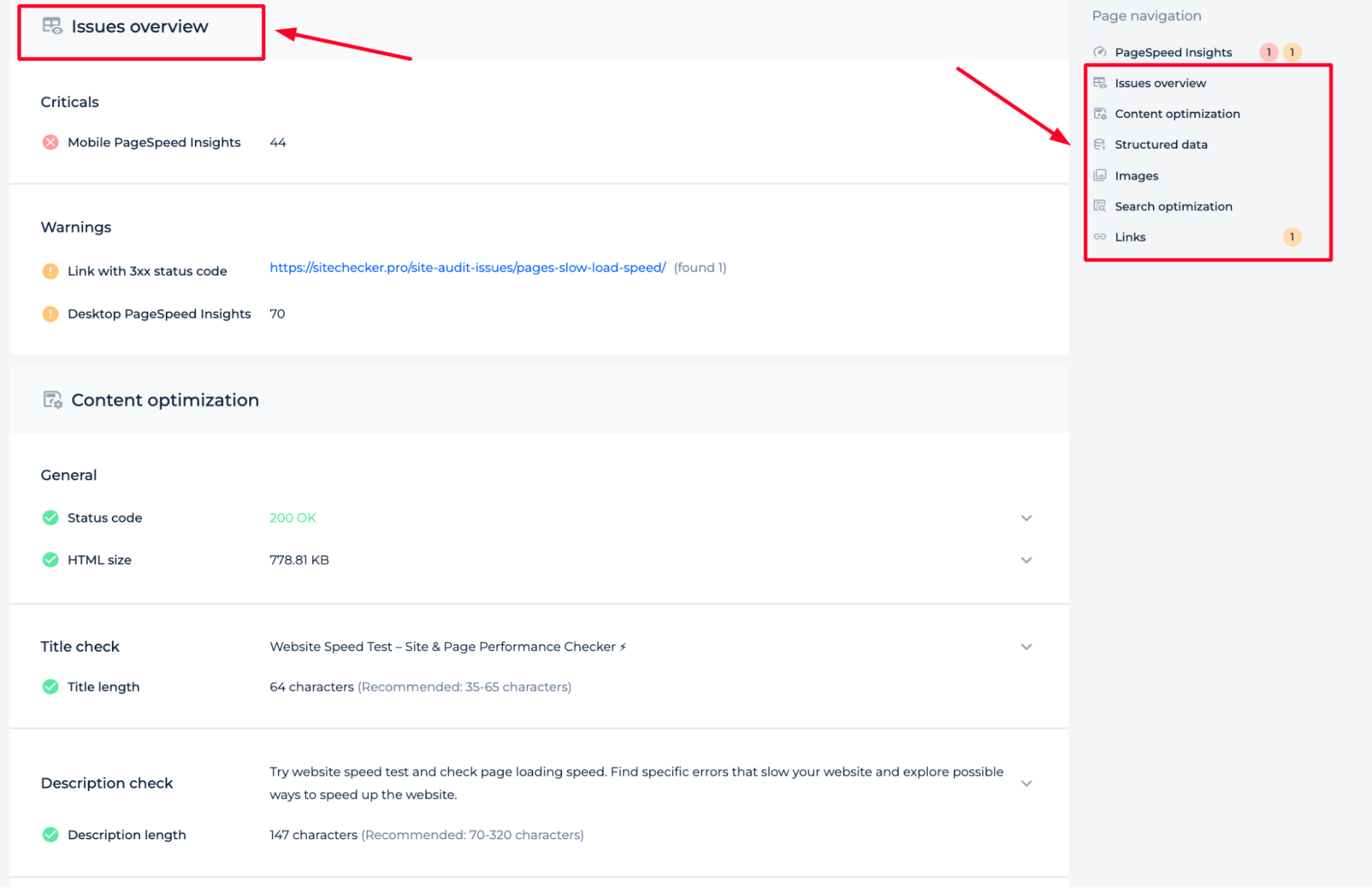

In the Issues Overview section, you can immediately grasp the severity of problems categorized under “Criticals” and “Warnings”.

The Page Navigation bar on the right allows quick access to various sections of the report, including Content Optimization, Structured Data, Images, Search optimization, and Links, enabling you to navigate efficiently through the analysis.

The report also evaluates performance-related issues, such as whether GZIP compression is enabled. Compressing text data reduces the amount of data transferred, which shortens load times and improves user experience, and that in turn can benefit SEO.

To ensure your site is easily accessible to search engine bots, you can use the Crawlability Checker to identify any issues preventing your pages from being properly crawled and indexed, enhancing your overall site optimization strategy.
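The effect of GZIP compression on text is easy to check locally. The following sketch uses Python's standard `gzip` module to compare the size of a piece of HTML before and after compression. The helper name `gzip_sizes` and the sample markup are made up for this example, and real savings depend on the content being served.

```python
import gzip
from typing import Tuple


def gzip_sizes(text: str) -> Tuple[int, int]:
    """Return (original_bytes, gzip_compressed_bytes) for a text payload."""
    raw = text.encode("utf-8")
    compressed = gzip.compress(raw)
    return len(raw), len(compressed)


if __name__ == "__main__":
    # Repetitive markup, as is typical for HTML, compresses well.
    sample_html = "<li class=\"item\">Speed test result</li>\n" * 200
    original, compressed = gzip_sizes(sample_html)
    print(f"original: {original} bytes, gzip: {compressed} bytes")
```

On a live site, compression is normally switched on in the web server or CDN configuration rather than in application code.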

Final Idea

The Website Speed Checker is a comprehensive tool that measures web page performance using metrics like FCP, FID, LCP, and CLS, offering performance grading and actionable insights for both desktop and mobile sites. Its key features include a unified dashboard, a user-friendly interface, and a complete SEO toolset. For usage, it provides free tests for domains or specific pages, with the option of a full site audit. The tool also furnishes detailed audits, identifies issues, and suggests optimizations to enhance SEO performance, all accessible through an intuitive interface.