Sitechecker SEO Blog

Stay ahead in SEO with the Sitechecker SEO Blog. Explore expert tips, the latest trends, and powerful strategies to boost your website’s visibility!

How to Find & Fix Broken Links in Google Search Console

Roman Rohoza

May 14, 2025

SEO

What is Error 500 "Internal Server Error": Meaning and How to Fix Issue

Ivan Palii

Jul 27, 2023

What Is Localhost? Function, and Their Impact on SEO. Ways to Troubleshoot Common Localhost Errors

Ivan Palii

Oct 3, 2023

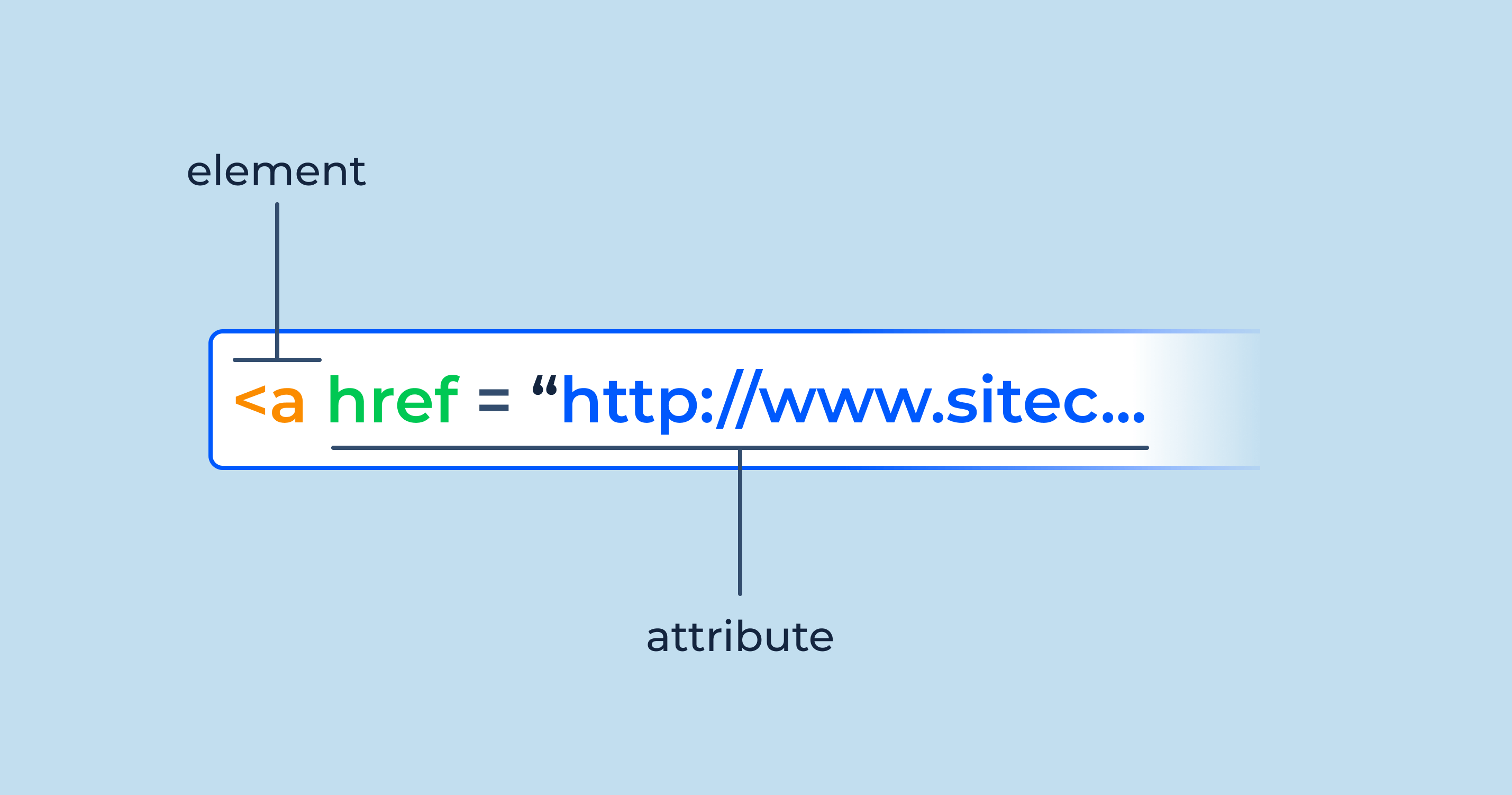

Absolute URL vs. Relative URL. What is the Difference Between Them.

Ivan Palii

Oct 3, 2023

What Is Google Indexing Process. Tips on How to Check and Monitor Indexation

Ivan Palii

Sep 18, 2023

Interviews

Interview

Nik Vujic on the Webflow vs WordPress for SEO and Running B2B SaaS Agency

Ivan Palii

Sep 17, 2024

Interview

SEO Tips from Spencer Haws, SEO Blogger and Founder of NichePursuits.com

Ivan Palii

May 17, 2021

Marketing

What is a Website Builder? Understand the Pros and Cons of Website Builders

Ivan Palii

Dec 11, 2023

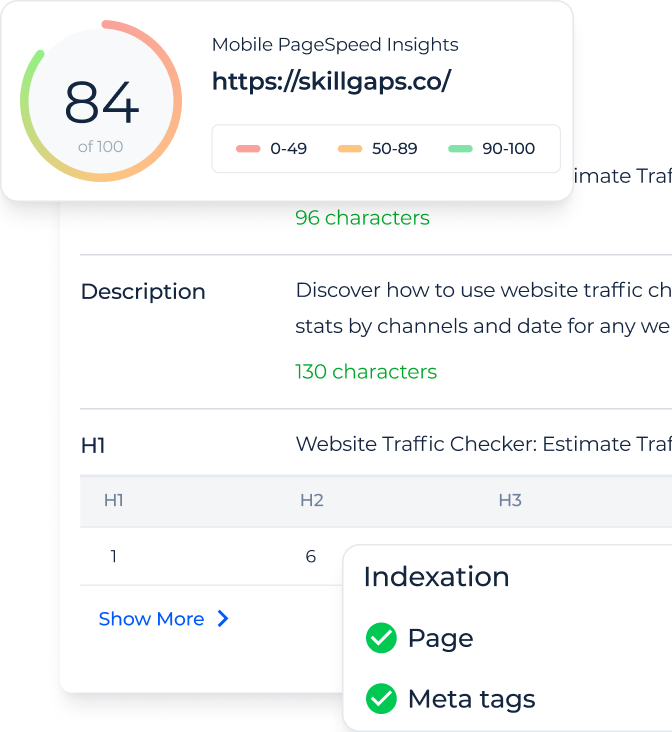

Optimize your site's health & unlock potential with our SEO Checker & Audit Tool

Improve your website’s on-page and technical SEO

Monitor all your website’s changes 24/7

Monitor your website rankings

Monitor and analyze all the backlinks

Product & Studies

Web Hosting Reviews

Web Hosting

Interserver.net Reviews: What Points You Should Consider for SEO

Iryna Krutko

Mar 17, 2021