A robots.txt file is a text file placed on a website to tell search engine robots (like Googlebot) which pages on that domain can be crawled. If your website has a robots.txt file, you can verify it with our free Robots.txt generator tool. You can also add a link to an XML sitemap to the robots.txt file.
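As a rough illustration of the sitemap link, the sketch below parses a sample robots.txt with Python’s standard `urllib.robotparser` module and reads back the sitemap URLs it declares. The file contents, the `example.com` URLs, and the helper name `list_sitemaps` are assumptions made up for this example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real file lives at https://<your-domain>/robots.txt
ROBOTS_WITH_SITEMAP = """\
User-agent: *
Disallow: /tmp/

Sitemap: https://example.com/sitemap.xml
"""


def list_sitemaps(robots_txt: str) -> list[str]:
    """Return the sitemap URLs declared in a robots.txt string."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() (Python 3.8+) returns None when no Sitemap line is present.
    return parser.site_maps() or []


print(list_sitemaps(ROBOTS_WITH_SITEMAP))
```

An empty list from this helper would suggest that the file has no `Sitemap:` line.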

Before search engine bots crawl your site, they first look for the site’s robots.txt file. There they find instructions on which pages may be crawled and which may not.
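The check a well-behaved bot performs can be approximated with Python’s standard `urllib.robotparser`. This is only a minimal sketch: the rules and the `example.com` URLs are invented, and real search engines apply their own, more elaborate matching (wildcards, for instance, are not handled by this module).

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: every bot is kept out of /admin/ and /cart/.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
"""

parser = RobotFileParser()
parser.parse(SAMPLE_ROBOTS_TXT.splitlines())

# Ask whether a given user agent may crawl a given URL.
print(parser.can_fetch("Googlebot", "https://example.com/blog/post"))    # True
print(parser.can_fetch("Googlebot", "https://example.com/admin/login"))  # False
```

To test a live site instead of a string, `RobotFileParser` also offers `set_url()` and `read()`, which download the file before the same `can_fetch()` calls are made.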

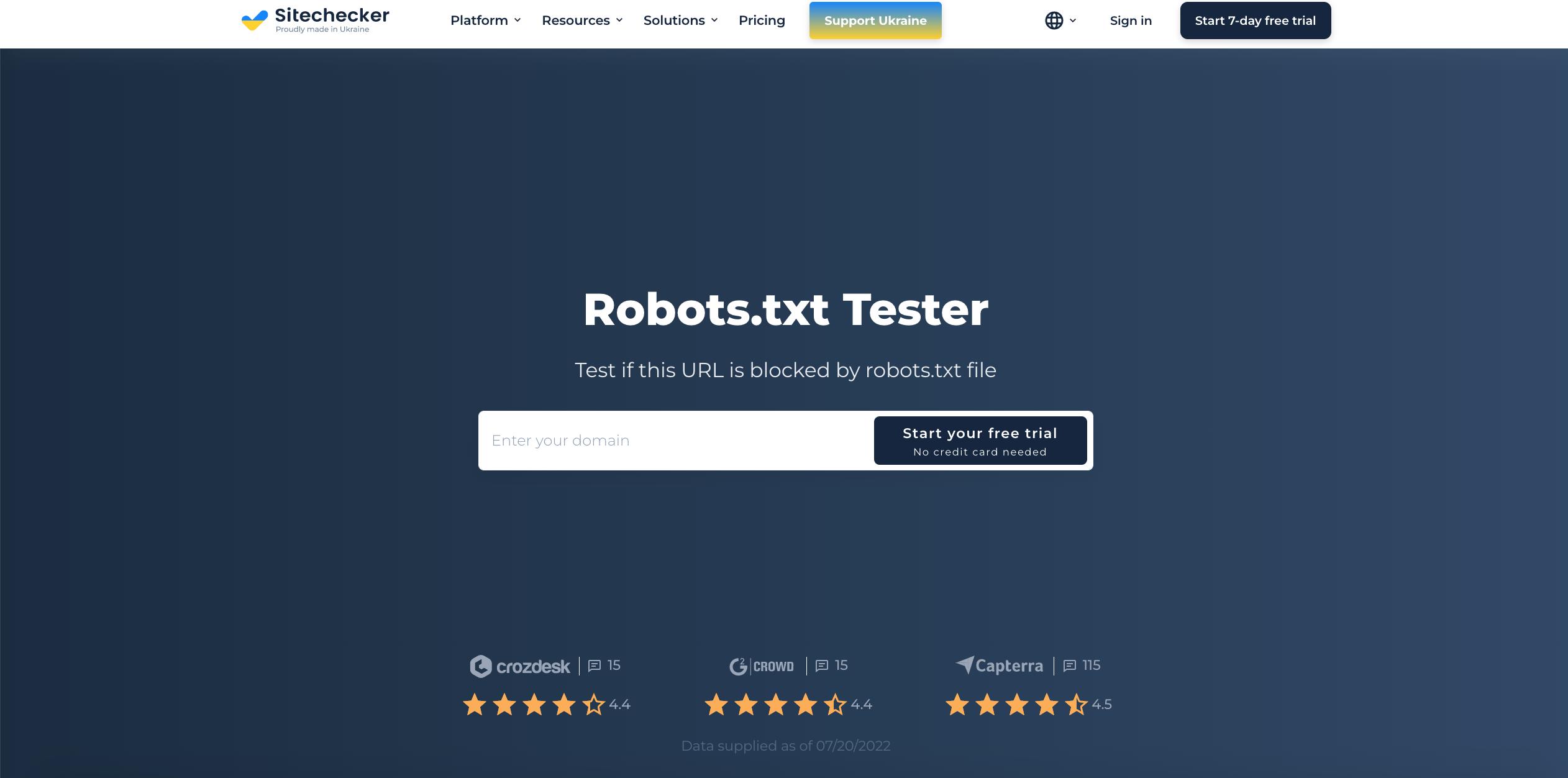

With this simple file, you can set crawling options for search engine bots. To check whether a robots.txt file is configured on your site, you can use our free and simple Robots.txt Tester tool. This article explains how to validate the file with the tool and why it’s important to use Robots.txt Tester on your site.

Robots.txt Checker Tool Usage: a Step-by-Step Guide

The tool lets you test the robots.txt file on your own domain or on any other domain you want to analyze.

The robots.txt checker tool will quickly detect errors in the robots.txt file settings. Our validator tool is very easy to use and can help even an inexperienced professional or webmaster check a Robots.txt file on their site. You will get the results in a few moments.

Step 1: Insert your URL and start a free trial

You can sign up for a free trial with us without having to provide a credit card. All you need to do is confirm your email address or use your Google account. Getting started is quick and easy.

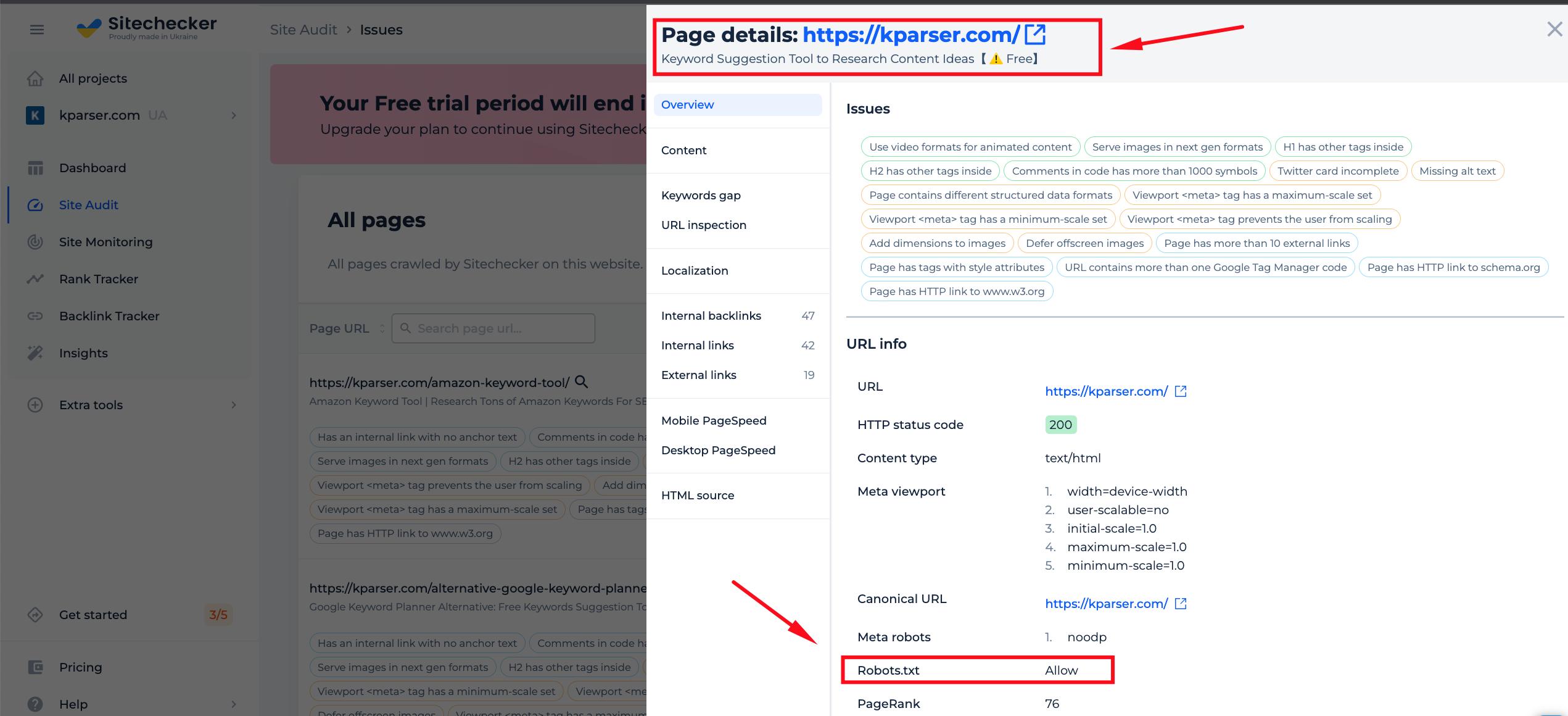

Step 2: Get the Robots.txt tester results

After you add a URL to our tool, we will quickly crawl the site. In just seconds, you will get results that include page details and data on robots.txt directives for the specific page. The audit also includes an overview of all issues present on each web page.

Features of Robots.txt Checker

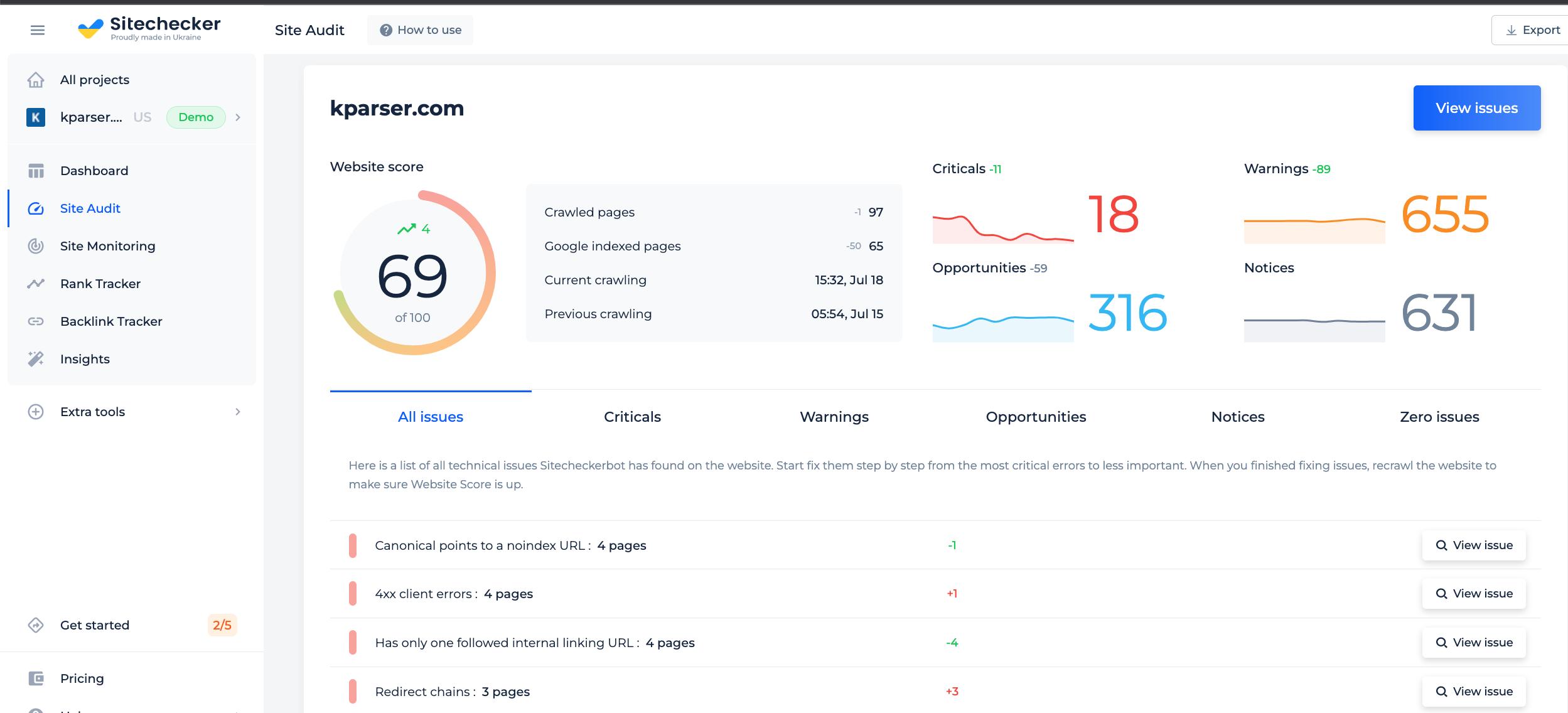

Once you’ve created your trial account, a full-site audit can identify various types of problems and list the URLs where those issues occur. Additionally, we provide instructions and video guides on how to fix the identified issues. This is an invaluable service that can help improve your website’s performance and ranking.

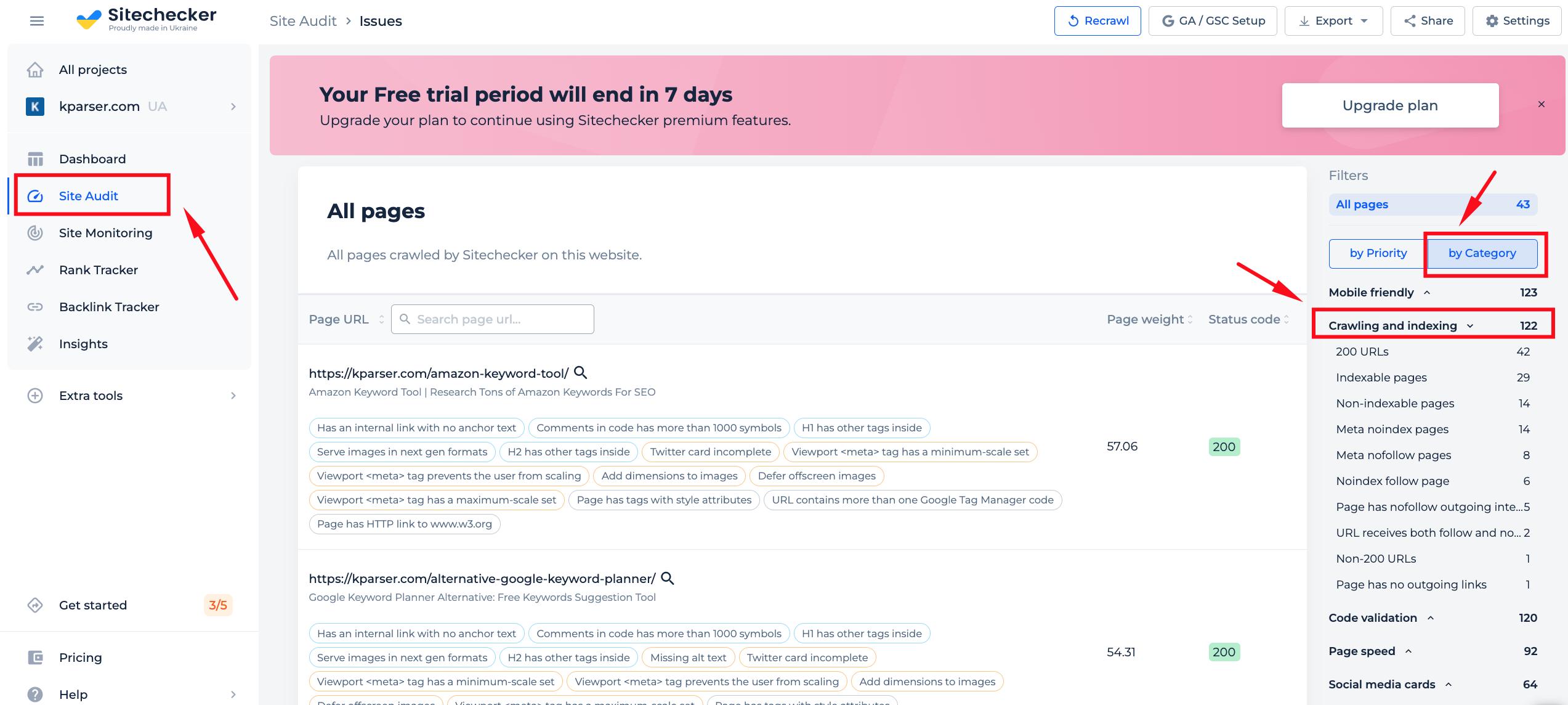

The “Crawling and Indexing” section will help you spot any indexing issues on your site so they can be fixed quickly. You’ll also receive notifications if these problems appear later on newly added pages.

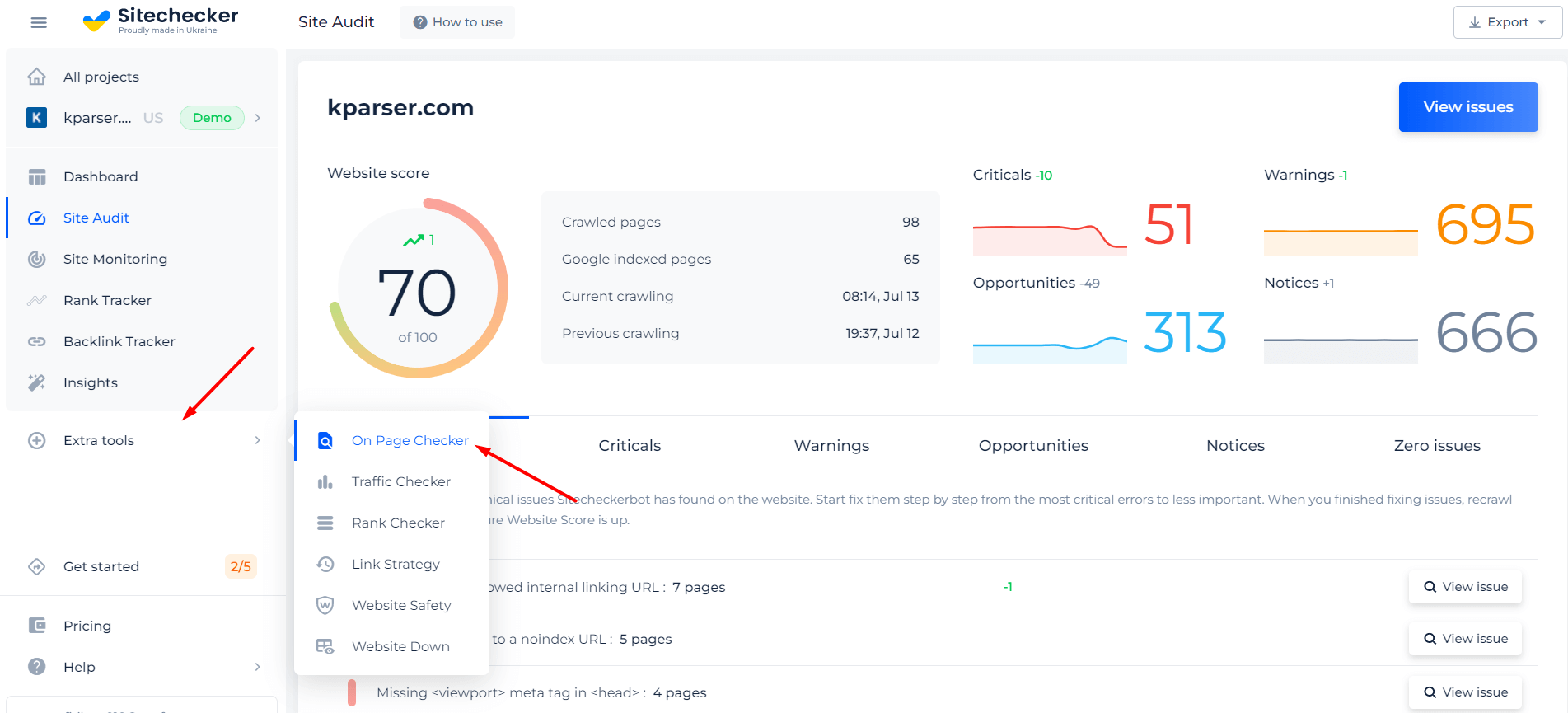

If you want to avoid issues with the robots.txt directives that affect a particular page, you can use the on-page checker tool in your account. It helps you confirm that everything is set up correctly before problems appear.

Find all pages with indexing issues right now!

Run a full audit to find and fix robots.txt issues and improve your technical SEO.

Cases When Robots.txt Checker is Needed

Problems with the robots.txt file, or its absence, can hurt your search engine rankings and cost you positions in the SERPs. Reviewing this file and what its directives mean before search engines crawl your website helps you avoid crawling trouble. It also keeps pages that you don’t want crawled from ending up in the index. Use this file to restrict access to certain pages on your site. If the file is empty, the SEO crawler may report a “Robots.txt not Found” issue.

You can create the file with a simple text editor. First, specify the user agent that the instructions apply to, then add a blocking directive such as Disallow. After this, list the URL paths you want to restrict from crawling. Note that Google does not honor a noindex rule placed in robots.txt, so use a noindex meta tag when a page must stay out of the index. Before publishing the file, verify that it’s correct. Even a typo can cause Googlebot to ignore your instructions.
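To show how a typo might be caught before the file goes live, here is a small illustrative linter in Python. It is a sketch under stated assumptions, not how any particular checker works: the function name `lint_robots_txt`, the list of accepted directives, and the messages are all invented for this example.

```python
# Directives this sketch accepts; an assumption, not an official list.
KNOWN_DIRECTIVES = {"user-agent", "disallow", "allow", "sitemap", "crawl-delay"}


def lint_robots_txt(text: str) -> list[tuple[int, str]]:
    """Return (line_number, message) pairs for lines that look malformed."""
    problems = []
    seen_user_agent = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        if ":" not in line:
            problems.append((number, "missing ':' separator"))
            continue
        name, value = (part.strip() for part in line.split(":", 1))
        key = name.lower()
        if key not in KNOWN_DIRECTIVES:
            problems.append((number, f"unknown directive '{name}'"))
        elif key == "user-agent":
            seen_user_agent = True
        elif key in {"allow", "disallow"}:
            if not seen_user_agent:
                problems.append((number, f"'{name}' appears before any User-agent line"))
            if value and not value.startswith(("/", "*")):
                problems.append((number, "path should start with '/'"))
    return problems


draft = "User-agent: *\nDissalow: /admin/\nDisallow: private/\n"
for line_number, message in lint_robots_txt(draft):
    print(f"line {line_number}: {message}")
# line 2: unknown directive 'Dissalow'
# line 3: path should start with '/'
```

A misspelled directive such as `Dissalow` is not an error a crawler reports back to you; the rule is simply skipped, which is why a check like this is worth running before publishing.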

Which Robots.txt Checker Tools Can Help

When you generate a robots.txt file, you need to verify that it contains no mistakes. A few tools can help you with this task.

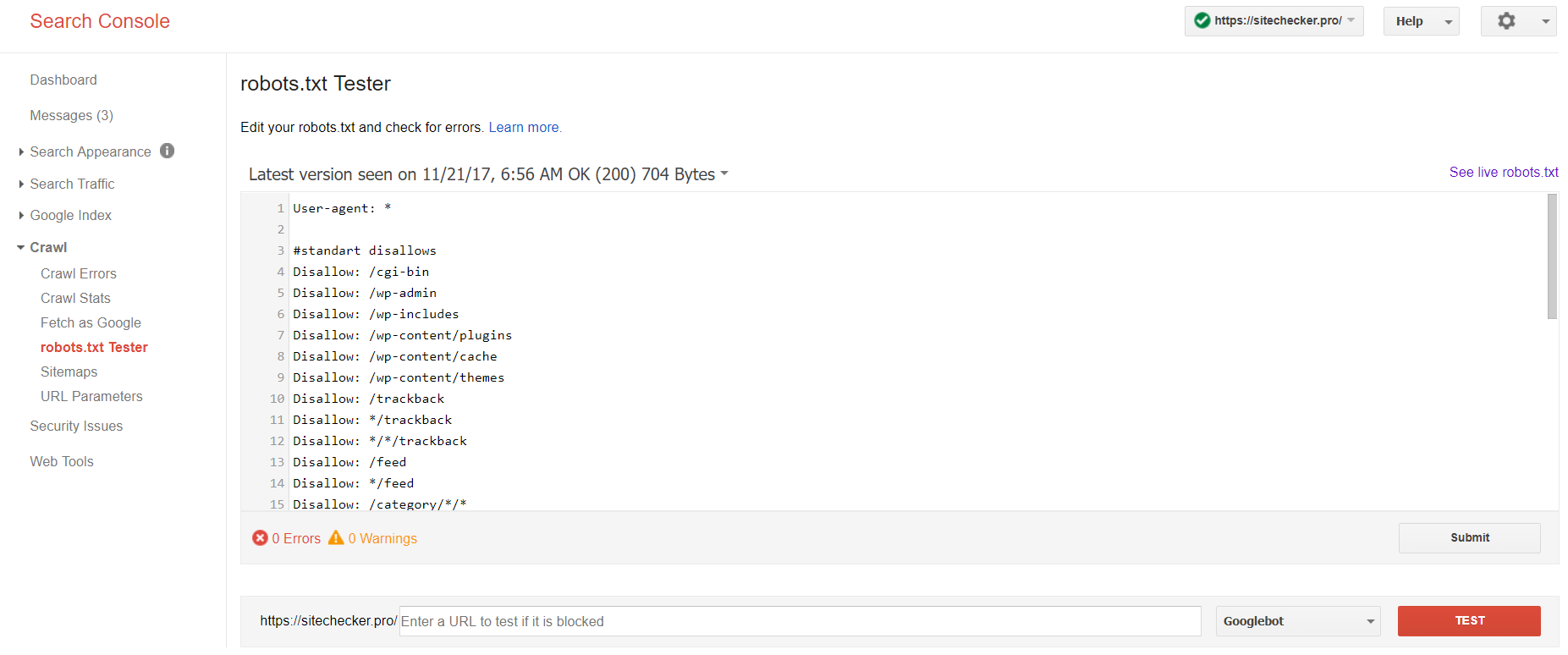

Google Search Console

Currently, only the old version of Google Search Console has a tool for testing the robots file. Sign in to the account where your site is verified and use this path to find the validator.

Old version of Google Search Console > Crawl > Robots.txt Tester

This robots.txt test allows you to:

- detect all your mistakes and possible problems at once;

- check for mistakes and make the needed corrections right here to install the new file on your site without any additional verifications;

- check whether the pages you want to keep out of crawling are properly blocked and whether the pages that should be indexed are properly open.

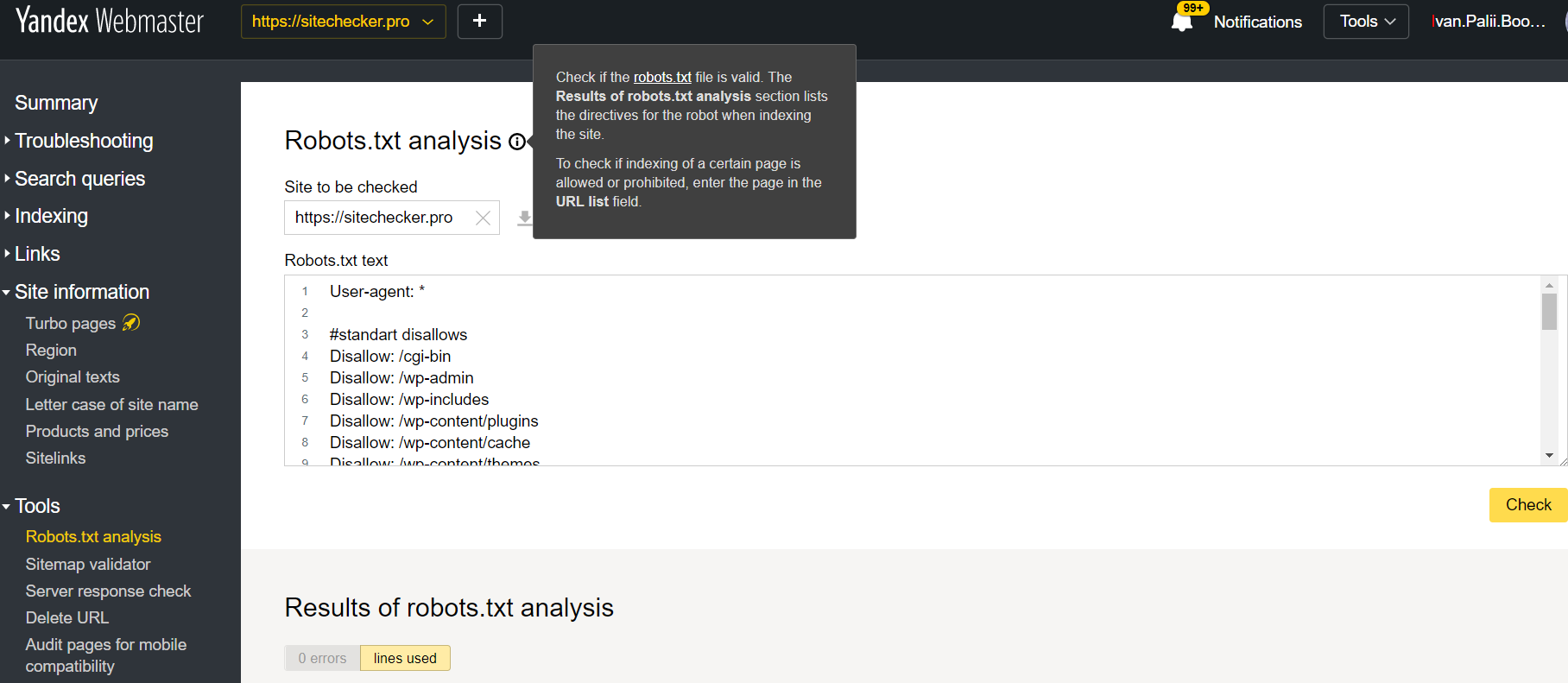

Yandex Webmaster

Sign in to the Yandex Webmaster account where your site is verified and use this path to find the tool.

Yandex Webmaster > Tools > Robots.txt analysis

This tester offers nearly the same verification capabilities as the one described above. The differences are:

- you don’t need to log in or prove ownership of a site, so you can verify a robots.txt file right away;

- there is no need to enter pages one by one: the entire list of pages can be checked in one session;

- you can make certain that Yandex properly identified your instructions.

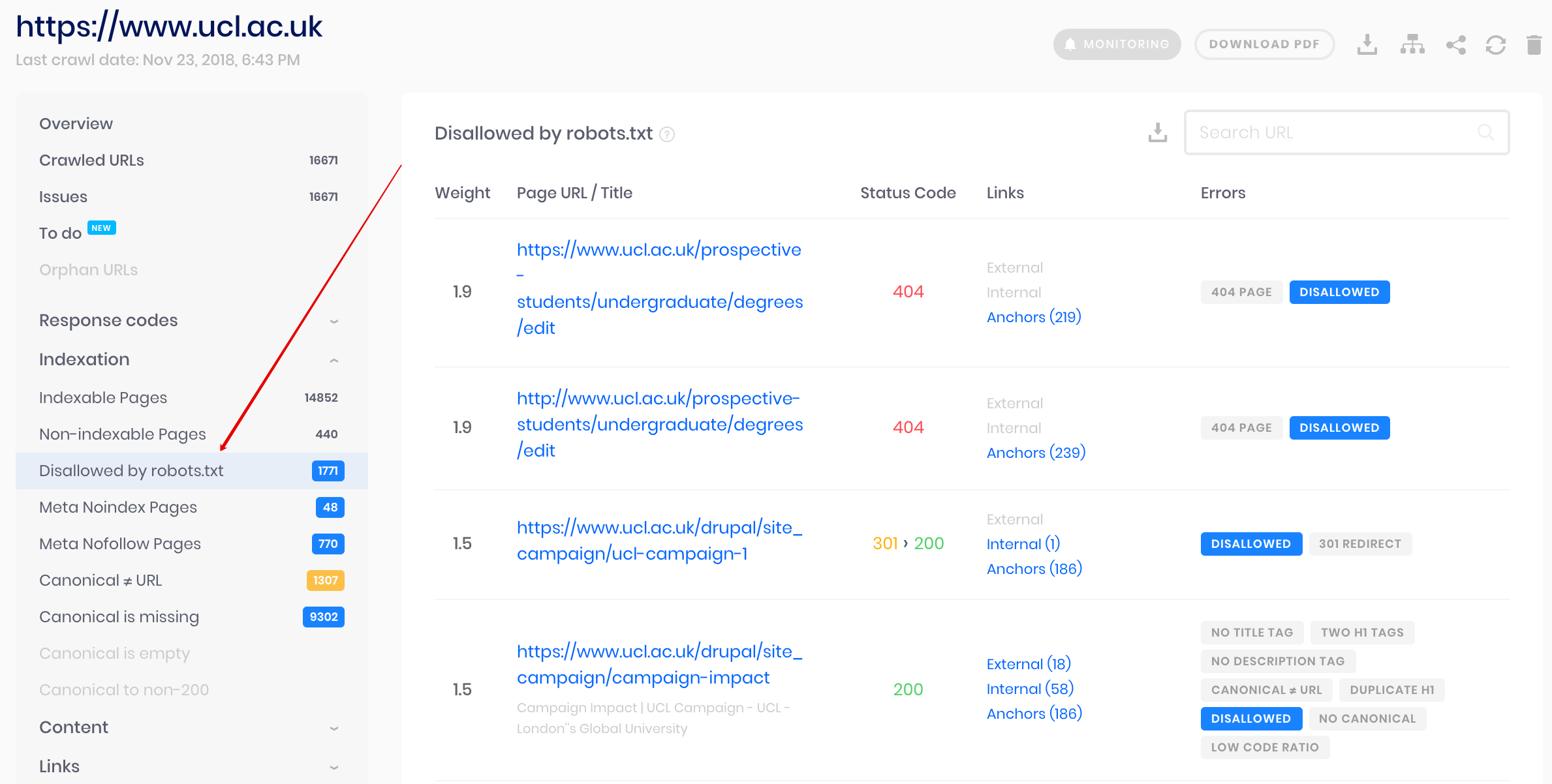

Sitechecker crawler

This is a solution for bulk checks when you need to crawl a website and monitor its robots.txt continuously. Our crawler audits the whole website and detects which URLs are disallowed in robots.txt and which are closed from indexing via the noindex meta tag.

Please note: to detect disallowed pages, you should crawl the website with the “ignore robots.txt” setting enabled.
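The idea behind a bulk check can be sketched in a few lines of Python with the standard `urllib.robotparser` module. This is not the Sitechecker crawler’s implementation, only an illustration: the helper name `split_by_robots`, the sample rules, and the URL list are assumptions.

```python
from urllib.robotparser import RobotFileParser


def split_by_robots(robots_txt, urls, user_agent="Googlebot"):
    """Split a list of URLs into (allowed, disallowed) for one user agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    allowed, disallowed = [], []
    for url in urls:
        bucket = allowed if parser.can_fetch(user_agent, url) else disallowed
        bucket.append(url)
    return allowed, disallowed


# Hypothetical rules and URLs for illustration only.
rules = "User-agent: *\nDisallow: /search\nDisallow: /drafts/\n"
pages = [
    "https://example.com/",
    "https://example.com/search?q=shoes",
    "https://example.com/drafts/new-post",
    "https://example.com/blog/robots-guide",
]

allowed, disallowed = split_by_robots(rules, pages)
print("Disallowed:", disallowed)
```

The URL list has to come from somewhere other than a robots-respecting crawl, which is why the “ignore robots.txt” setting matters: a crawler that obeys the file never reaches the blocked pages and so cannot list them.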

Detect and analyze not only robots.txt problems but also other kinds of SEO issues on your site!

Run a full audit to find and fix your website’s issues and improve your SERP results.

Why Do I Need to Check My Robots.txt File?

Robots.txt tells search engines which URLs on your site they can crawl, mainly to avoid overloading your site with requests. It is a good idea to check that the file is valid and works as intended.