What is a 5xx server error in Google Search Console?

A 5xx server error in Google Search Console means that Googlebot tried to access a page on your site, but your server couldn’t complete the request. These server-side issues – like crashes, timeouts, or overloads – stop Google from viewing your pages correctly.

Common causes of 5xx errors

- Server overload due to traffic spikes or limited hosting resources

- Bugs or errors in server-side code (e.g., PHP, Node.js)

- Misconfigured firewall or security rules blocking Googlebot

- Timeout issues caused by slow scripts or heavy database queries

- CMS or plugin conflicts, especially after updates

- Temporary maintenance or server downtime

- Misconfigured caching or content delivery settings

How 5xx errors impact SEO

- Pages that return 5xx errors can’t be indexed, and Google eventually drops already-indexed URLs that keep returning server errors, so they stop appearing in search results

- Frequent errors may signal to Google that your site is unreliable

- Your crawl budget can be wasted on failed requests, slowing down the indexing of new content

- Important pages might temporarily disappear from the index, hurting visibility

- Persistent server issues can lead to drops in rankings over time

- Poor server performance can also affect user experience, especially if users face the same errors

Step-by-step guide to fix 5xx errors

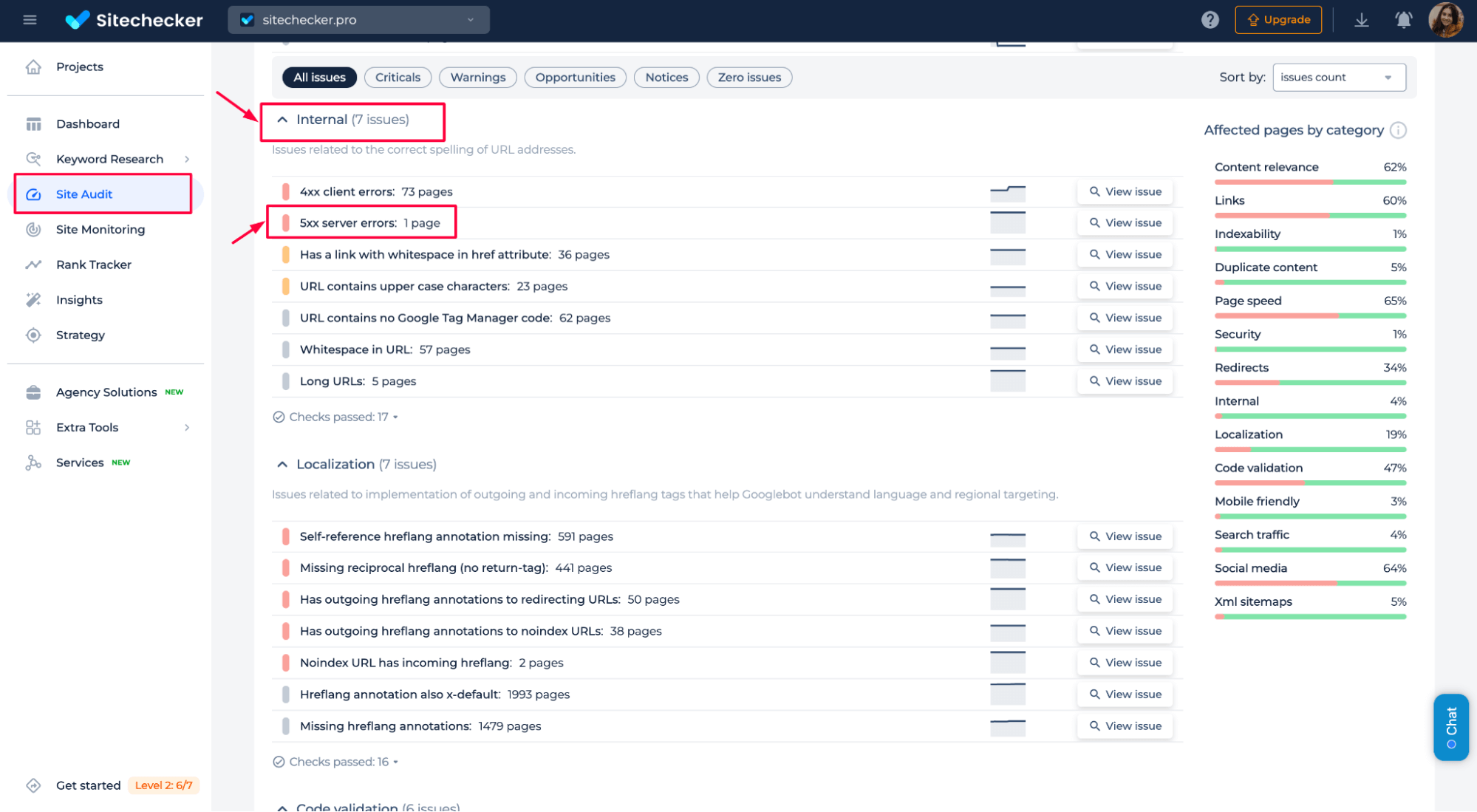

Step 1: Identify affected pages

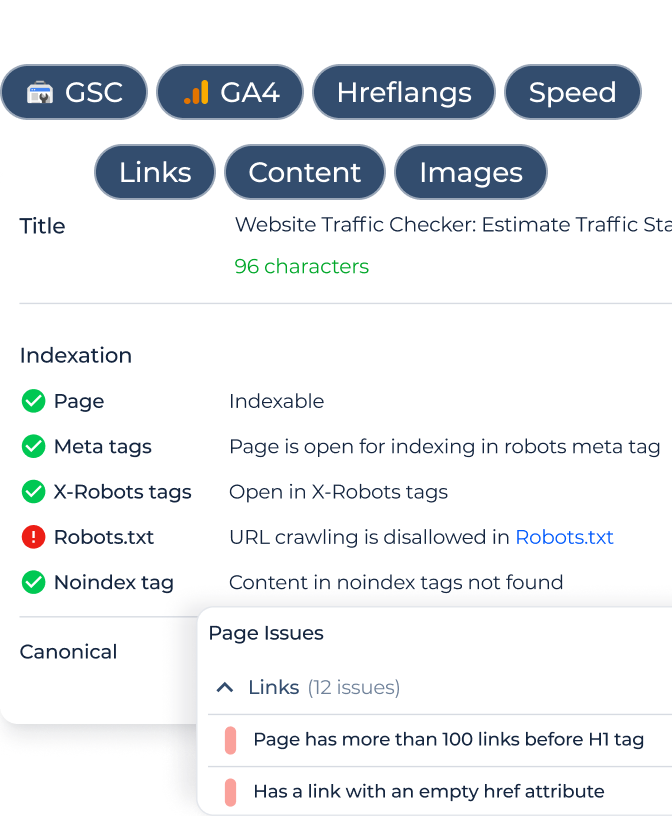

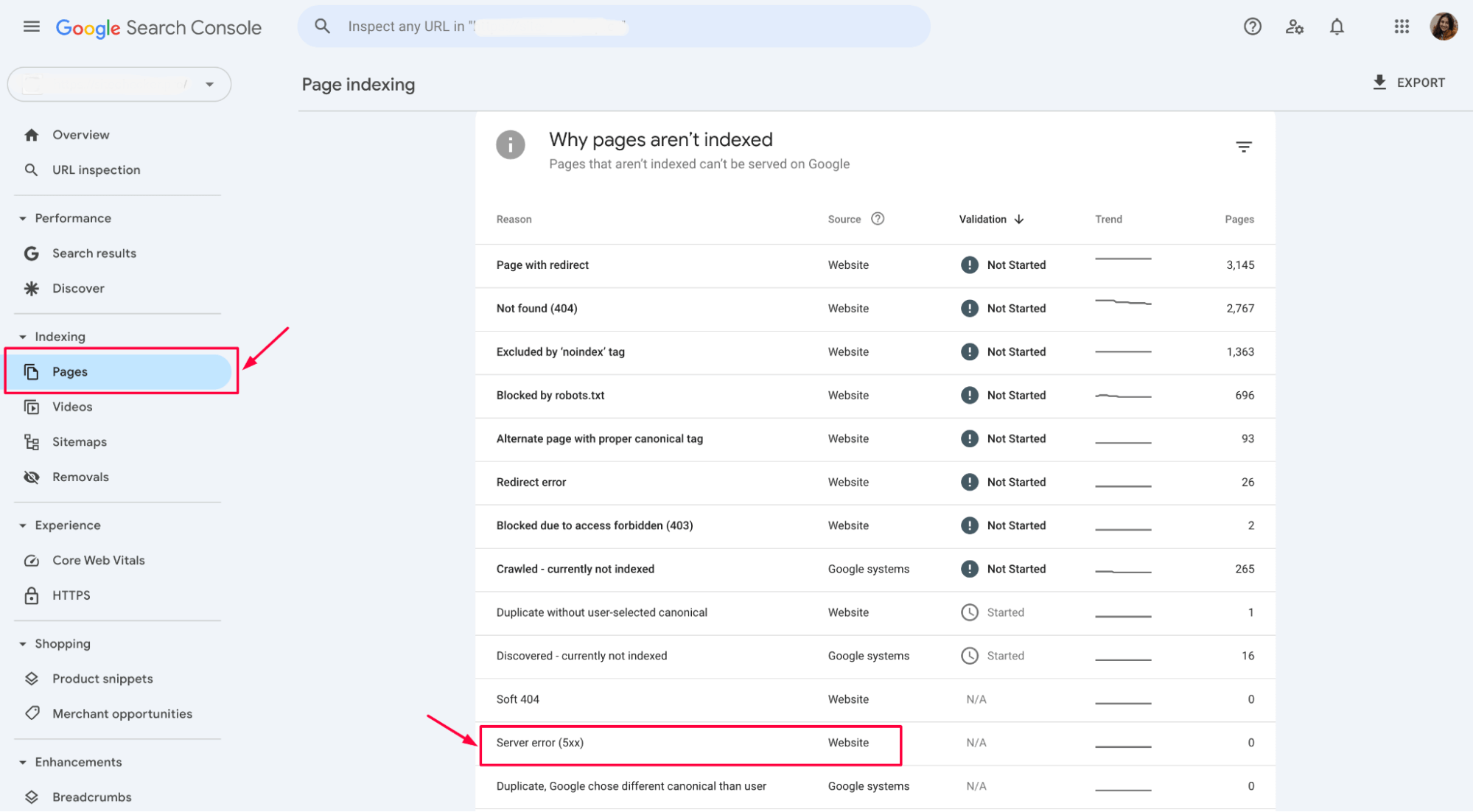

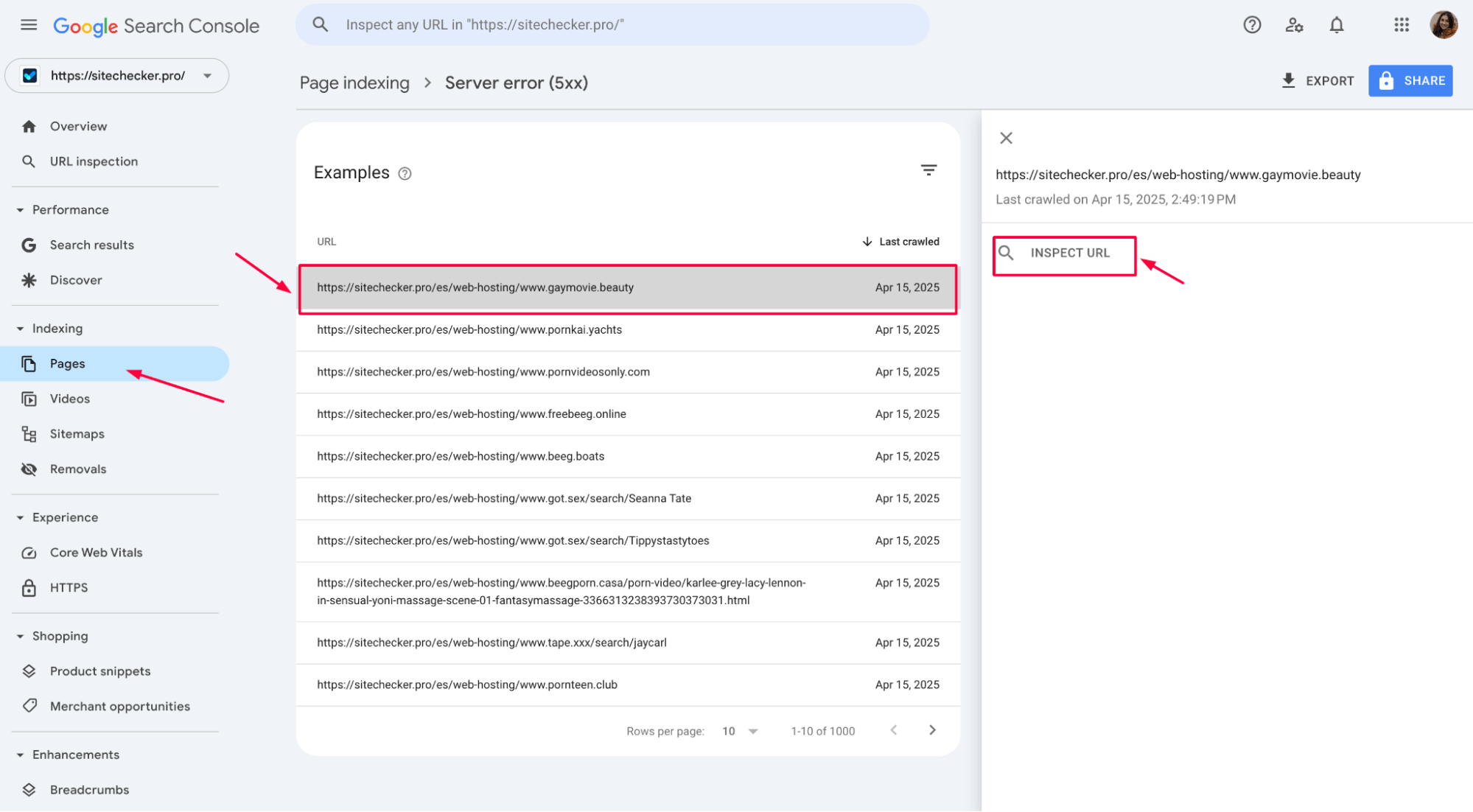

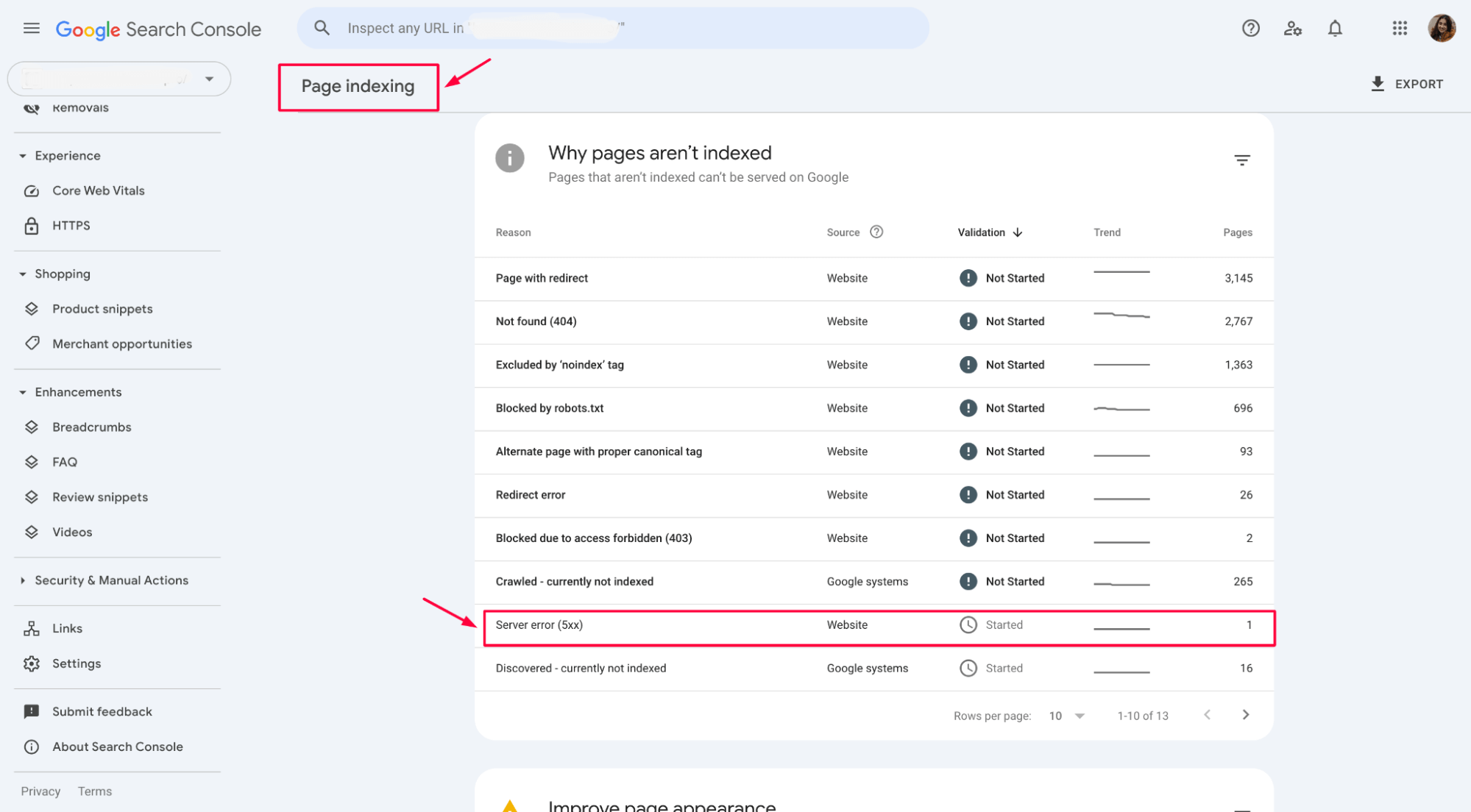

Check the “Pages” report in Google Search Console to see which URLs return 5xx errors.

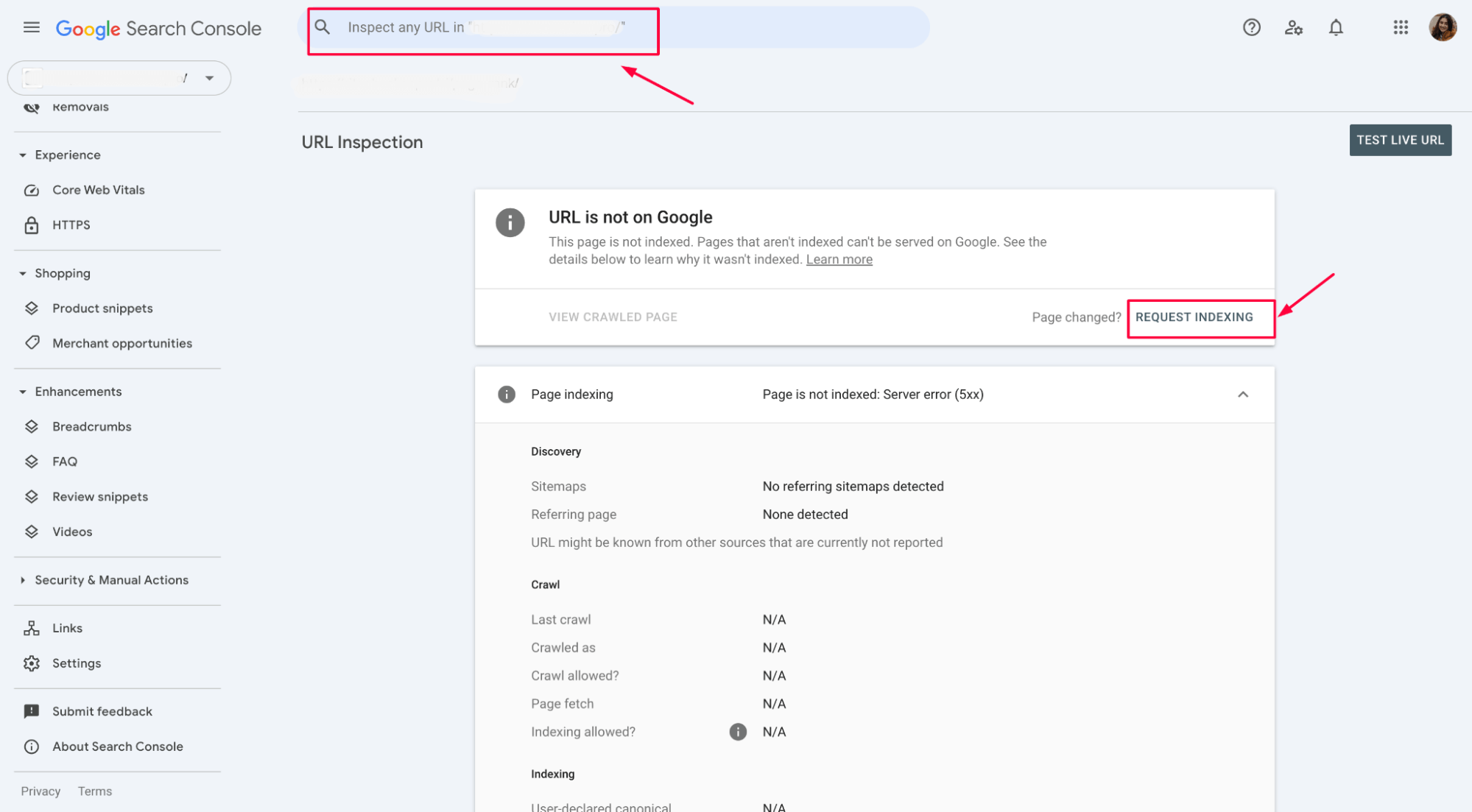

Use the URL Inspection Tool for more details:

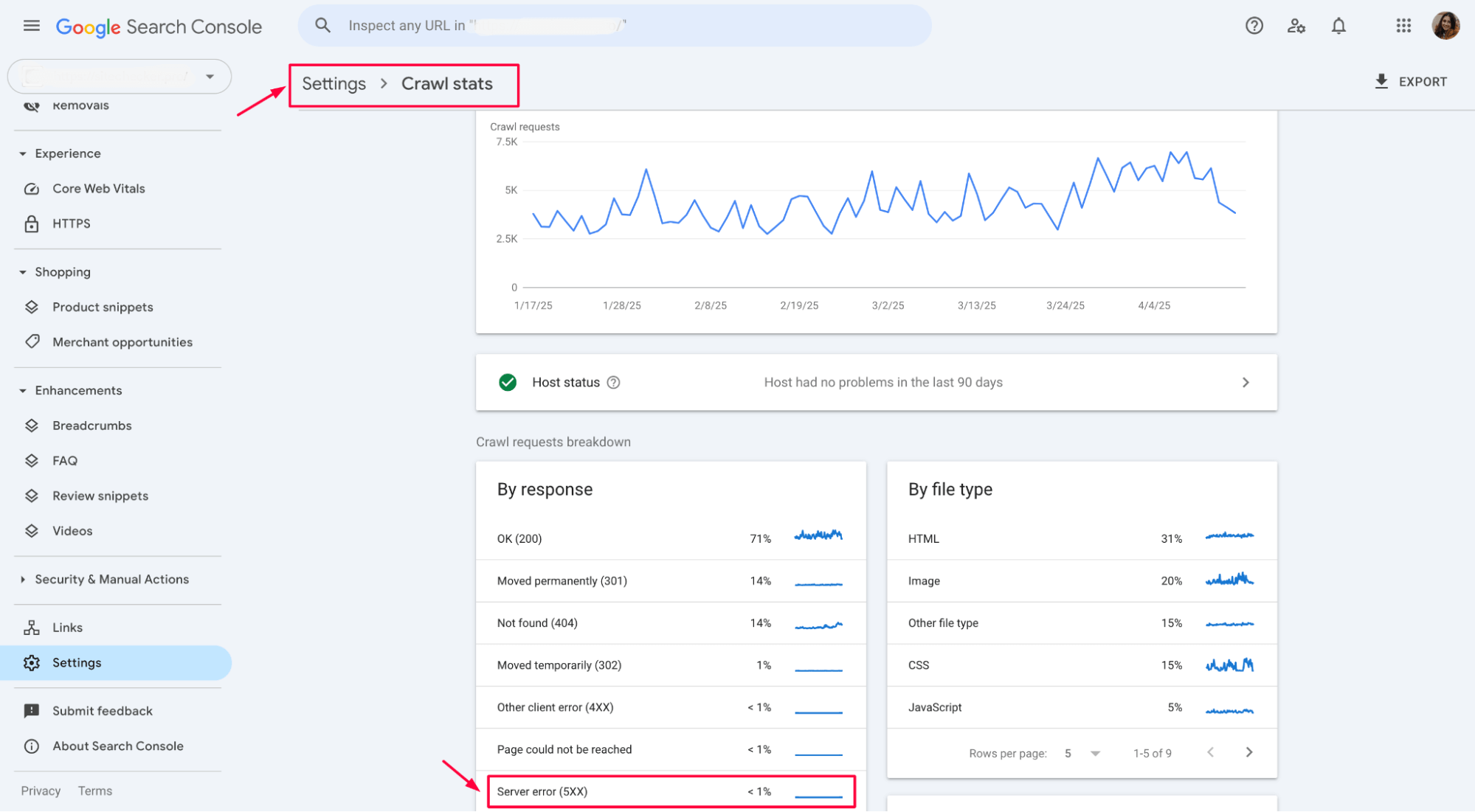

To dig deeper, go to the Crawl Stats report under Settings.

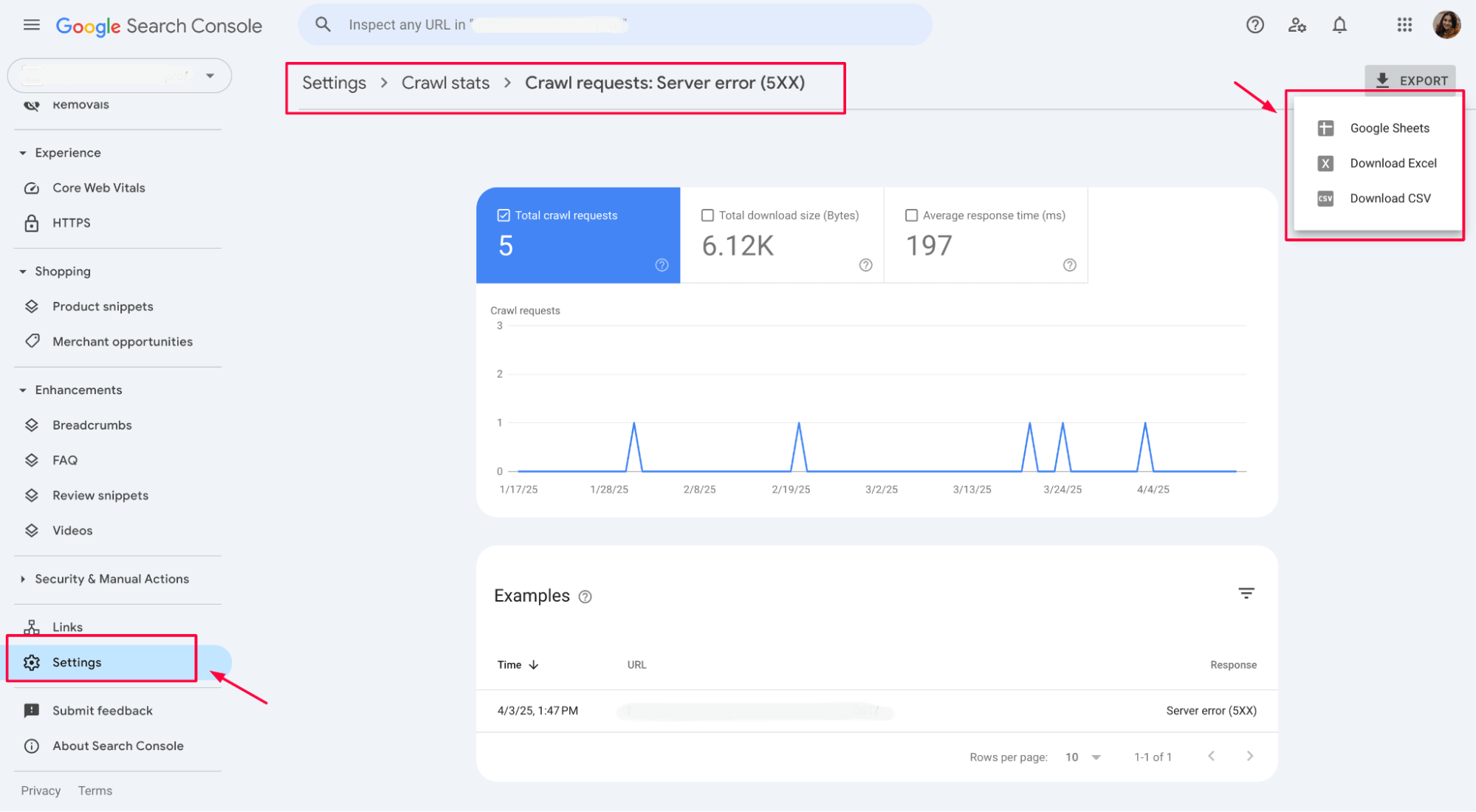

This report shows how often Google hits your site and what response codes it receives. If you notice spikes in 5xx errors or a drop in crawl requests, that’s a strong signal that something’s wrong on the server side.

You can export both lists to organize your troubleshooting workflow and prioritize the most important pages, which helps you quickly spot the URLs where the issue matters most.
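A few lines of Python can speed up that prioritization by showing whether the affected URLs cluster in one section of the site. A cluster points to a shared template, script, or plugin rather than a site-wide outage. This is a minimal sketch that assumes your CSV export has a column named `URL` (check the header row of your own file); the function names are illustrative.

```python
import csv
from collections import Counter
from urllib.parse import urlparse


def load_urls(path: str, column: str = "URL") -> list[str]:
    """Read one column of URLs from a CSV export (the column name is an assumption)."""
    urls = []
    # utf-8-sig tolerates a byte-order mark at the start of exported files
    with open(path, newline="", encoding="utf-8-sig") as f:
        for row in csv.DictReader(f):
            value = (row[column] or "").strip()  # KeyError here means the column is named differently
            if value:
                urls.append(value)
    return urls


def group_by_section(urls: list[str]) -> Counter:
    """Count URLs by first path segment, e.g. https://example.com/blog/post -> '/blog'."""
    sections = Counter()
    for url in urls:
        segments = [s for s in urlparse(url).path.split("/") if s]
        sections["/" + segments[0] if segments else "/"] += 1
    return sections
```

For example, `group_by_section(load_urls("server-errors.csv")).most_common(10)` lists the ten sections with the most failing URLs, where `server-errors.csv` is a hypothetical name for your export.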

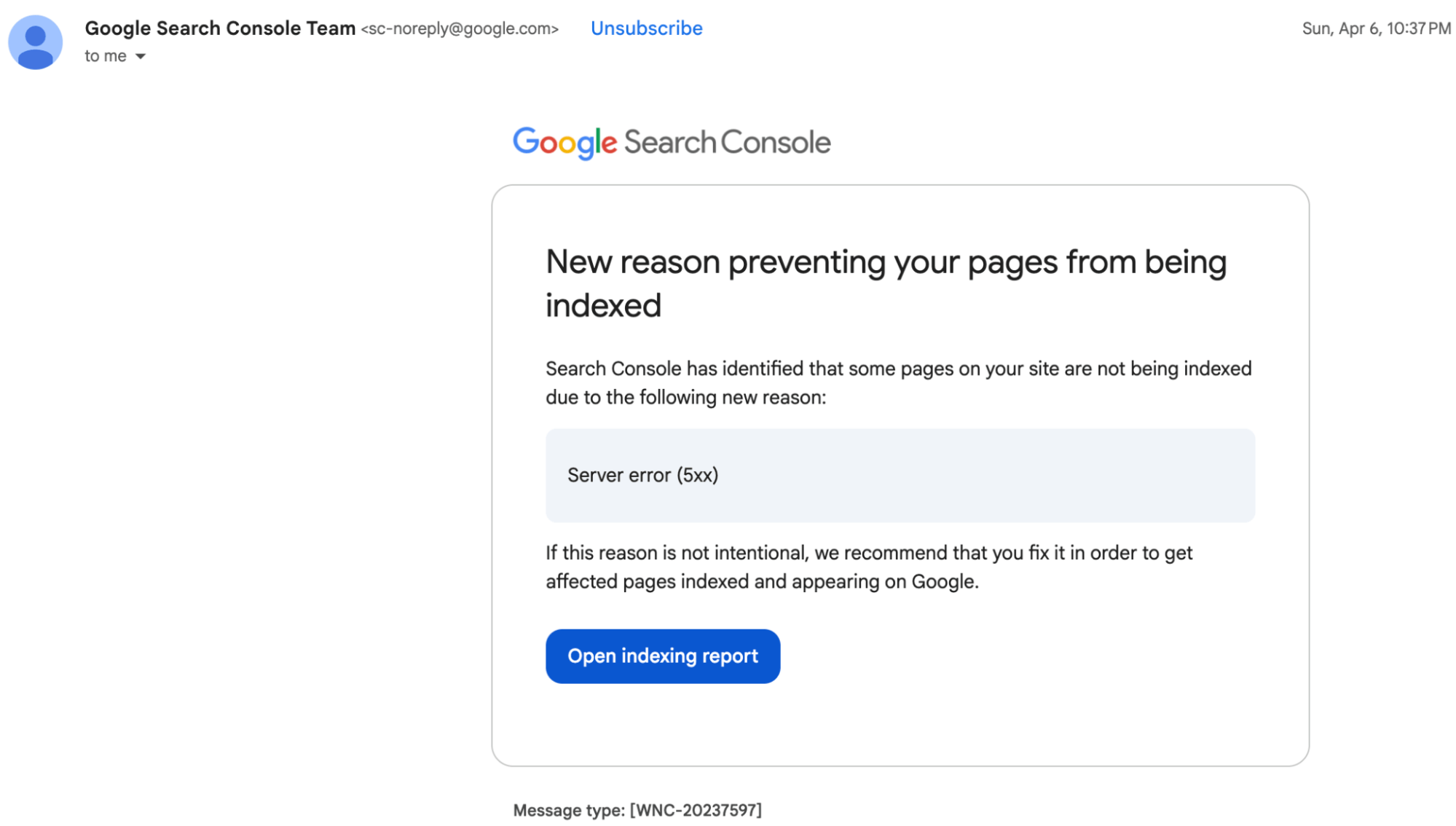

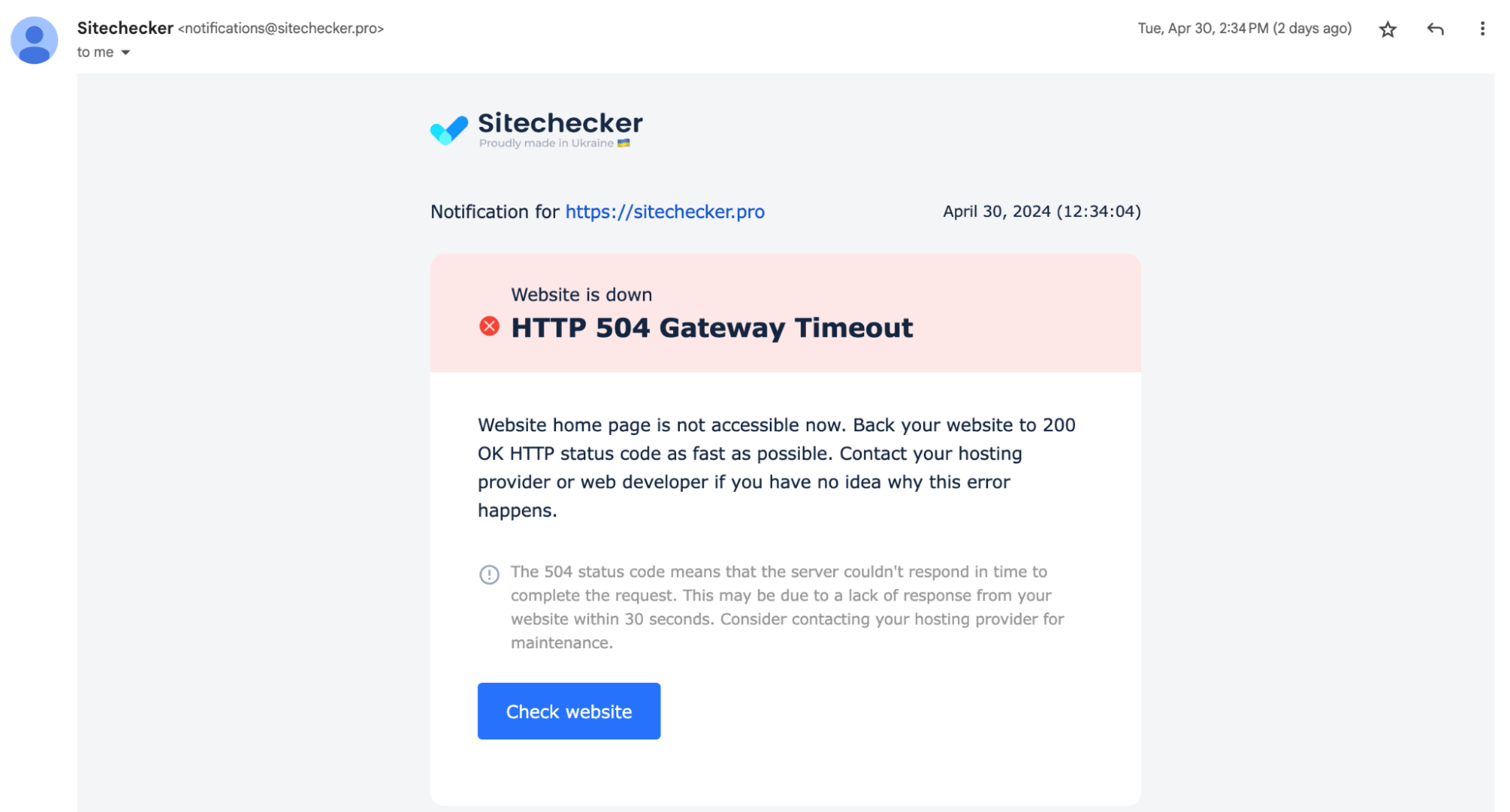

Alternatively, Website Availability Monitoring makes it easy to track the appearance of 5xx errors in real time, receive instant email alerts, and act before your site becomes unavailable.

While Search Console shows the error after Googlebot detects it, Sitechecker provides faster visibility and proactive monitoring, helping you prevent downtime and protect your site’s SEO performance.

Step 2: Review server logs

Open your server logs (typically access.log or error.log) to find out what’s happening behind the scenes when Googlebot hits those URLs. These logs are usually in your server’s /var/log/ directory or accessible via your hosting control panel.

Look for entries that match the URLs flagged in Search Console. A failed request looks roughly like this:

[Date/Time] "GET /example-page HTTP/1.1" 500

In this example, 500 is the server error code. The date and time will help you match the error with a specific event or traffic spike.
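To line errors up with events, you can parse the access log and bucket 5xx responses by hour. The sketch below assumes the default "combined" log format used by Apache and Nginx; a custom `LogFormat` or `log_format` would need a different pattern. It also filters on the user-agent string. Anyone can fake that string, so treat the Googlebot filter as a hint rather than proof.

```python
import re
from collections import Counter
from datetime import datetime

# Default Apache/Nginx "combined" log format:
# IP ident user [time] "request" status bytes "referer" "user-agent"
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) \S+(?: "[^"]*" "(?P<agent>[^"]*)")?'
)


def server_errors(lines, googlebot_only=True):
    """Yield (timestamp, ip, status, request) for every 5xx response."""
    for line in lines:
        m = LOG_LINE.match(line)
        if not m or not m["status"].startswith("5"):
            continue  # unparseable line or non-5xx status
        if googlebot_only and "Googlebot" not in (m["agent"] or ""):
            continue  # user agent can be spoofed; this is only a filter
        ts = datetime.strptime(m["time"], "%d/%b/%Y:%H:%M:%S %z")
        yield ts, m["ip"], int(m["status"]), m["request"]


def errors_per_hour(lines, googlebot_only=True):
    """Count 5xx responses per hour to line them up with deploys or traffic spikes."""
    return Counter(
        ts.strftime("%Y-%m-%d %H:00")
        for ts, *_ in server_errors(lines, googlebot_only)
    )
```

Pass either function any iterable of lines, such as an open file handle for your access log. Then compare the busiest hours from `.most_common()` with your deploy history or traffic graphs.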

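If you’re using Apache, list the requests that returned a 5xx status in the access log (in the default combined log format, the status code is the ninth field):

awk '$9 ~ /^5[0-9][0-9]$/' /var/log/apache2/access.log

For Nginx:

awk '$9 ~ /^5[0-9][0-9]$/' /var/log/nginx/access.log

Then check error.log around the same timestamps to see what the server reported.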

Also, look for timeout errors, memory-limit errors, or permission issues, since these often hint at the root cause. If you see the same error across multiple URLs, that’s your clue: the problem might be site-wide or tied to a particular script or plugin.
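A quick tally of the error log shows which kind of failure dominates. The message fragments below are examples of what Nginx and PHP commonly write, such as "upstream timed out" and "Allowed memory size of N bytes exhausted". Treat them as assumptions to adapt, since wording varies by server, version, and configuration.

```python
import re
from collections import Counter

# Example message fragments from common stacks (Nginx, PHP); adjust for your server.
PATTERNS = {
    "timeout": re.compile(r"upstream timed out|Maximum execution time of \d+ seconds? exceeded", re.I),
    "memory": re.compile(r"Allowed memory size of \d+ bytes exhausted", re.I),
    "permission": re.compile(r"Permission denied", re.I),
}
MEMORY_LIMIT = re.compile(r"Allowed memory size of (\d+) bytes")


def classify(lines):
    """Count error-log lines by probable root cause (first matching pattern wins)."""
    counts = Counter()
    for line in lines:
        for label, pattern in PATTERNS.items():
            if pattern.search(line):
                counts[label] += 1
                break
        else:
            counts["other"] += 1  # no known pattern matched
    return counts


def memory_limit_mb(line):
    """Return the PHP memory limit (in MB) named in an 'Allowed memory size' error, else None."""
    m = MEMORY_LIMIT.search(line)
    return int(m.group(1)) // (1024 * 1024) if m else None
```

For example, a memory error that reports 134217728 bytes means the current limit is 128 MB, which corresponds to `memory_limit = 128M` in php.ini.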

Step 3: Check server health

If your server keeps returning 5xx errors, it may be struggling to keep up. Start by checking your hosting dashboard or a server monitoring tool (like htop, top, or your provider’s metrics panel) for key indicators:

- CPU usage – if it’s close to 100%, your server might be overloaded

- RAM usage – high memory consumption can cause processes to crash

- Disk space – a full disk can block critical operations and logging

- Server uptime – look for unexpected restarts or long downtimes

On a Linux server, run:

top

or

htop

to monitor CPU and memory in real time.
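If you'd rather script a quick snapshot than watch top, Python's standard library can read disk usage and the load average. This sketch is for Unix-like systems only, because `os.getloadavg()` isn't available on Windows. It leaves memory to top or htop, since the standard library has no portable call for it. The warning thresholds are illustrative defaults, not recommendations from any hosting provider.

```python
import os
import shutil


def disk_used_percent(path: str = "/") -> float:
    """Share of the filesystem holding `path` that is in use, as a percentage."""
    usage = shutil.disk_usage(path)
    return 100.0 * usage.used / usage.total


def load_per_cpu() -> float:
    """1-minute load average divided by the number of CPUs (Unix only).

    A value that stays above about 1.0 suggests more runnable work than cores.
    """
    one_minute, _, _ = os.getloadavg()
    return one_minute / (os.cpu_count() or 1)


def health_warnings(disk_limit: float = 90.0, load_limit: float = 1.0, path: str = "/") -> list[str]:
    """Return warnings for values at or above the (illustrative) thresholds."""
    warnings = []
    disk = disk_used_percent(path)
    if disk >= disk_limit:
        warnings.append(f"disk {disk:.0f}% full")
    load = load_per_cpu()
    if load >= load_limit:
        warnings.append(f"load {load:.2f} per CPU")
    return warnings
```

Running `print(health_warnings() or "no warnings")` from a scheduled job gives a rough signal. During an incident, compare it with what top shows.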

If you see consistently high usage or spikes during traffic peaks, it might be time to upgrade your hosting plan or optimize your backend code and database queries. On shared hosting, limited resources can easily trigger 5xx errors, so consider moving to a VPS or cloud server if the issues persist.

Step 4: Fix the root cause

Once you’ve pinpointed the issue, it’s time to fix what’s breaking. The solution depends on what you found in the logs or server metrics:

1. Code bugs. If a specific script causes the error, debug or roll back the change. Use error traces from the logs to find the faulty file or function.

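2. Timeouts. Increase the timeout settings in your server config. For Nginx acting as a reverse proxy, add or adjust the following (if Nginx passes requests to PHP-FPM over FastCGI, the equivalent directive is fastcgi_read_timeout):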

proxy_read_timeout 300;

For Apache:

Timeout 300

3. Memory limits. If PHP scripts are running out of memory, increase the limit in php.ini:

memory_limit = 256M

4. Plugin conflicts. Disable recent CMS plugins or themes to see if one is causing the crash. In WordPress, you can do this via FTP by renaming the plugin folder.

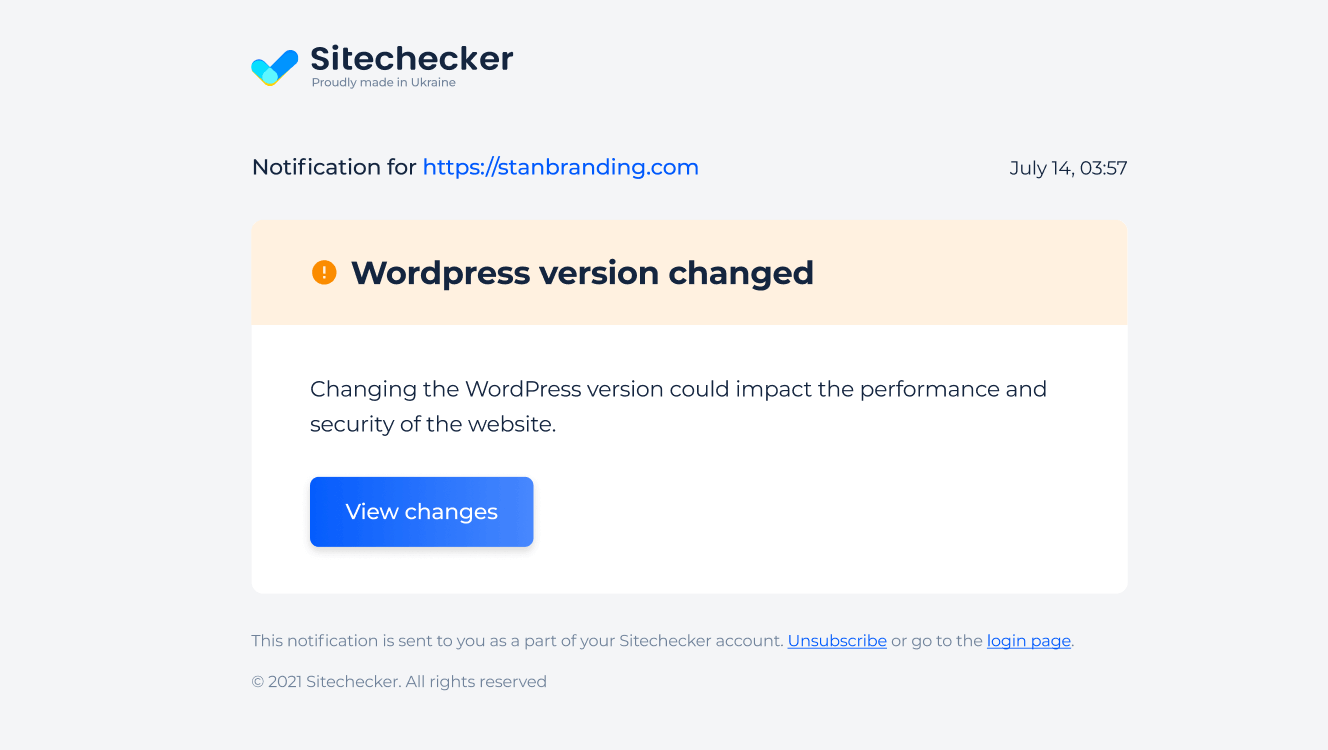

Theme and plugin updates can unexpectedly break parts of your site or trigger server errors. The WordPress Site Monitoring tool alerts you when design elements break or pages stop responding after an update. It’s a simple way to avoid issues caused by plugin conflicts or layout shifts – before they impact your users or rankings.

5. Firewall or bot-blocking rules. Make sure your security setup isn’t mistakenly blocking Googlebot. Check .htaccess, robots.txt, or firewall logs.

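Sometimes, security settings mistakenly block Googlebot, and some of these blocks surface as server errors: Nginx’s limit_req rate limiting, for example, rejects excess requests with a 503 by default. Check your .htaccess and robots.txt files for rules that might deny access.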

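Here’s the kind of rule you don’t want in .htaccess (it denies every request whose user agent contains “Googlebot”):

SetEnvIfNoCase User-Agent "Googlebot" block_bot
Deny from env=block_bot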

Or something like this in robots.txt:

User-agent: Googlebot
Disallow: /

Instead, allow Googlebot by default (an empty Disallow value permits crawling of the whole site):

User-agent: Googlebot
Disallow:

If you’re using a security plugin or service (like Wordfence or Cloudflare), review its logs and bot-blocking settings. Make sure Google’s IPs aren’t being blocked or rate-limited. You can also test access with the URL Inspection Tool in Search Console: if it says “Page cannot be indexed due to server error,” a blocked bot could be the cause.
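Both checks in this step can be scripted. The sketch below uses Python's built-in `urllib.robotparser` to test whether a robots.txt file lets Googlebot fetch a URL. It also checks whether an IP address from your firewall or access logs really belongs to Googlebot. It does this with a reverse DNS lookup followed by a forward lookup, the verification method Google documents for its crawlers. Two caveats apply. `robotparser` follows the original robots.txt rules and does not understand Google's `*` and `$` path wildcards. The domain list mirrors the crawler domains Google documents, so check that page if results look wrong.

```python
import ipaddress
import socket
from urllib import robotparser

# Crawler domains listed in Google's Googlebot verification guide.
GOOGLE_DOMAINS = (".googlebot.com", ".google.com", ".googleusercontent.com")


def googlebot_allowed(robots_txt: str, url: str) -> bool:
    """True if the given robots.txt text lets Googlebot crawl `url`."""
    parser = robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("Googlebot", url)


def is_google_hostname(hostname: str) -> bool:
    """Check that a reverse-DNS name ends in one of Google's crawler domains."""
    return hostname.rstrip(".").lower().endswith(GOOGLE_DOMAINS)


def is_real_googlebot(ip: str) -> bool:
    """Reverse DNS, then domain check, then forward DNS must return the same IP."""
    try:
        hostname, _, _ = socket.gethostbyaddr(ip)
        if not is_google_hostname(hostname):
            return False
        forward = {
            ipaddress.ip_address(info[4][0])
            for info in socket.getaddrinfo(hostname, None)
        }
        return ipaddress.ip_address(ip) in forward
    except (OSError, ValueError):
        # Failed lookups and malformed IPs are treated as "not verified".
        return False
```

Call `is_real_googlebot()` with IPs taken from your logs. An address that only sends a Googlebot user agent, without resolving to one of Google's crawler domains, fails the check.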

Always test fixes on a staging environment first, especially for live websites. Once you’re confident they’re stable, deploy them to production.

Step 5: Test the fix

Once you’ve made your changes, it’s time to verify that they worked. The quickest way to do this is to use tools that check HTTP status codes.

Start with the HTTP Status Code Checker. Simply enter your URL, and it will return the current status – whether it now shows 200 OK (success) or throws a 5xx error. This tool is invaluable for spot-checking specific pages after you’ve deployed a fix.

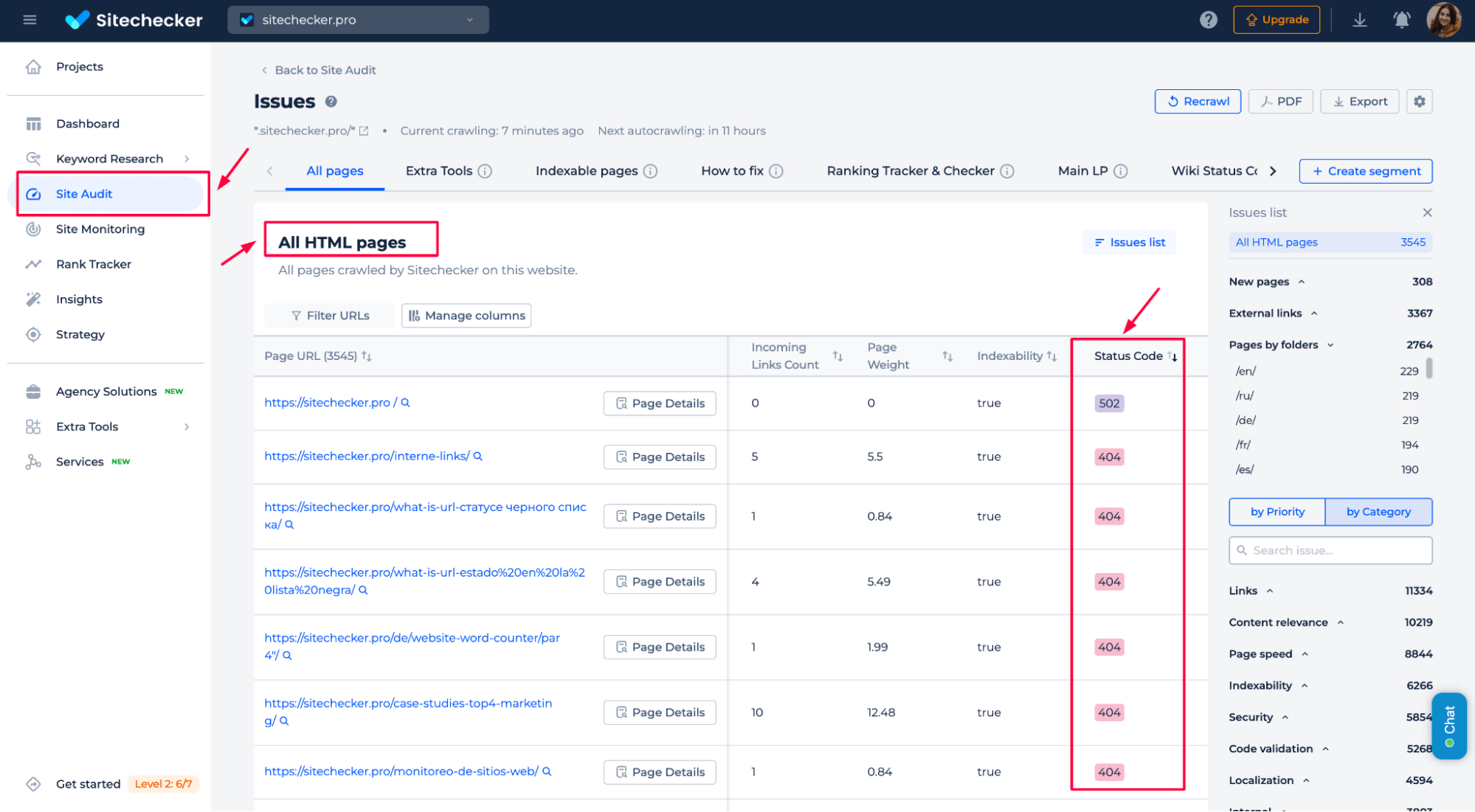

You can also use Sitechecker’s Site Audit feature to monitor the list of affected URLs. Head to the “All HTML pages” tab and sort by Status Code to see if the 5xx entries have cleared.

You’re good if the status has changed to 200 or a proper redirect (301/302). If it still shows 5xx, you may need to dig deeper or wait for your server cache to reset.
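To spot-check a batch of URLs from a script, you can request each one and read the raw status code. This minimal Python sketch disables redirect following so that a 301 or 302 is reported as such, instead of the final page's 200. Keep in mind that some firewalls treat Python's default user agent differently from Googlebot, so a clean result here is a strong hint, not a guarantee.

```python
import urllib.error
import urllib.request


class _NoRedirect(urllib.request.HTTPRedirectHandler):
    """Stop urllib from following redirects so 301/302 are reported as-is."""

    def redirect_request(self, req, fp, code, msg, headers, newurl):
        return None


_OPENER = urllib.request.build_opener(_NoRedirect)


def status_code(url: str, timeout: float = 10.0) -> int:
    """Return the raw HTTP status for `url`; DNS and connection errors propagate."""
    try:
        with _OPENER.open(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:  # unfollowed 3xx, 4xx and 5xx land here
        err.close()
        return err.code


def verdict(code: int) -> str:
    """Apply the rule above: 200 or a 301/302 redirect is fine, 5xx still needs work."""
    if code == 200 or code in (301, 302):
        return "fixed"
    if 500 <= code <= 599:
        return "still failing"
    return "check manually"
```

For example, `for url in urls: print(url, verdict(status_code(url)))`, where `urls` is your list of previously failing pages.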

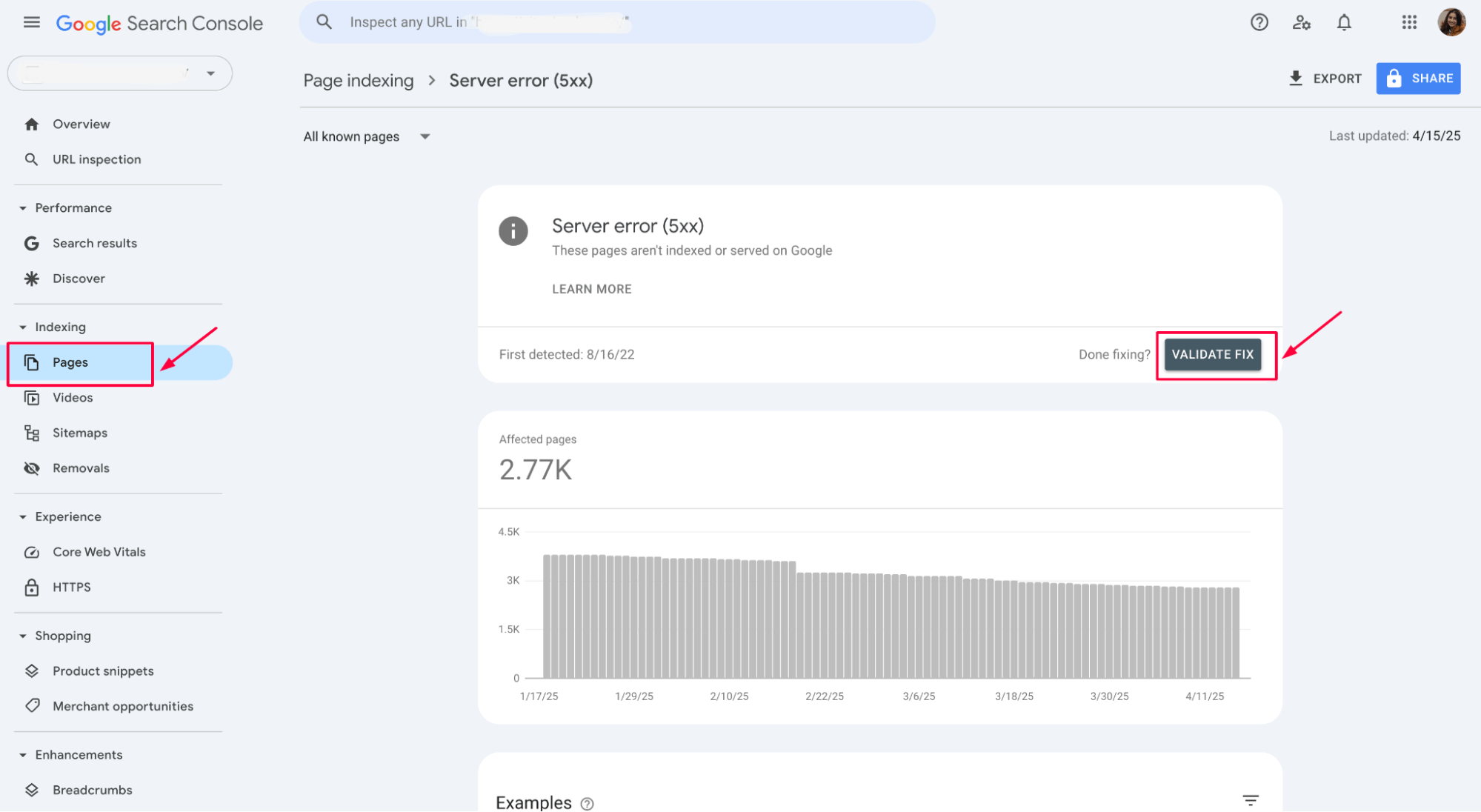

Step 6: Validate in Google Search Console

After confirming that your pages are accessible and no longer return 5xx errors, head back to GSC to notify Google. In the Pages report under Indexing, open the issue labeled “Server error (5xx)” and click “Validate Fix”.

This prompts Google to recrawl the affected URLs and recheck their status. If everything works correctly, the validation status will eventually change to “Passed”. Depending on your site’s size and crawl frequency, this can take anywhere from a few days to around two weeks, sometimes longer.

Until validation is complete, monitor the URLs to ensure the problem doesn’t return. If it does, Google will stop the validation and flag the issue again.

Step 7: Request reindexing

If the error affected key pages, use the URL Inspection Tool to request indexing so Google can re-crawl them faster.

Here’s a quick video from Google Search Console Training that shows how to use the URL Inspection Tool to request indexing after fixing a 5xx error.

Conclusion

5xx errors in Google Search Console signal server-side issues that block Googlebot from accessing your pages. They hurt indexing, crawl budget, and SEO performance. To fix them, identify affected URLs, check logs, assess server health, and resolve the root cause – whether it’s a code bug, plugin conflict, or firewall misconfiguration.

Use tools like Sitechecker and Google’s URL Inspection Tool to test fixes, validate in Search Console, and request reindexing. Regular monitoring can help you catch future issues early and protect your rankings.