What does the “Couldn’t fetch sitemap” error in Google Search Console mean?

The “Couldn’t fetch sitemap” error in Google Search Console means Google could not retrieve or process the sitemap you submitted. Until the problem is fixed, Google cannot use that sitemap to discover your URLs, which can slow down indexing and hurt SEO.

This error can appear in different forms, including:

- “Sitemap could not be read” – Googlebot encountered parsing errors or issues with the file.

- “Sitemap couldn’t fetch” – The sitemap URL is inaccessible or returns an invalid response.

- “Google Search Console can’t read my sitemap” – There may be formatting issues or permission restrictions.

Common causes include incorrect sitemap URLs, server errors, misconfigured robots.txt settings, or slow server response times. If left unresolved, this can limit Google’s ability to discover and index your pages efficiently. In the next sections, we’ll explore the most frequent reasons behind this error and how to fix them effectively.


Common causes of the “Couldn’t fetch sitemap” error

The “Couldn’t Fetch Sitemap” error in Google Search Console can stem from a variety of technical issues, preventing Googlebot from accessing and processing your sitemap correctly. Identifying the root cause is crucial for resolving the error and properly indexing your website.

Some of the most common reasons for this error include:

| Cause | Description |
| --- | --- |
| Incorrect Sitemap URL | The submitted sitemap link may be incorrect, outdated, or inaccessible. |
| Sitemap Format Issues | The sitemap file may contain syntax errors, incorrect XML structure, or unsupported encoding. |
| Server Response Errors (404, 403, 500, 503) | Issues with the website’s hosting, permissions, or downtime can block Googlebot from fetching the sitemap. |
| Slow Server Response Time | If your server is slow or experiencing high load, Google may time out when trying to fetch the sitemap. |
| Blocked by robots.txt | Incorrect directives in your robots.txt file may prevent Googlebot from accessing the sitemap. |
| Sitemap Too Large | Google limits each sitemap to 50 MB (uncompressed) and 50,000 URLs; exceeding either limit may cause fetch failures. |
| CMS or Plugin Misconfiguration | If using WordPress or other CMS platforms, incorrect settings in SEO plugins like Yoast SEO or RankMath can prevent proper sitemap generation. |
| Incorrect Sitemap Path | The sitemap should normally sit in the root folder of your site (for WordPress, the root of the installation). An incorrect sitemap path can make it inaccessible to Googlebot. Ensure the sitemap URL follows the correct structure, such as https://yourwebsite.com/sitemap.xml. |

Each of these issues requires specific troubleshooting steps, which we will cover in detail in the following sections to help you resolve the error efficiently.

Advanced troubleshooting

If you’ve tried the basic fixes and Google Search Console still can’t fetch your sitemap, it’s time for advanced troubleshooting. The updated Google Search Console offers detailed diagnostics to help troubleshoot sitemap errors. By reviewing error logs and server response codes, you can determine if the issue is due to Google’s processing or a problem with your sitemap itself.

The following steps will help diagnose deeper technical issues and ensure that Googlebot can properly access and process your sitemap. Sometimes, the error may indicate a pending status where Google has not yet processed the sitemap.

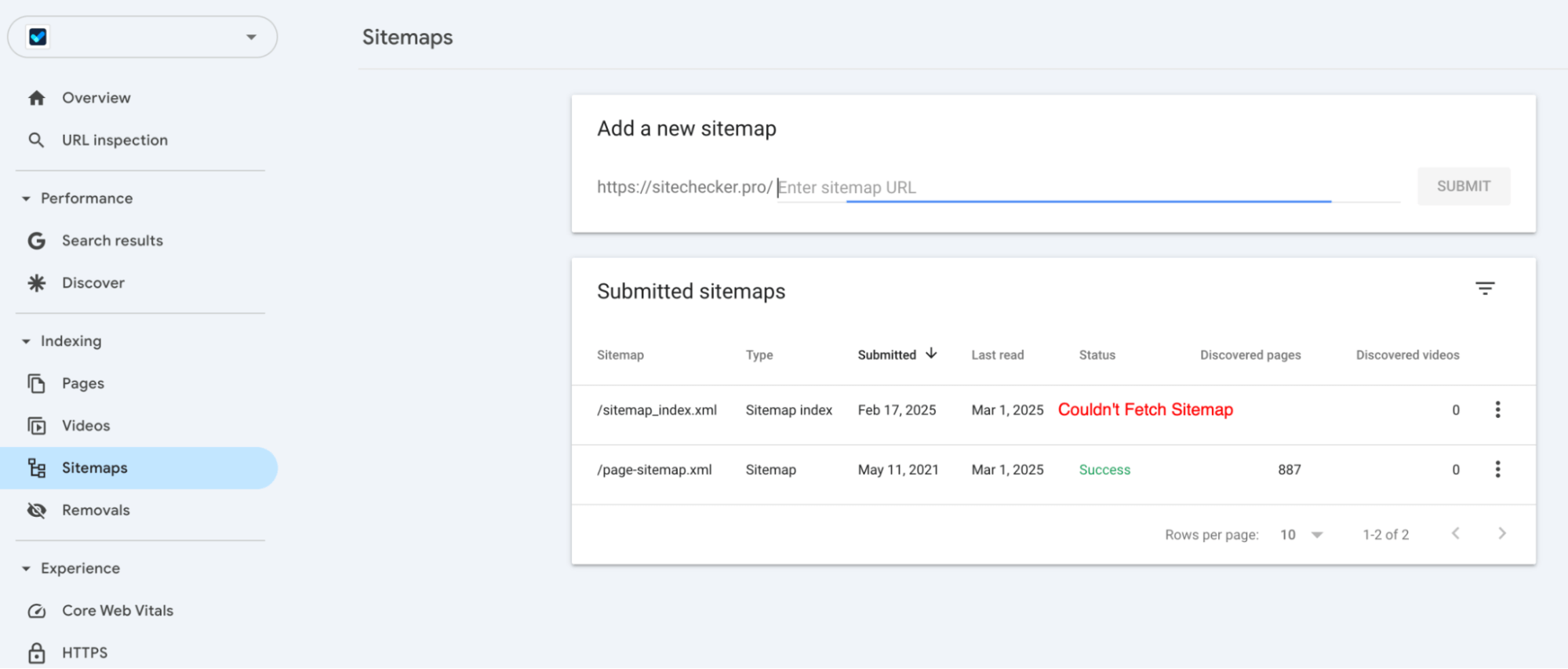

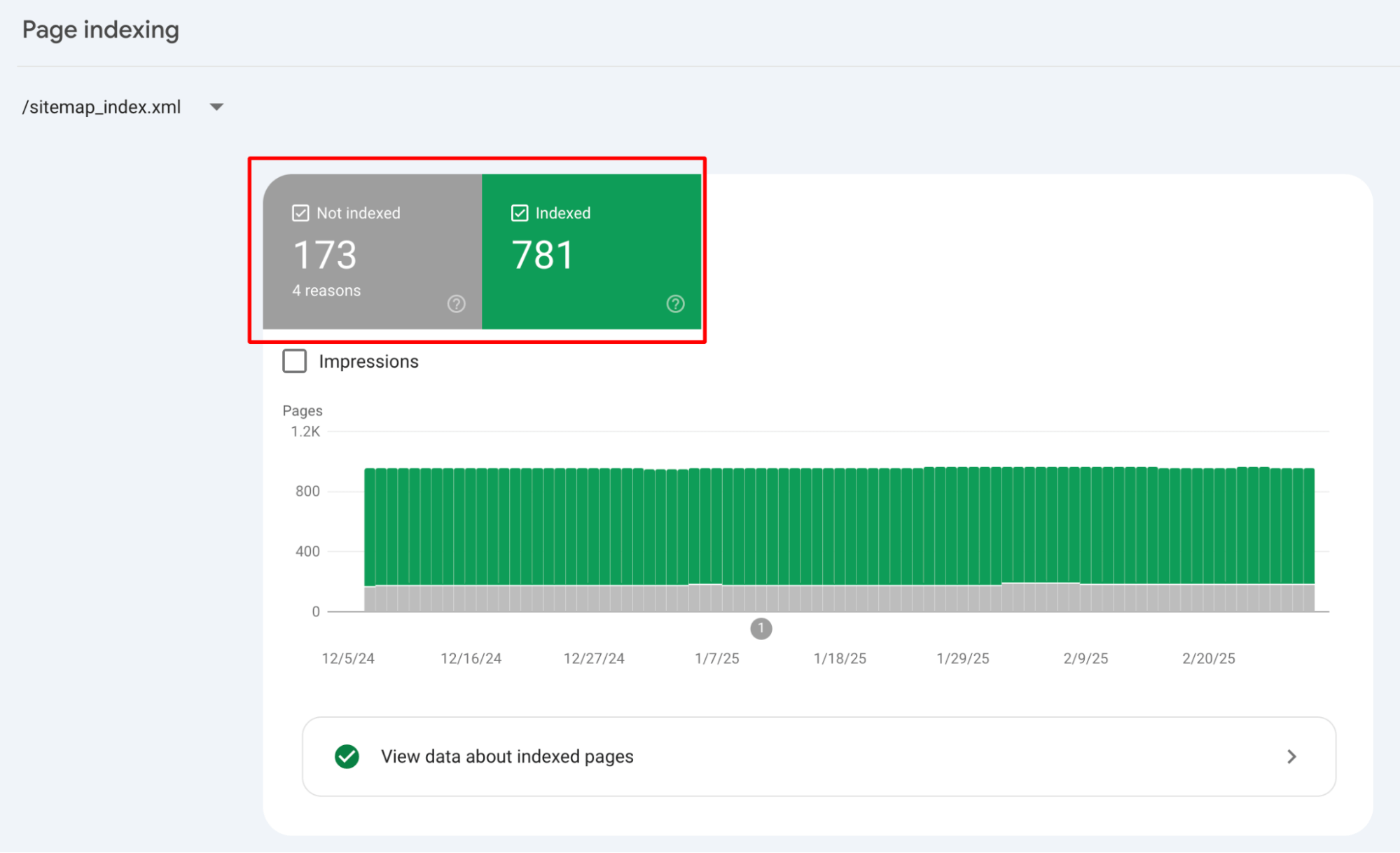

1. Check crawl errors in Google Search Console

Go to Google Search Console, navigate to Indexing > Sitemaps, and check for any error messages. Look for warnings such as “Couldn’t fetch,” “Sitemap could not be read,” or “Submitted URL not found (404).” Click on the error details to view additional insights and suggested fixes.

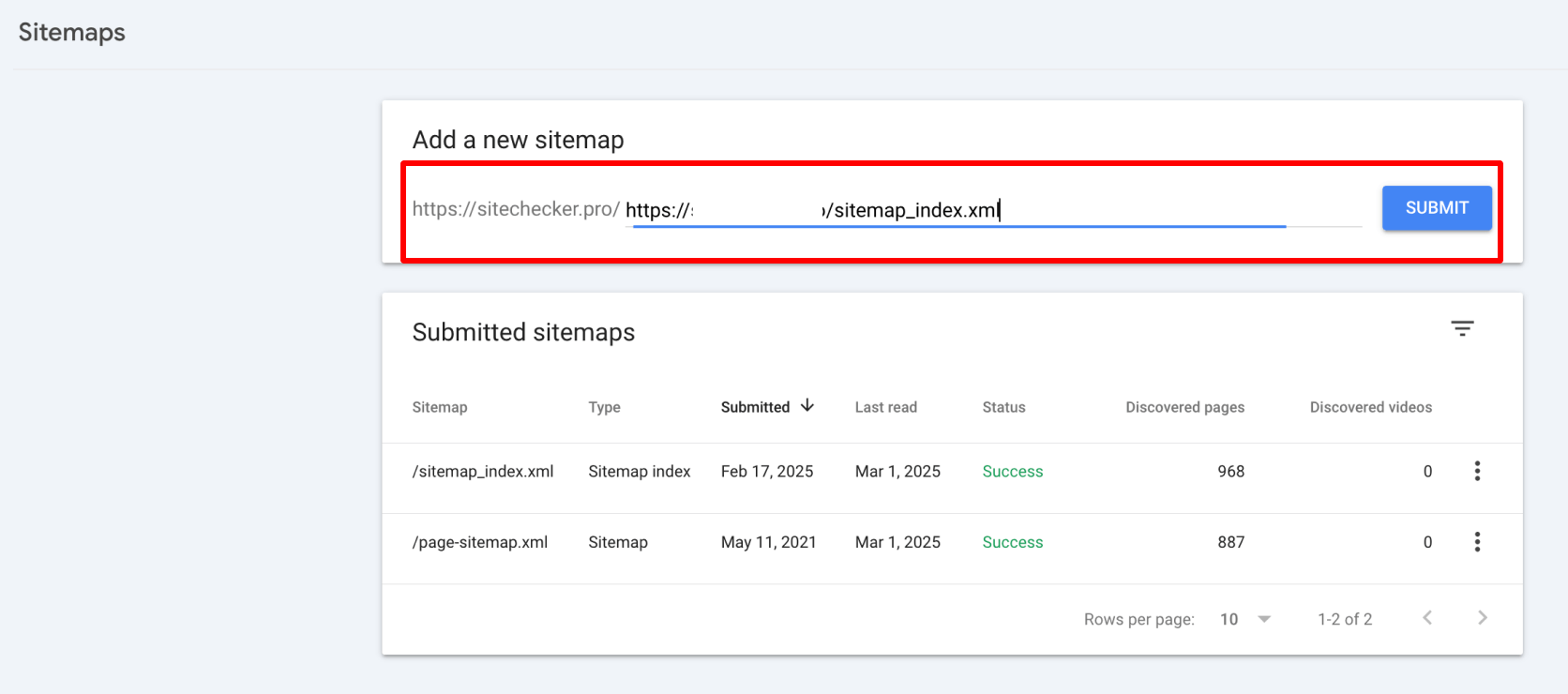

To ensure proper submission, enter the correct sitemap URL in the “Add a new sitemap” field. This helps prevent common issues like the “Couldn’t fetch” error and improves sitemap processing.

Review crawl logs to identify any additional issues that may not be immediately visible in the Google Search Console.

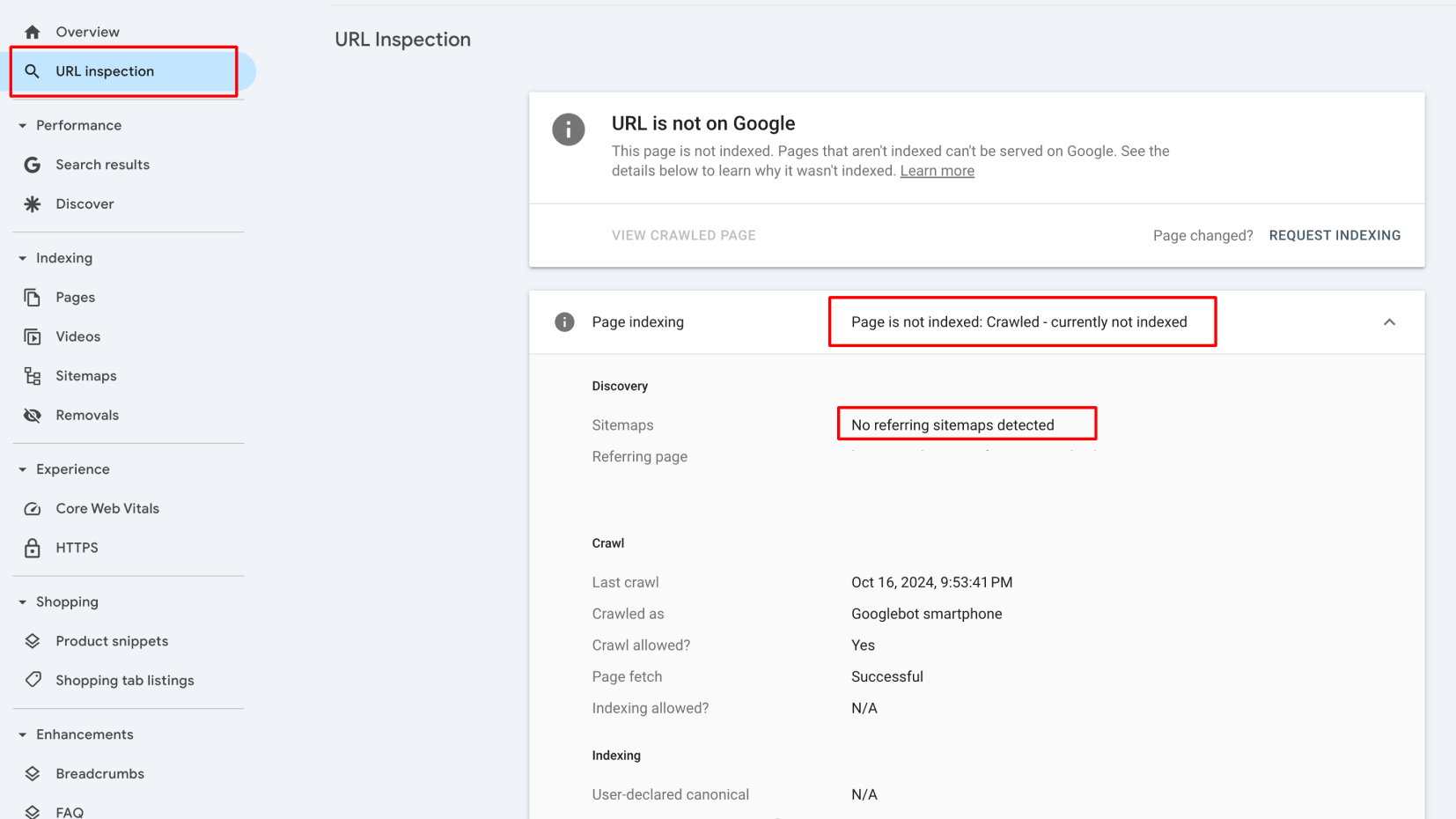

2. Use Google’s URL Inspection Tool

Open Google Search Console > URL Inspection Tool.

Enter your sitemap URL and check for accessibility errors.

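Check whether Googlebot is able to access the file. If it is blocked, check your robots.txt, server response, and sitemap format. For example, a robots.txt file containing the following rule blocks every crawler from the sitemap: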

    User-agent: *
    Disallow: /sitemap.xml

To allow search engines to access the sitemap, remove the disallow rule and explicitly specify the sitemap location:

    User-agent: *
    Allow: /sitemap.xml
    Sitemap: https://yourwebsite.com/sitemap.xml
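The effect of the two rule sets above can be checked locally before waiting for Google to re-crawl. The following is a minimal sketch using Python's standard `urllib.robotparser`; the helper name `sitemap_allowed` is made up for this illustration, and the parser applies rules in file order, so it only approximates how Googlebot evaluates more complex robots.txt files.

```python
from urllib.robotparser import RobotFileParser


def sitemap_allowed(robots_txt: str, sitemap_url: str, agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt text lets `agent` fetch `sitemap_url`."""
    parser = RobotFileParser()
    # parse() accepts an iterable of lines, so no network access is needed.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, sitemap_url)


# The blocking rule and the corrected rules from the article.
blocking_rules = "User-agent: *\nDisallow: /sitemap.xml\n"
fixed_rules = (
    "User-agent: *\n"
    "Allow: /sitemap.xml\n"
    "Sitemap: https://yourwebsite.com/sitemap.xml\n"
)

url = "https://yourwebsite.com/sitemap.xml"
print(sitemap_allowed(blocking_rules, url))  # False
print(sitemap_allowed(fixed_rules, url))     # True
```

To test a live site, the contents of its robots.txt file can be pasted into a string and passed to the same function.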

If the inspection reports a problem such as ‘Indexing request rejected’, fix the underlying cause first, then request indexing again in Google Search Console. Also validate the sitemap’s content and check for plugin conflicts that could prevent Google from accessing the site.

3. Test sitemap accessibility manually

- Open your sitemap URL in a browser (e.g., https://yourwebsite.com/sitemap.xml).

- If the page returns a 404 (Not Found) or 403 (Forbidden) error, Googlebot won’t be able to fetch it.

- If you see an XML file but still get the error, verify its format and structure using the W3C Validator.
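The manual browser check above can also be scripted. This is a rough sketch using only the Python standard library; the function names and the `sitemap-check/0.1` user agent string are assumptions for illustration. A successful response here does not prove that Googlebot gets the same answer, because firewalls and bot-protection rules may treat crawlers differently.

```python
import urllib.error
import urllib.request


def describe_status(status: int) -> str:
    """Map an HTTP status code to a short hint about what it means for the sitemap."""
    if status == 200:
        return "OK: the sitemap is reachable"
    if status == 403:
        return "Forbidden: check file permissions, firewall, or bot-blocking rules"
    if status == 404:
        return "Not found: check the sitemap URL and path"
    if 500 <= status < 600:
        return "Server error: check hosting, server load, or downtime"
    return f"Unexpected status {status}: inspect the response manually"


def check_sitemap(url: str, timeout: float = 10.0) -> str:
    """Request the URL and describe the outcome, including 4xx/5xx responses."""
    request = urllib.request.Request(url, headers={"User-Agent": "sitemap-check/0.1"})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return describe_status(response.status)
    except urllib.error.HTTPError as error:
        # urlopen raises on 4xx/5xx; the status code is still on the exception.
        return describe_status(error.code)
    except OSError as error:
        # Covers DNS failures, refused connections, and timeouts.
        return f"Could not connect: {error}"
```

Calling `check_sitemap("https://yourwebsite.com/sitemap.xml")` with your real sitemap URL returns one of the hints above.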

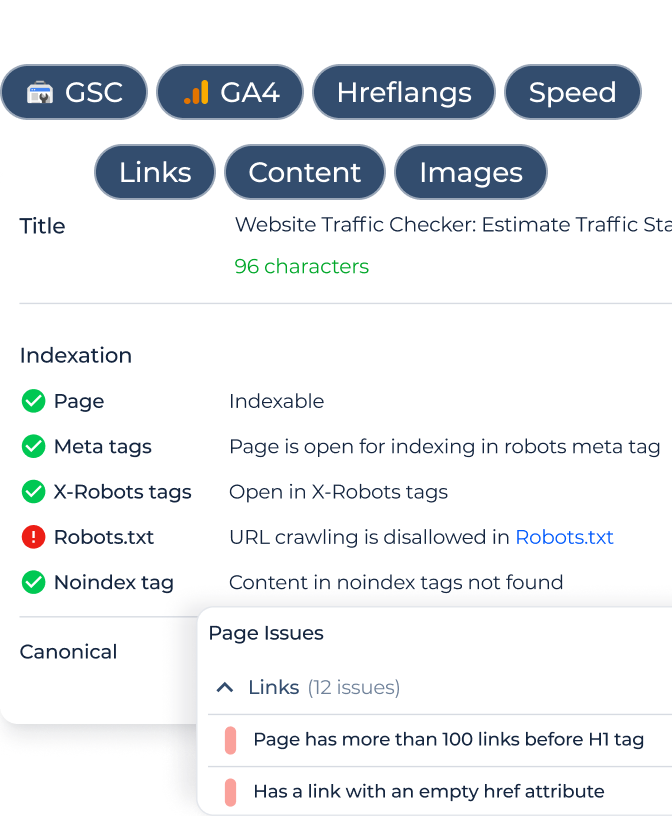

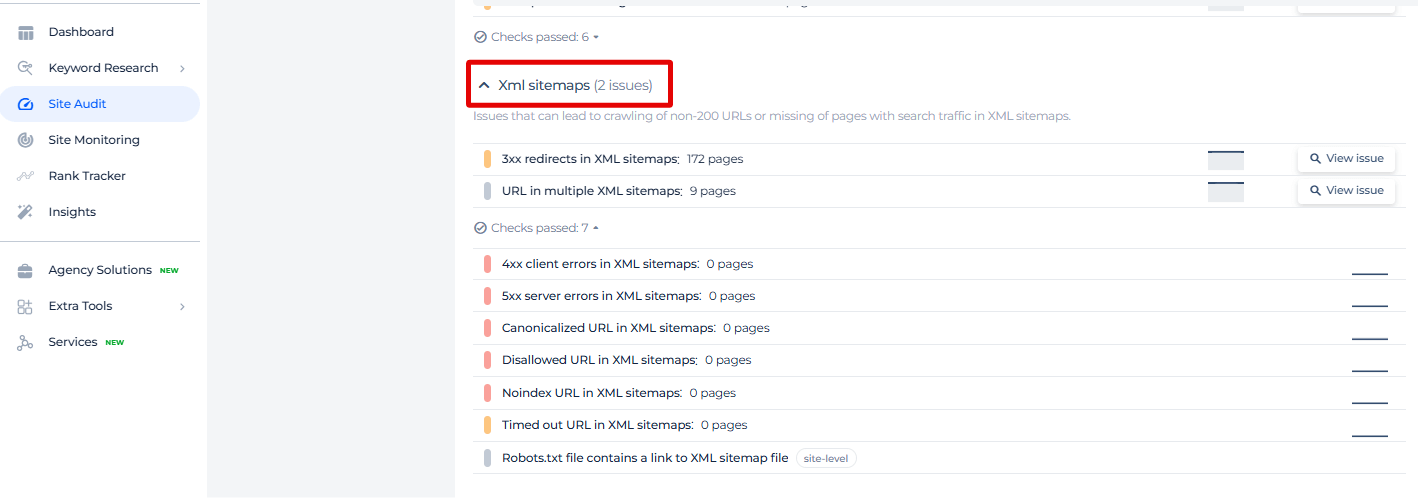

4. Diagnose sitemap issues with Sitechecker

Sitechecker’s XML Sitemap Checker can scan for invalid URLs, duplicate entries, and missing pages.


5. Review server logs for Googlebot activity

To check if Googlebot has tried to fetch your sitemap, review your server access logs. Look for entries where the user agent is Googlebot and see whether the request was successful, indicated by a 200 OK status, or if it was blocked. If you don’t see any requests from Googlebot, try re-submitting your sitemap in Google Search Console to prompt a new crawl.
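As an illustration of that log review, the sketch below counts the status codes returned to requests for the sitemap whose user agent mentions Googlebot. It assumes the common "combined" access log format and a sitemap at `/sitemap.xml`; the sample log lines are invented for the example. User agent strings can be spoofed, so a reverse DNS check on the IP address is needed to confirm that a hit really came from Google.

```python
import re
from collections import Counter

# Extracts the request path, status code, and user agent from a combined-format log line.
LOG_PATTERN = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) .*"(?P<agent>[^"]*)"$'
)


def googlebot_sitemap_hits(lines, sitemap_path="/sitemap.xml"):
    """Count status codes for sitemap requests whose user agent mentions Googlebot."""
    statuses = Counter()
    for line in lines:
        match = LOG_PATTERN.search(line.strip())
        if not match:
            continue  # skip lines that are not in the expected format
        if match["path"] == sitemap_path and "Googlebot" in match["agent"]:
            statuses[int(match["status"])] += 1
    return statuses


# Hypothetical log lines, for illustration only.
sample_log = [
    '66.249.66.1 - - [10/Mar/2025:06:25:14 +0000] "GET /sitemap.xml HTTP/1.1" 200 5120 '
    '"-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [10/Mar/2025:07:02:41 +0000] "GET /sitemap.xml HTTP/1.1" 403 199 '
    '"-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.9 - - [10/Mar/2025:07:05:00 +0000] "GET /sitemap.xml HTTP/1.1" 200 5120 '
    '"-" "Mozilla/5.0 (X11; Linux x86_64)"',
]

print(dict(googlebot_sitemap_hits(sample_log)))  # {200: 1, 403: 1}
```

An empty result means no matching Googlebot request was logged, which is the case where re-submitting the sitemap is worth trying.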

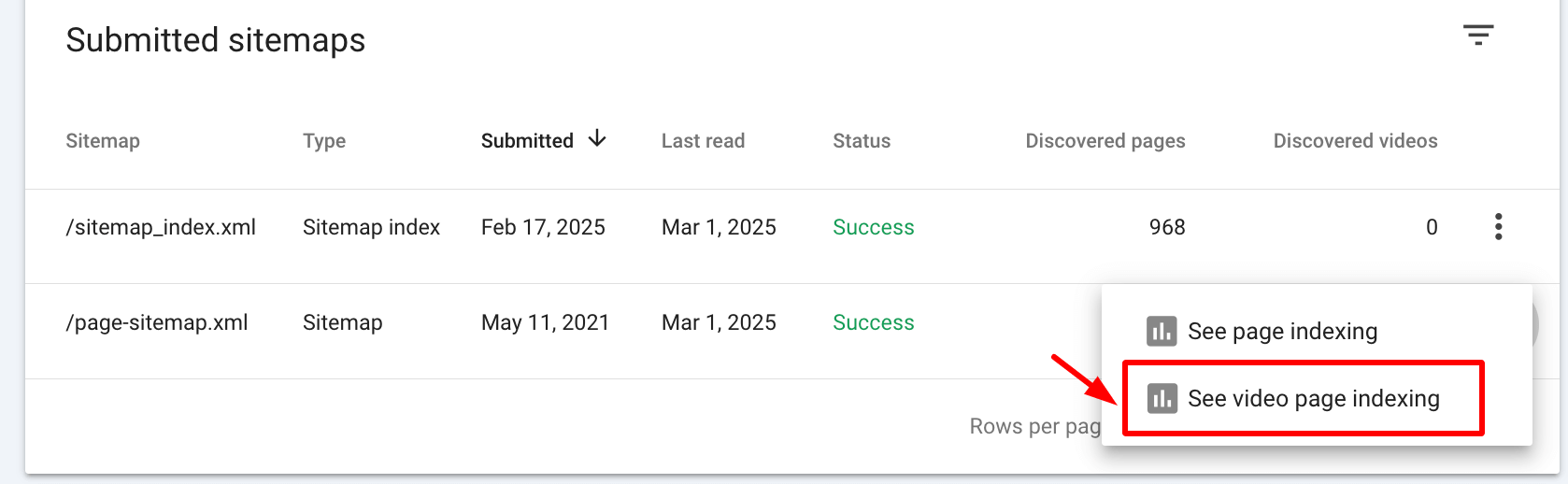

6. Optimize large sitemaps for better crawling

If your sitemap is larger than 50 MB (uncompressed) or contains more than 50,000 URLs, split it into smaller sitemaps to ensure proper processing. Use a sitemap index file (sitemap_index.xml) to organize and reference all sub-sitemaps, making it easier for Google to find and crawl your content efficiently.

    <?xml version="1.0" encoding="UTF-8"?>
    <sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
      <sitemap>
        <loc>http://example.com/sitemap1.xml</loc>
      </sitemap>
      <sitemap>
        <loc>http://example.com/sitemap2.xml</loc>
      </sitemap>
    </sitemapindex>
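Splitting a large URL list and producing the matching index file can be automated. The sketch below is one possible approach with the Python standard library; the function names, the example.com URLs, and the `sitemapN.xml` naming scheme are assumptions for illustration, not something your CMS necessarily uses.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000  # per-sitemap URL limit mentioned above


def chunk_urls(urls, size=MAX_URLS_PER_SITEMAP):
    """Split a list of URLs into consecutive chunks of at most `size` entries."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def build_sitemap_index(sitemap_urls):
    """Return a sitemap index document (as a string) referencing each child sitemap."""
    root = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for url in sitemap_urls:
        sitemap = ET.SubElement(root, "sitemap")
        ET.SubElement(sitemap, "loc").text = url
    body = ET.tostring(root, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical site with 120,000 pages.
pages = [f"https://example.com/page-{n}" for n in range(120_000)]
chunks = chunk_urls(pages)
index_xml = build_sitemap_index(
    f"https://example.com/sitemap{n}.xml" for n in range(1, len(chunks) + 1)
)
print(len(chunks))  # 3
```

Each chunk would then be written to its own sitemap file, and only the index URL needs to be submitted in Google Search Console.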

7. Debug WordPress & CMS sitemap plugins

If you’re using Yoast SEO, start by clearing the cache and regenerating the sitemap. Check for any conflicts with other plugins that could interfere with sitemap generation. Finally, make sure your sitemap is enabled and properly submitted in Google Search Console for accurate indexing.

Validating and optimizing your sitemap

Ensure correct sitemap formatting

To ensure that your sitemap is correctly formatted, follow these steps:

- Use a Sitemap Validation Tool. Utilize tools like the XML Sitemap Validator to check for any errors in your sitemap. These tools can help identify issues with syntax, structure, and encoding.

- Correct Format. Ensure your sitemap is in the correct format, such as XML or RSS. XML sitemaps are the most commonly used and are supported by all major search engines.

- Proper Encoding. Verify that your sitemap is correctly encoded, typically in UTF-8. Incorrect encoding can lead to parsing errors.

- Structured Layout. Check that each URL sits in its own <url> entry inside the root <urlset> element. The only required tag in each entry is <loc>; <lastmod> is optional but useful when it is accurate, while Google ignores <changefreq> and <priority>.

By ensuring that your sitemap is correctly formatted, you can help Google Search Console to crawl and index your website’s content accurately. Proper formatting is crucial for avoiding errors and ensuring that all your pages are discoverable by search engines.
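Several of the checks above can be approximated in a few lines of code. The following is a minimal sketch, not a full validator: it only tests well-formedness, the root element, the presence of absolute `<loc>` URLs, and the size limits. The function name and the sample documents are made up for this illustration.

```python
import xml.etree.ElementTree as ET

NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"
MAX_URLS = 50_000
MAX_BYTES = 50 * 1024 * 1024  # 50 MB, uncompressed


def validate_sitemap(xml_bytes: bytes) -> list:
    """Return a list of human-readable problems; an empty list means none were found."""
    problems = []
    if len(xml_bytes) > MAX_BYTES:
        problems.append("file exceeds 50 MB")
    try:
        root = ET.fromstring(xml_bytes)
    except ET.ParseError as error:
        return problems + [f"not well-formed XML: {error}"]
    if root.tag not in (f"{NS}urlset", f"{NS}sitemapindex"):
        problems.append(f"unexpected root element: {root.tag}")
    locs = [el.text.strip() if el.text else "" for el in root.iter(f"{NS}loc")]
    if not locs:
        problems.append("no <loc> entries found")
    if len(locs) > MAX_URLS:
        problems.append("more than 50,000 URLs")
    for loc in locs:
        if not loc.startswith(("http://", "https://")):
            problems.append(f"<loc> is not an absolute URL: {loc!r}")
    return problems


good = (
    b'<?xml version="1.0" encoding="UTF-8"?>'
    b'<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    b"<url><loc>https://yourwebsite.com/</loc></url></urlset>"
)
broken = b"<urlset><url><loc>https://yourwebsite.com/</loc></urlset>"

print(validate_sitemap(good))  # []
print(validate_sitemap(broken))
```

A dedicated sitemap validator remains the better choice for schema-level checks; this sketch is only meant to catch the most common structural mistakes early.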

Optimize sitemap for search engines

To optimize your sitemap for search engines, follow these steps:

Include all pages

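Ensure that your sitemap lists every canonical, indexable page you want to appear in search results, including pages in subdirectories. Subdomains are separate hosts, so each one usually gets its own sitemap file hosted on that subdomain. This comprehensive approach helps search engines understand the full scope of your site.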

Use a sitemap index file

If your site has a large number of URLs, use a sitemap index file (sitemap_index.xml) to help Google Search Console efficiently crawl and index your content. This file can link to multiple smaller sitemaps, making it easier for search engines to process.

Regular updates

Keep your sitemap regularly updated to reflect any changes to your website’s content. This includes adding new pages, updating existing ones, and removing obsolete URLs.
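One way to keep the file current is to regenerate it from your list of pages whenever content changes. The sketch below builds a sitemap with a `<lastmod>` date per URL using the Python standard library; the function name, URLs, and dates are hypothetical, and a CMS plugin would normally do this for you.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_urlset(pages):
    """Build a <urlset> from (url, last_modified_date) pairs and return it as a string."""
    root = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, modified in pages:
        entry = ET.SubElement(root, "url")
        # ElementTree escapes characters such as "&" in the URL automatically.
        ET.SubElement(entry, "loc").text = url
        # <lastmod> uses the W3C date format, for example YYYY-MM-DD.
        ET.SubElement(entry, "lastmod").text = modified.isoformat()
    body = ET.tostring(root, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages and modification dates.
xml_text = build_urlset([
    ("https://yourwebsite.com/", date(2025, 3, 1)),
    ("https://yourwebsite.com/blog?a=1&b=2", date(2025, 3, 10)),
])
print(xml_text)
```

Removing an obsolete page then simply means leaving it out of the list passed to `build_urlset` on the next run.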

Include media

Your sitemap can also describe the images, videos, and other media on your pages, for example through Google’s image and video sitemap extensions. This helps Google discover media files it might otherwise miss and can improve the visibility of your multimedia content in search results.

Optimizing your sitemap helps search engines find and index your content more easily, which supports your website’s visibility in search results. A well-structured sitemap helps ensure that important pages are crawled efficiently.

Re-submit the sitemap in the Google Search Console

Once all issues are resolved, open Google Search Console, go to Sitemaps, and delete the outdated sitemap. Submit the updated sitemap URL and wait for Google to fetch it. If a fetch error occurs, it may indicate a pending status or an issue with the sitemap. Monitor for errors over the next 24-48 hours and use Google Search Console to diagnose and fix any remaining problems.

Final thoughts

A well-optimized sitemap is crucial for SEO. Regularly update and validate it to improve Google indexing. Still having issues? Use Sitechecker for advanced diagnostics.