Understanding Robots.txt and XML Sitemaps: Laying the Foundation

In search engine optimization (SEO), the technical side of website management matters as much as the content itself. Two essential components here are the robots.txt file and XML sitemaps. Together they shape how search engines crawl and index web content, which in turn affects a site’s visibility and performance in search results.

Robots.txt: The Gatekeeper of Your Site

The robots.txt file, served from the root of your domain (for example, https://example.com/robots.txt), acts as the gatekeeper for your website. It tells search engine bots which pages or sections of your site they may or may not crawl. This matters for managing crawl traffic, so that search engines focus on your most valuable content instead of spending resources on irrelevant or private areas. A properly configured robots.txt file keeps compliant crawlers away from duplicate content, private sections, and files that offer no SEO value, making the crawl process more efficient.
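To make the gatekeeper idea concrete, here is a minimal sketch using Python’s standard-library `urllib.robotparser`, which reads robots.txt rules the way a well-behaved crawler would. The robots.txt content and the URLs are hypothetical examples. Keep in mind that compliance is voluntary: the file tells crawlers what to skip, it does not enforce anything.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for example.com: keep crawlers out of the admin
# area and internal search results, allow everything else.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /search
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse rules from text instead of fetching

for url in [
    "https://example.com/blog/robots-guide",
    "https://example.com/admin/settings",
    "https://example.com/search?q=sitemap",
]:
    # can_fetch() applies the rules for the given user agent to the URL path
    allowed = parser.can_fetch("Googlebot", url)
    print(f"{url} -> {'crawl' if allowed else 'skip'}")
```

Python’s parser applies simple path-prefix matching. Google’s crawler also understands `*` and `$` wildcards in rules, so patterns like those are better tested with the search engine’s own tools.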

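A well-crafted robots.txt file guides search engines smoothly through your site, but it is not a privacy tool. Its rules are publicly readable and only advisory, and a disallowed URL can still appear in search results if other pages link to it. To keep sensitive content out of the index, use a noindex directive on a page crawlers are allowed to fetch, or put the content behind authentication. Even so, robots.txt is the first step in managing your digital footprint on search engines and requires careful consideration to balance accessibility and privacy.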

The Role of Sitemap Inclusion in Robots.txt

Including a sitemap in the robots.txt file is more than a best practice; it is a strategic decision that can noticeably influence your website’s SEO performance.

Prompting Immediate Search Engine Awareness

Adding a Sitemap line with the full URL of your sitemap.xml (for example, Sitemap: https://example.com/sitemap.xml) to your robots.txt file tells every crawler that reads the file where your sitemap lives, without requiring a separate submission to each search engine. This can speed up the discovery of new or updated content, helping your website stay fresh and relevant in search engine results pages (SERPs). It is a simple, proactive way to help your content get the visibility it deserves.
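The sketch below shows what a crawler “sees” when a robots.txt file declares a sitemap. It uses `RobotFileParser.site_maps()` from Python’s standard library (available since Python 3.8); the file content and sitemap URL are hypothetical. Per the Sitemaps protocol, the Sitemap line is independent of any User-agent group, so it can sit anywhere in the file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: crawl rules first, then an absolute Sitemap URL.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# site_maps() returns the list of declared sitemap URLs, or None if there are none.
print(parser.site_maps())  # ['https://example.com/sitemap.xml']
```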

Ensuring Comprehensive Site Crawling

By pointing search engines directly to your sitemap, or to a sitemap index that lists several sitemaps, you reduce the risk of important pages being overlooked. This is especially important for large websites with thousands of pages, where some content can easily fall through the cracks. A sitemap listed in robots.txt supports a more thorough and efficient crawl, making it more likely that your site is indexed completely.
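For large sites, the Sitemaps protocol caps each sitemap file at 50,000 URLs (and 50 MB uncompressed), so big sites split their URLs across several files and list them in a sitemap index. Here is a minimal sketch of that split using Python’s `xml.etree.ElementTree`; the `sitemap-N.xml` file naming and the page URLs are hypothetical, and the 50 MB size limit is not checked.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000  # per-file URL limit in the Sitemaps protocol
XML_DECLARATION = '<?xml version="1.0" encoding="UTF-8"?>\n'


def to_xml(root):
    """Serialize an element with an XML declaration on top."""
    return XML_DECLARATION + ET.tostring(root, encoding="unicode")


def build_urlset(urls):
    """One child sitemap: a <urlset> with a <url><loc> entry per page."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
    return to_xml(urlset)


def build_index(sitemap_urls):
    """A <sitemapindex> with one <sitemap><loc> entry per child sitemap."""
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for url in sitemap_urls:
        ET.SubElement(ET.SubElement(index, "sitemap"), "loc").text = url
    return to_xml(index)


def split_site(page_urls, base="https://example.com", size=MAX_URLS_PER_SITEMAP):
    """Split pages into child sitemaps of at most `size` URLs, plus an index."""
    children = {}
    for start in range(0, len(page_urls), size):
        name = f"{base}/sitemap-{start // size + 1}.xml"  # hypothetical naming
        children[name] = build_urlset(page_urls[start:start + size])
    return children, build_index(list(children))


pages = [f"https://example.com/product/{i}" for i in range(120_000)]
children, index_xml = split_site(pages)
print(len(children))  # 3
```

With this layout, the robots.txt Sitemap line would point at the index file, and crawlers follow it to each child sitemap.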

When to Include Your Sitemap in Robots.txt (And When Not To)

Scenarios Warranting Sitemap Inclusion

The inclusion of a sitemap in robots.txt is highly recommended for websites that:

- Regularly add new content, ensuring that new pages are discovered and indexed promptly.

- Contain a large archive of pages, which may be difficult for crawlers to discover without guidance.

- Have complex or deep website structures, where some valuable content might be overlooked during routine crawling.

When Skipping Sitemap Inclusion is Okay

While including a sitemap in robots.txt is generally beneficial, there are scenarios where it might not be necessary:

- Small websites with a minimal number of pages, which crawlers can usually discover fully by following internal links.

- Websites whose primary content is easily reachable through navigation and needs no additional cues for discovery.

- Websites whose content search engines have already indexed comprehensively and whose structure rarely changes.

The strategic inclusion of a sitemap in robots.txt can significantly impact a website’s search engine visibility and performance. It is a nuanced decision that should be based on the specific needs and characteristics of the website, with the goal of maximizing content discovery and indexing efficiency.

Enhancing Your Sitemap Strategy: Advanced Tips and Best Practices

To maximize the effectiveness of your sitemap and ensure optimal website indexing by search engines, it’s crucial to adhere to a set of advanced tips and best practices. These strategies not only enhance the discoverability of your content by search bots but also improve your site’s overall SEO performance.

Code Quality and Sitemap Integrity

The foundation of an effective sitemap strategy is the quality and integrity of the sitemap’s code. A sitemap must be well-formed XML that follows the Sitemaps protocol (documented at sitemaps.org and supported by Google, Bing, and other search engines). This means:

- Correct Syntax: Make sure your XML sitemap is free of errors and follows the protocol’s structure, with a <urlset> root element declaring the sitemap namespace, one <url> entry per page, and a <loc> tag holding each page’s full URL.

- No Broken Links: Regularly check the URLs in your sitemap and remove or fix any that return errors. Search engines may lose trust in your sitemap if they encounter too many of them.

- Include Important URLs Only: Your sitemap should contain only canonical URLs and exclude duplicate pages, URLs carrying session IDs, and pages with noindex tags.
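Several of these checks can be automated before a sitemap is published. The following is a minimal sketch, not a full validator: it confirms the file is well-formed XML with a `<urlset>` root in the sitemap namespace, and flags relative or off-site URLs, duplicates, and URLs with session-ID parameters. The `SESSION_PARAMS` names are a hypothetical assumption about the site; checking canonical tags or noindex would require fetching each page, which this offline sketch does not do.

```python
import xml.etree.ElementTree as ET
from urllib.parse import parse_qs, urlparse

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}
# Hypothetical list of query parameters that carry session IDs on this site.
SESSION_PARAMS = {"sessionid", "sid", "phpsessid"}


def audit_sitemap(xml_text, host):
    """Return human-readable problems found in a <urlset> sitemap."""
    try:
        root = ET.fromstring(xml_text)
    except ET.ParseError as exc:
        return [f"not well-formed XML: {exc}"]
    if root.tag != f"{{{NS['sm']}}}urlset":
        return [f"unexpected root element: {root.tag}"]

    problems, seen = [], set()
    for loc in root.findall("sm:url/sm:loc", NS):
        url = (loc.text or "").strip()
        parts = urlparse(url)
        # <loc> must be a full URL on the site the sitemap describes.
        if parts.scheme not in ("http", "https") or parts.netloc != host:
            problems.append(f"not an absolute URL on {host}: {url}")
        if SESSION_PARAMS & {key.lower() for key in parse_qs(parts.query)}:
            problems.append(f"session ID in URL: {url}")
        if url in seen:
            problems.append(f"duplicate URL: {url}")
        seen.add(url)
    return problems


sample = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/blog?sessionid=abc123</loc></url>
  <url><loc>/relative/page</loc></url>
  <url><loc>https://example.com/</loc></url>
</urlset>"""

for problem in audit_sitemap(sample, "example.com"):
    print(problem)
```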

Keeping Your Sitemap Up-to-Date

An up-to-date sitemap is crucial for ensuring search engines are aware of new content or changes to existing pages. This includes:

- Regular Updates: Update your sitemap regularly to reflect new content additions, deletions, or modifications.

- Automate Updates: If possible, use tools or CMS features that automatically update your sitemap whenever content is added or changed.

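- Prioritize Important Content: The <priority> tag lets you indicate the relative importance of pages within your site, though Google has stated that it ignores <priority> and <changefreq> values. An accurate <lastmod> date for each URL is a more dependable way to signal which content has changed.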
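Automated updates usually mean regenerating the sitemap from the CMS’s own records whenever content is published or edited. This sketch assumes a hypothetical list of (URL, last-modified date) pairs standing in for a database query, and writes each date into `<lastmod>` in the W3C Datetime format (YYYY-MM-DD) that the Sitemaps protocol accepts.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def render_sitemap(pages):
    """Build sitemap XML from (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    # Newest content first: crawlers don't care about order, but humans reviewing do.
    for url, modified in sorted(pages, key=lambda page: page[1], reverse=True):
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        ET.SubElement(entry, "lastmod").text = modified.isoformat()
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")


# Hypothetical CMS records; in practice a publish/update hook would query the
# database and rewrite sitemap.xml so the file never goes stale.
pages = [
    ("https://example.com/", date(2024, 5, 2)),
    ("https://example.com/blog/new-post", date(2024, 5, 10)),
]
print(render_sitemap(pages))
```

Generating the file with an XML library rather than string concatenation also takes care of escaping: characters such as `&` in URLs are written as `&amp;`, as the protocol requires.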

Monitoring Sitemap Performance

To assess the effectiveness of your sitemap, it’s important to monitor its performance using tools provided by search engines, such as Google Search Console:

- Submission Status: Ensure your sitemap is successfully submitted and accepted without errors.

- Index Coverage: Review how many pages in your sitemap are being indexed versus submitted. Investigate and resolve any discrepancies.

- Crawl Rate and Errors: Keep an eye on how often search engines crawl your sitemap and look out for any crawl errors that need to be addressed.
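Investigating the gap between submitted and indexed pages comes down to comparing two sets of URLs. Here is a minimal sketch, assuming you have exported the URLs that a tool such as Google Search Console reports as indexed into a plain Python set; the sample data is hypothetical, and the export step itself is not shown.

```python
import xml.etree.ElementTree as ET

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text):
    """Extract every <loc> value from a <urlset> sitemap."""
    root = ET.fromstring(xml_text)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS) if loc.text}


def coverage_report(submitted, indexed):
    """Compare sitemap URLs against URLs reported as indexed."""
    covered = submitted & indexed
    return {
        "indexed_ratio": len(covered) / len(submitted) if submitted else 0.0,
        "not_indexed": sorted(submitted - indexed),      # submitted, not yet indexed
        "not_in_sitemap": sorted(indexed - submitted),   # indexed, missing from sitemap
    }


sitemap = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/pricing</loc></url>
  <url><loc>https://example.com/blog/launch</loc></url>
  <url><loc>https://example.com/blog/draft-notes</loc></url>
</urlset>"""

# Hypothetical export of URLs reported as indexed.
indexed = {"https://example.com/", "https://example.com/pricing",
           "https://example.com/old-landing"}

report = coverage_report(sitemap_urls(sitemap), indexed)
print(report["indexed_ratio"])  # 0.5
print(report["not_indexed"])
print(report["not_in_sitemap"])
```

Both lists are worth reviewing: pages that are submitted but not indexed may have quality or crawl problems, while indexed pages missing from the sitemap may mean the sitemap is out of date.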

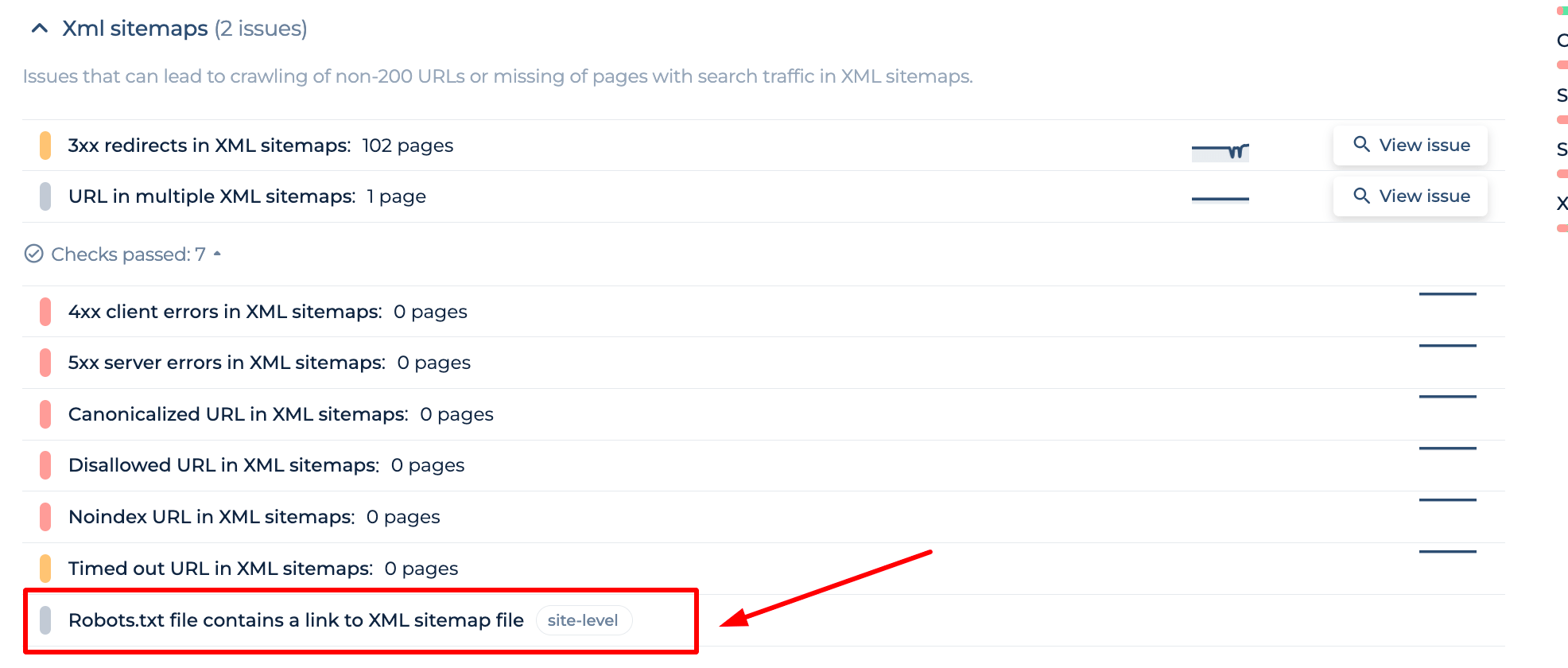

In the screenshot from Sitechecker’s SEO tool, the ‘Xml sitemaps’ section is highlighted, focusing on whether your robots.txt file contains a link to your XML sitemap file. This matters because the link guides search engines to the sitemap, helping them discover and index all important pages. By clicking the “View issue” link next to the ‘Robots.txt file contains a link to XML sitemap file’ entry, users can obtain a detailed list of pages affected by this issue.

This function is particularly helpful because it lets webmasters quickly verify that the sitemap link is present in their robots.txt, a fundamental step in site-level SEO. The tool pinpoints exactly where the issue lies without overwhelming the user with the number of affected pages, and its layout makes the result easy to understand and act on, so that search engines can find and use your sitemap effectively.
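A similar presence check can be approximated locally. The sketch below is not how Sitechecker implements it; it simply parses a robots.txt body with Python’s `urllib.robotparser` and reports whether a Sitemap line exists and whether each declared URL is fully qualified, as the Sitemaps protocol expects. The function name `check_sitemap_directive` is hypothetical.

```python
from urllib.parse import urlparse
from urllib.robotparser import RobotFileParser


def check_sitemap_directive(robots_txt):
    """Return problems with the Sitemap lines in a robots.txt body (hypothetical helper)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    sitemaps = parser.site_maps()  # None when no Sitemap line is present
    if not sitemaps:
        return ["robots.txt does not declare a Sitemap"]
    problems = []
    for url in sitemaps:
        parts = urlparse(url)
        # The Sitemaps protocol expects a full URL, including scheme and host.
        if parts.scheme not in ("http", "https") or not parts.netloc:
            problems.append(f"Sitemap URL is not absolute: {url}")
    return problems


print(check_sitemap_directive("User-agent: *\nDisallow:\n"))
# ['robots.txt does not declare a Sitemap']
print(check_sitemap_directive("User-agent: *\nSitemap: /sitemap.xml\n"))
# ['Sitemap URL is not absolute: /sitemap.xml']
```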

Optimize Your Indexing with Proper Sitemap Placement!

Use our Sitechecker tool to make sure your robots.txt file points search engines to your sitemap.xml.

When to Seek Professional SEO Advice

While many aspects of sitemap management can be handled in-house, there are situations where seeking professional SEO advice can be beneficial:

- Complex Site Structures: If your website has a complex architecture, an SEO professional can help ensure your sitemap strategy effectively covers all content.

- Technical Issues: For persistent issues with sitemap errors, indexing, or crawl rates, an SEO expert can provide insights and solutions that may not be obvious to non-specialists.

- Major Site Overhauls: Before and after major website redesigns or migrations, consulting with an SEO professional can help minimize negative impacts on your search engine rankings.

Implementing these advanced tips and best practices will improve the quality and effectiveness of your sitemap and strengthen your website’s overall SEO health. Keeping your sitemap accurate, up-to-date, and monitored helps search engines discover and index your content, which supports better visibility in search results.

Conclusion

The interplay between sitemap.xml files and robots.txt is central to a website’s visibility in search engines. Robots.txt acts as a gatekeeper, steering crawlers toward valuable content and away from areas that offer no search value, though it cannot hide private pages, which need noindex or authentication instead. XML sitemaps serve as detailed maps that help search engines find and index all important content. Including sitemap.xml in robots.txt can noticeably improve a site’s discoverability, especially for websites that update content frequently or have complex structures. It is less critical for small sites whose content is easily reached through navigation. Advanced practices such as maintaining code quality, updating sitemaps regularly, and monitoring their performance keep a sitemap strategy effective as SEO continues to evolve.