What is Technical SEO?

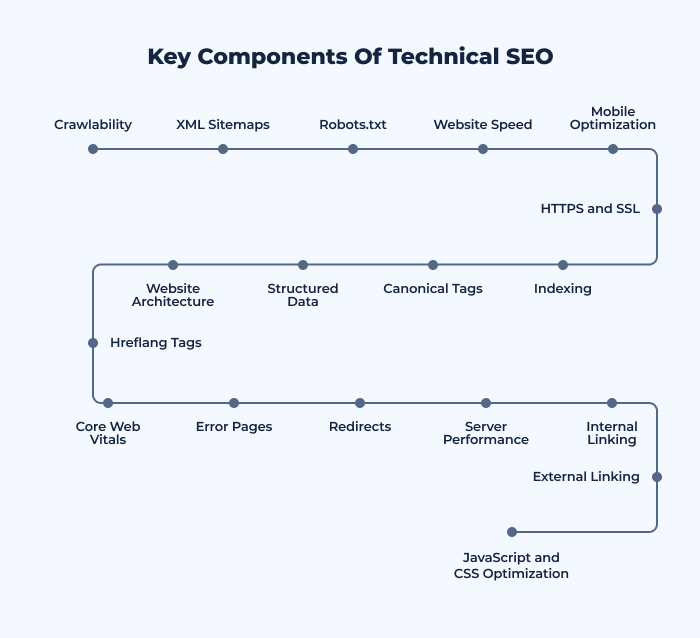

Technical SEO refers to optimizing the technical aspects of a website so that search engines can crawl and index its pages efficiently. On-page and off-page SEO focus on the quality and promotion of content. Technical SEO instead improves the website’s foundational elements, such as site speed, mobile optimization, and website architecture. However strong the content is, technical SEO makes sure the site’s technical setup doesn’t stop search engines or users from accessing and understanding it.

What is SEO?

Search Engine Optimization (SEO) is the process of optimizing a website to improve its visibility in search engine results pages (SERPs). The primary goal of SEO is to drive organic (non-paid) traffic to a website. SEO encompasses various tactics and strategies, which can broadly be categorized into three main areas:

- On-page SEO: This relates to the content on your website. It involves optimizing the content to make sure it’s reader-friendly and also contains relevant keywords that people might use when searching for topics in your niche.

- Off-page SEO: This revolves around external signals like backlinks. It focuses on building a strong network of high-quality links pointing back to your site, among other strategies.

- Technical SEO: As discussed above, this pertains to the technical aspects of a site, such as ensuring fast loading speeds, a secure connection, mobile-friendliness, structured data markup, and more.

Together, these three pillars make a website appealing to both search engines and human users, which increases its chances of ranking higher in search results.

Put simply, technical SEO optimizes a website for the crawling and indexing phase: when its technical aspects are in order, search engines can easily crawl and index its pages.

Why is Technical SEO Important?

Technical SEO forms the backbone of any effective SEO strategy. While the quality and relevance of content certainly play crucial roles in website ranking, the technical foundation on which content rests can significantly influence its visibility in search engine results. Here’s why Technical SEO is indispensable:

| Benefit | Why it matters |
| --- | --- |
| Enhanced Crawlability & Indexability | For a website to appear in search results, search engines first need to crawl its pages and index them. Technical issues, such as poor site structure or a faulty robots.txt file, can prevent or limit search engines from accessing your site, making your content virtually invisible. |
| Optimal User Experience | Users have little patience for slow-loading pages, difficult navigation, or non-mobile-friendly sites. By optimizing these technical elements, you ensure users have a seamless and positive experience, which search engines recognize and reward with higher rankings. |
| Avoiding Duplicate Content | Technical SEO can help in managing and avoiding duplicate content issues, which can dilute site authority and confuse search engines about which version of a page to rank. |
| Secure and Accessible Website | With the rise of cyber threats, a secure website (HTTPS as opposed to HTTP) is not just beneficial for users but is also a ranking factor for Google. |
| Optimizing for Organic Rankings | While content gets you in the game, technical SEO ensures that you’re in the best position to compete. Proper implementation can significantly affect page rankings, especially in competitive niches. |
| Adaptability to Algorithm Changes | Search engine algorithms evolve constantly. A technically sound website is in a better position to weather these changes and even benefit from them. |
| Future-Proofing | With the advent of new technologies and features, such as voice search and the increasing importance of Core Web Vitals, a solid technical foundation ensures your website remains adaptable and competitive. |

In essence, while content might be king, Technical SEO provides the castle from which it rules. Ignoring it can result in even the best content going unnoticed, but with it, content can shine and achieve the visibility it deserves.

What Google Says About Technical SEO Today

Google’s John Mueller has said on Twitter that technical SEO is still important and that it is the foundation of everything built on the open web. He has also said that technical SEO is not going away and that ignoring it is a mistake.

Mueller has addressed this directly in response to a tweet claiming that technical SEO is becoming less important.

He has also said that technical SEO is essential for creating a good user experience, which is a key ranking factor for Google.

This shows that technical SEO still has a major influence on how sites perform in Google’s rankings.

Overall, John Mueller’s tweets make it clear that technical SEO is still a very important part of SEO. He has said that it is the foundation of everything built on the open web, that it is essential for creating a good user experience, and that it plays a key role in how sites rank in Google.


Understanding Crawling

Crawling is the process by which search engines like Google discover updated content on the web, such as new sites or changes to existing sites. Web crawlers, also known as spiders or bots, scan the web and collect data about each page to help search engines index and serve the most up-to-date and relevant content to users. To ensure that your website is easily and efficiently crawled, certain technical considerations need to be in place.

Create SEO-Friendly Site Architecture

An SEO-friendly site architecture facilitates both user navigation and search engine crawling. By creating a logical hierarchy of information, you make it easier for users to find the content they’re interested in and for search engines to understand your site’s structure. Here are some key components:

- Logical URL Structure: Ensure that URLs are descriptive and give an idea of the page’s content.

- Utilize Internal Linking: By interlinking related content, you help crawlers find new pages and understand the context of each page.

- Use Heading Tags Appropriately: Heading tags (H1, H2, H3, etc.) provide a structure to your content, making it more readable for users and comprehensible for search engines.

- Limit Depth: Ideally, any page should be reachable within three to four clicks from the homepage, ensuring no content is buried too deep.
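To check the three-to-four-click guideline on your own site, you can measure click depth with a breadth-first search over your internal links. The sketch below is a minimal Python example, assuming you already have a map of each page’s internal links (for instance, exported from a crawler); the `site` dictionary and the function names are hypothetical. It also reports orphan pages: pages you know about that can’t be reached from the homepage at all.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Return the minimum number of clicks from `home` to every reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            # BFS visits pages in order of distance, so the first depth recorded is the shortest.
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def audit_depth(links: dict[str, list[str]], max_clicks: int = 3, home: str = "/"):
    """Return (pages deeper than max_clicks, known pages unreachable from home)."""
    depths = click_depths(links, home)
    known = set(links) | {t for targets in links.values() for t in targets}
    too_deep = sorted(p for p, d in depths.items() if d > max_clicks)
    orphans = sorted(known - set(depths))
    return too_deep, orphans


# Hypothetical internal-link map: page -> pages it links to.
site = {
    "/": ["/blog/", "/products/"],
    "/blog/": ["/blog/technical-seo/"],
    "/blog/technical-seo/": ["/blog/technical-seo/crawling/"],
    "/blog/technical-seo/crawling/": ["/blog/technical-seo/crawling/robots-txt/"],
    "/old-landing-page/": [],  # a known page that nothing links to
}

too_deep, orphans = audit_depth(site)
print("Too deep:", too_deep)
print("Orphans:", orphans)
```

Breadth-first search fits this job because the first time it reaches a page is always via the shortest click path, which is exactly what click depth means.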

Submit Your Sitemap to Google

A sitemap is a file that lists the important pages of your website, giving search engines a roadmap of its structure. Submitting your sitemap to Google through Google Search Console helps Google discover all the pages on your site, including any you’ve recently added or updated, although it does not guarantee that every listed page will be indexed.
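If your CMS doesn’t generate a sitemap for you, a basic XML sitemap in the sitemaps.org format is straightforward to build. The following Python sketch uses only the standard library; the page list and dates are hypothetical placeholders. ElementTree escapes characters such as `&` in URLs on output, which the sitemap protocol requires.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Build a sitemap from (absolute URL, last-modified date as YYYY-MM-DD) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # characters such as & are escaped on output
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages and last-modified dates.
pages = [
    ("https://www.example.com/", "2024-05-01"),
    ("https://www.example.com/blog/technical-seo/", "2024-05-20"),
    ("https://www.example.com/products/?color=red&size=9", "2024-04-11"),
]
print(build_sitemap(pages))
```

The resulting file is typically served at a URL such as `/sitemap.xml` and then submitted in Search Console.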

Optimize Your Robots.txt

The robots.txt file acts as a guide for web crawlers, indicating which parts of your site should or shouldn’t be crawled. Properly optimizing this file ensures:

- You don’t waste crawl budget on unimportant or duplicate pages.

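- You keep crawlers out of areas such as admin pages. Note that robots.txt controls crawling, not indexing: a blocked URL can still be indexed if other sites link to it, so use a noindex tag or authentication for pages that must stay out of search results.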

- You guide crawlers to the most essential parts of your site.

However, be cautious: an incorrectly configured robots.txt can inadvertently block search engines from crawling your entire site or important sections of it.
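One way to avoid accidentally blocking important sections is to test your rules before deploying them. This sketch uses Python’s built-in `urllib.robotparser` against a hypothetical robots.txt. Keep in mind that Python’s parser applies rules in file order (first match wins), while Google uses the most specific matching rule; the example puts `Allow: /` last so both interpretations agree.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for www.example.com.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart
Allow: /

Sitemap: https://www.example.com/sitemap.xml
"""


def check_urls(robots_txt: str, urls: list[str], agent: str = "Googlebot") -> dict[str, bool]:
    """Return {url: True if `agent` may crawl it} under the given robots.txt rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {url: parser.can_fetch(agent, url) for url in urls}


results = check_urls(ROBOTS_TXT, [
    "https://www.example.com/blog/technical-seo/",  # must stay crawlable
    "https://www.example.com/admin/login",
    "https://www.example.com/cart",
])
for url, allowed in results.items():
    print("allowed" if allowed else "BLOCKED", url)
```

Running a list of your most important URLs through a check like this before each robots.txt change is a cheap guard against blocking a whole section by mistake.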

Specify a Preferred Domain

Decide whether your website should be accessed with the “www” prefix or without it. Google treats “www.example.com” and “example.com” as separate sites, so specifying a preferred domain ensures that ranking signals are consolidated on your chosen version. Google Search Console no longer offers a preferred-domain setting, so implement a 301 redirect from the non-preferred version to your chosen one, and use the preferred version consistently in canonical tags and your sitemap, to avoid duplication issues.
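The redirect rule itself is simple: any request for a non-preferred scheme or host gets a 301 to the same path on the preferred version. This Python function is only a sketch of that rule, assuming `https://www.example.com` is the chosen version (the constants are hypothetical); in practice the redirect is usually configured in the web server, CDN, or CMS rather than in application code.

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit

# Assumption: the site has chosen HTTPS and the www host as its preferred version.
PREFERRED_SCHEME = "https"
PREFERRED_HOST = "www.example.com"
SITE_HOSTS = {"www.example.com", "example.com"}  # every host variant of this site


def redirect_target(url: str) -> Optional[str]:
    """Return the URL to 301-redirect to, or None if `url` is already the preferred version."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    if host not in SITE_HOSTS:
        return None  # not our site; leave it alone
    if parts.scheme == PREFERRED_SCHEME and host == PREFERRED_HOST:
        return None  # already preferred, so no redirect (and no redirect loop)
    # Keep path and query so each old URL maps to its exact equivalent.
    return urlunsplit((PREFERRED_SCHEME, PREFERRED_HOST, parts.path or "/", parts.query, ""))


for url in ["http://example.com/blog?page=2", "https://example.com/",
            "http://www.example.com", "https://www.example.com/blog"]:
    print(url, "->", redirect_target(url))
```

Mapping each variant to its exact counterpart, rather than sending everything to the homepage, is what lets ranking signals transfer page by page.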

Understanding Indexing

Indexing refers to the process where search engines store and organize the content found during the crawling process. Once a page is indexed, it becomes eligible to be displayed in search engine results. Effective indexing is crucial for SEO because if your pages aren’t indexed, they won’t appear in search results. To optimize the indexing of your site, consider the following elements:

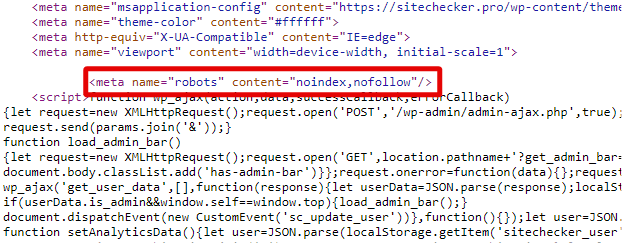

Noindex Tag

The noindex tag instructs search engines not to include a particular page in their index. This can be useful for:

- Pages with duplicate or thin content.

- Temporary pages or those with time-sensitive information.

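- Private or confidential pages (truly sensitive content should also sit behind a login).

To apply it, add `<meta name="robots" content="noindex">` to the page’s `<head>` or send an `X-Robots-Tag: noindex` HTTP header. This keeps these pages out of search results and helps maintain the quality of your indexed pages. Crawlers must still be able to fetch the page to see the directive, so don’t also block a noindexed page in robots.txt.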
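When auditing, it helps to confirm that important pages don’t carry a stray noindex directive. The following sketch uses Python’s standard `html.parser` to read `robots` and `googlebot` meta tags from a page’s HTML. It treats `none` as noindex (since `none` means `noindex, nofollow`) and, as a simplification, ignores the `X-Robots-Tag` header; the class and function names are hypothetical.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":  # tag and attribute names arrive lowercased
            return
        attrs = {name: value or "" for name, value in attrs}
        if attrs.get("name", "").lower() in {"robots", "googlebot"}:
            for directive in attrs.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def is_noindexed(html: str) -> bool:
    """True if the page's meta robots tags keep it out of the index."""
    parser = RobotsMetaParser()
    parser.feed(html)
    # "none" is shorthand for "noindex, nofollow".
    return bool(parser.directives & {"noindex", "none"})


page = '<html><head><meta name="robots" content="noindex, follow"></head><body>...</body></html>'
print(is_noindexed(page))
```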

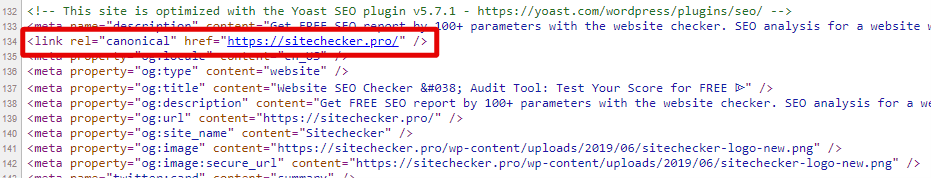

Check Your Canonical URLs

Canonical URLs are used to indicate the preferred version of a webpage when multiple versions exist (due to parameters, session IDs, etc.). By setting a canonical URL:

- You signal to search engines which version of the page should be indexed.

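- You consolidate duplicate versions so that ranking signals point to the preferred URL. Google treats the canonical as a strong hint rather than a strict directive, so keep internal links and sitemaps consistent with it.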
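Canonical tags are easier to keep consistent if every page derives its canonical URL from the same normalization rule. This Python sketch assumes a hypothetical site where `utm_*` tracking parameters and a few session or click-ID parameters (listed in `IGNORED_PARAMS`) never change the page content. It drops them, lowercases the scheme and host, removes the fragment, and sorts the remaining parameters. Which parameters are safe to drop depends entirely on your site.

```python
import html
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical: parameters that never change page content on this site.
IGNORED_PARAMS = {"sessionid", "sid", "gclid", "fbclid"}


def canonical_url(url: str) -> str:
    """Normalize a URL so all duplicate variants map to one canonical form."""
    parts = urlsplit(url)
    params = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS and not key.lower().startswith("utm_")
    ]
    params.sort()  # ?a=1&b=2 and ?b=2&a=1 become the same URL
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), parts.path or "/", urlencode(params), ""))


def canonical_link_tag(url: str) -> str:
    """Render the <link rel="canonical"> element for the page's <head>."""
    return f'<link rel="canonical" href="{html.escape(canonical_url(url))}">'


print(canonical_link_tag("https://WWW.Example.com/shoes?utm_source=news&size=9&color=red&sessionid=abc#reviews"))
```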

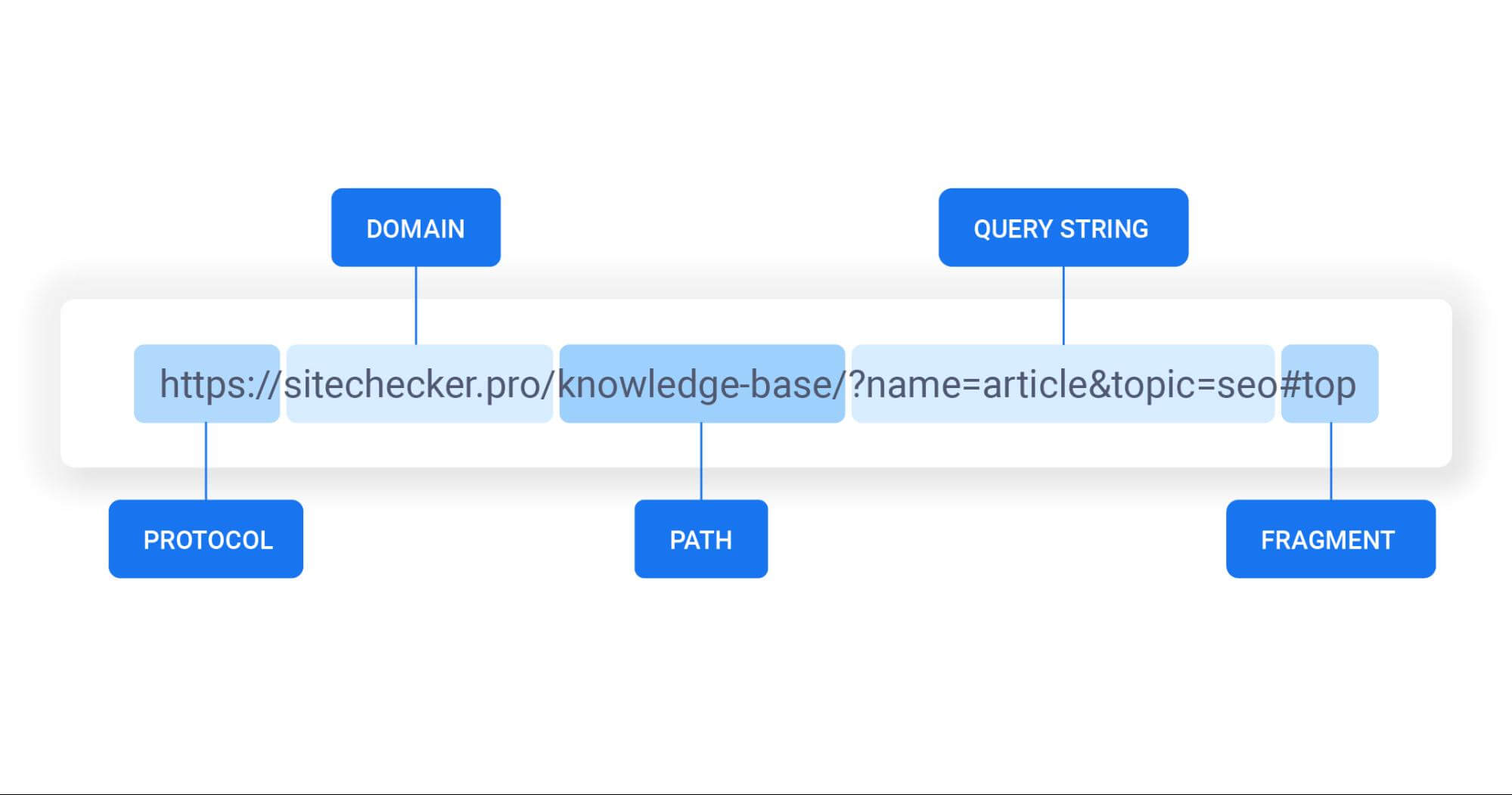

Optimize Your URL Structure

A clean and descriptive URL structure benefits both users and search engines:

- It provides a clear hierarchy, making the content more accessible.

- Descriptive URLs give users and search engines an idea of the page’s content.

- Shorter URLs are more shareable and user-friendly.
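Descriptive, short URLs are often generated from page titles. A minimal slug generator in Python might look like this; the stop-word list and six-word limit are illustrative assumptions, not rules, and the ASCII folding suits Latin-script titles only.

```python
import re
import unicodedata

# Illustrative stop words to drop from slugs; adjust for your content.
STOP_WORDS = {"a", "an", "the", "and", "or", "of", "to", "for"}


def slugify(title: str, max_words: int = 6) -> str:
    """Turn a page title into a short, lowercase, hyphen-separated URL slug."""
    # Split accented letters into base letter + accent, then drop anything that isn't ASCII.
    text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    text = text.replace("'", "")  # "Beginner's" -> "Beginners"
    words = [w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOP_WORDS]
    return "-".join(words[:max_words])


print(slugify("What Is Technical SEO? A Beginner's Guide"))
```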

Navigation and Website Structure

A clear and logical website structure aids in the indexing process:

- It ensures all pages are easily discoverable by search engines.

- It simplifies crawling, making efficient use of crawl budget.

- It helps distribute page authority and ranking power throughout the site.

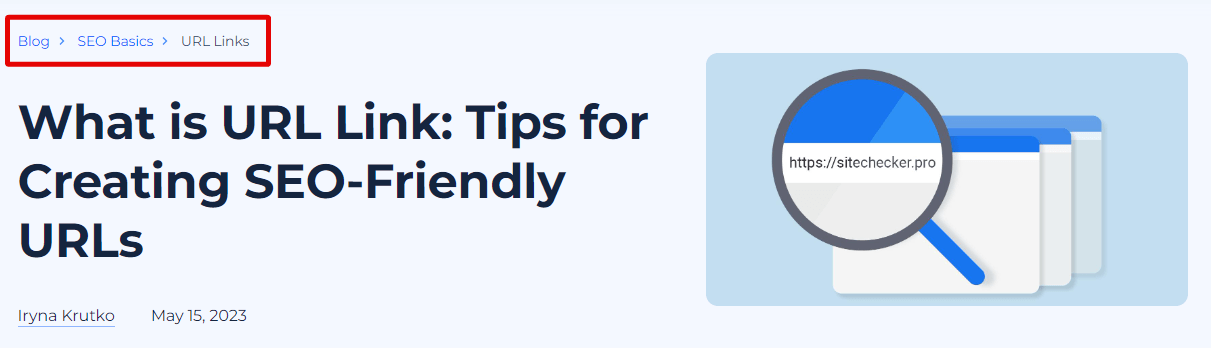

Add Breadcrumb Menus

Breadcrumbs are navigational aids that display the user’s location within a website. They offer several benefits:

- Provide another form of internal linking, boosting the indexing of related pages.

- Enhance user experience, making navigation simpler.

- Give search engines a clearer picture of a site’s structure.
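On sites whose URLs mirror their hierarchy, a breadcrumb trail can be derived from the URL path. This Python sketch assumes folder-style URLs ending in `/` and a hypothetical `labels` mapping for segments whose display names can’t be guessed from the slug. It renders the trail as an HTML `<nav>` element with the current page left unlinked.

```python
from html import escape
from typing import Optional


def breadcrumb_trail(path: str, labels: Optional[dict] = None) -> list:
    """Turn '/blog/technical-seo/' into [(name, href), ...] starting from Home."""
    labels = labels or {}
    trail = [("Home", "/")]
    href = "/"
    for segment in (s for s in path.split("/") if s):
        href += segment + "/"  # assumes folder-style URLs ending in "/"
        name = labels.get(segment, segment.replace("-", " ").title())
        trail.append((name, href))
    return trail


def breadcrumb_html(trail: list) -> str:
    """Link every ancestor; show the current (last) page as plain text."""
    links = [f'<a href="{escape(href)}">{escape(name)}</a>' for name, href in trail[:-1]]
    links.append(f'<span aria-current="page">{escape(trail[-1][0])}</span>')
    return '<nav aria-label="Breadcrumb">' + " &gt; ".join(links) + "</nav>"


trail = breadcrumb_trail("/blog/technical-seo/", labels={"technical-seo": "Technical SEO"})
print(breadcrumb_html(trail))
```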

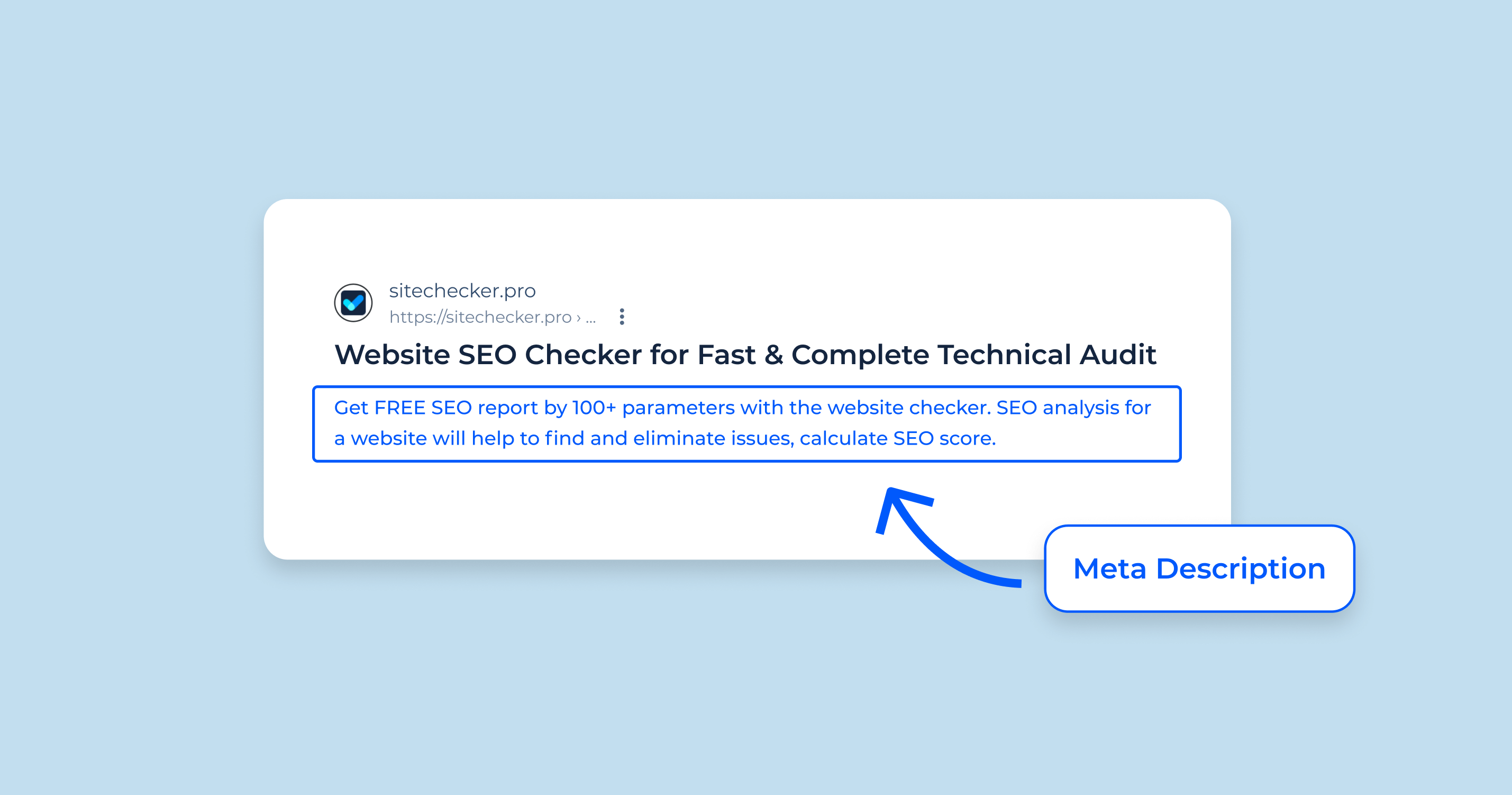

Structured Data Markup and SEO

Structured data markup, often implemented using Schema.org vocabulary, is a way to provide additional context about your content to search engines. By adding this markup:

- You can qualify for rich snippets in search results, such as reviews, ratings, and more.

- It gives search engines clear insights into the type and nature of your content.

- It can enhance visibility and improve click-through rates.
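Structured data is most commonly added as a JSON-LD `<script>` block in the page. The sketch below builds a Schema.org `Product` with an `AggregateRating`, the kind of markup behind review stars in search results. The product name and rating figures are hypothetical, and Google’s documentation for each rich result type lists further required and recommended properties that this minimal example omits.

```python
import json


def product_jsonld(name: str, rating: float, review_count: int) -> str:
    """Return a JSON-LD <script> block describing a product and its average rating."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        },
    }
    # Escape "</" so a value like "</script>" can't close the tag early; "<\/" is still valid JSON.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


print(product_jsonld("Trail Running Shoe", 4.6, 89))
```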

Technical SEO Best Practices

For a website to function optimally in the eyes of search engines and users, it’s essential to adopt a set of best practices that form the foundation of technical SEO. These practices not only help in improving rankings but also offer a better user experience.

1. Use HTTPS and SSL

- Security: An HTTPS website, secured with SSL/TLS (Secure Sockets Layer and its successor, Transport Layer Security, which modern sites use), encrypts data transferred between the user’s browser and the website, protecting it against eavesdroppers.

- Trustworthiness: Browsers like Chrome flag non-HTTPS websites as ‘Not Secure,’ which can deter visitors. An HTTPS badge improves user trust.

- SEO Ranking: Google has confirmed that HTTPS is a ranking signal, meaning secure websites have a slight edge in search rankings.

2. Make Sure Only One Version of Your Website Is Accessible to Users and Crawlers

Ensuring a single, canonical version of your website avoids content duplication issues. Whether it’s “www” vs. “non-www” or “http” vs. “https,” choose a version and redirect the others to your preferred one.

3. Website Speed and Optimization for Core Web Vitals

- User Experience: Faster sites reduce bounce rates and improve overall user experience.

- Search Rankings: Site speed is a confirmed ranking factor. Google’s Core Web Vitals, which focus on user experience metrics like Largest Contentful Paint (LCP) and Cumulative Layout Shift (CLS), emphasize this further.
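Google classifies each Core Web Vital as good, needs improvement, or poor based on the 75th percentile of real-user measurements. The thresholds in this Python sketch are the published ones for LCP and CLS, plus Interaction to Next Paint (INP), which replaced First Input Delay as a Core Web Vital in March 2024. The sample values are hypothetical; in practice they would come from field data such as Search Console’s Core Web Vitals report.

```python
# Core Web Vitals thresholds: (upper bound for "good", upper bound for "needs improvement").
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # Largest Contentful Paint, seconds
    "INP": (200, 500),   # Interaction to Next Paint, milliseconds
    "CLS": (0.1, 0.25),  # Cumulative Layout Shift, unitless score
}


def rate(metric: str, p75_value: float) -> str:
    """Classify a 75th-percentile field value for one Core Web Vital."""
    good_max, needs_improvement_max = THRESHOLDS[metric]
    if p75_value <= good_max:
        return "good"
    if p75_value <= needs_improvement_max:
        return "needs improvement"
    return "poor"


# Hypothetical 75th-percentile values for one page.
page_metrics = {"LCP": 3.1, "INP": 180, "CLS": 0.02}
for metric, value in page_metrics.items():
    print(f"{metric}: {value} -> {rate(metric, value)}")
```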

4. Ensure Your Website Is Mobile-Friendly

With mobile searches overtaking desktop, a mobile-responsive design is non-negotiable. Google’s mobile-first indexing also means that the mobile version of your website will be considered the primary version for ranking.

5. Implement Structured Data

Structured data, usually written with the Schema.org vocabulary in a format such as JSON-LD, helps search engines understand the context of your content and can enable features like rich snippets, knowledge panels, and other enhanced SERP displays.

6. Find & Fix Duplicate Content Issues

Duplicate content can dilute your site’s relevance and authority. Tools like Website Crawler for Technical SEO Analysis can help identify and address these issues.
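Many crawlers detect exact duplicates by fingerprinting page text. As a minimal illustration, this Python sketch normalizes whitespace and case, hashes the result with SHA-256, and groups URLs that share a hash; the `pages` input is a hypothetical mapping of URL to extracted text. It only catches pages that are identical after normalization; near-duplicates need a similarity technique such as shingling, which this sketch does not implement.

```python
import hashlib
import re
from collections import defaultdict


def fingerprint(text: str) -> str:
    """Hash page text after collapsing whitespace and ignoring case."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_duplicates(pages: dict[str, str]) -> list[list[str]]:
    """Group URLs whose normalized text is identical."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[fingerprint(text)].append(url)
    return sorted(sorted(urls) for urls in groups.values() if len(urls) > 1)


# Hypothetical crawl output: URL -> main text extracted from the page.
pages = {
    "https://www.example.com/shoes": "Red running shoes.\n  Free shipping.",
    "https://www.example.com/shoes?ref=home": "red running shoes. free shipping.",
    "https://www.example.com/boots": "Leather boots.",
}
print(find_duplicates(pages))
```

Each group found this way is a candidate for a canonical tag or a 301 redirect to one preferred URL.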

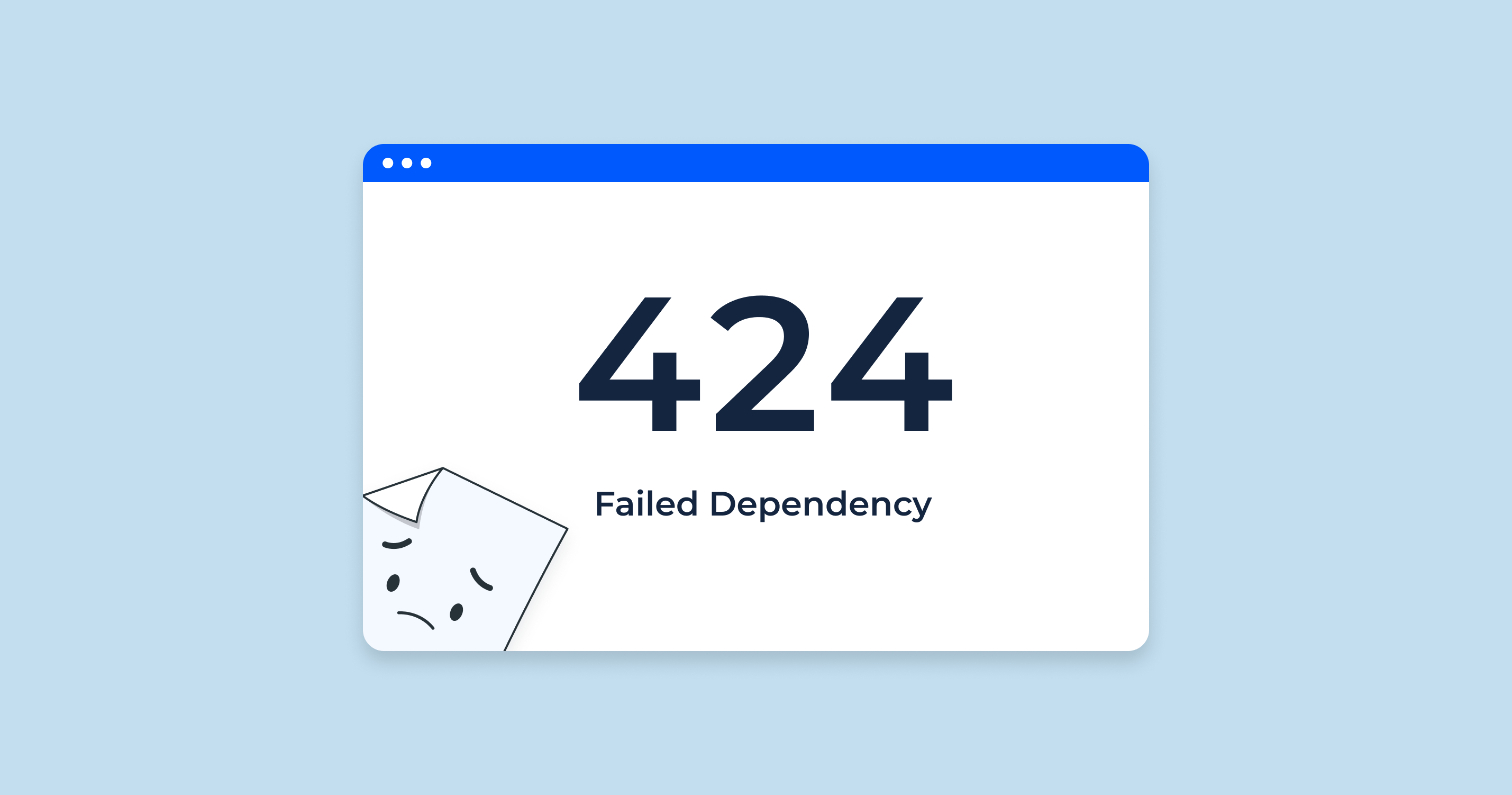

7. Optimize Your 404 Page and Find & Fix Broken Pages

A custom 404 page can guide lost users back into your site, improving user experience. Regularly check for broken links or missing pages and fix or redirect them appropriately.
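Given crawl results, finding broken internal links comes down to checking each link target’s status code. This sketch assumes a hypothetical crawler output: a mapping of each page to its internal links, and a mapping of URL to HTTP status. Targets missing from the status map are treated as 404s, which is an assumption you may want to change.

```python
def broken_links(links: dict[str, list[str]], status: dict[str, int]) -> list[tuple[str, str, int]]:
    """Return (linking page, target, status) for every internal link to an error page."""
    problems = []
    for source, targets in links.items():
        for target in targets:
            code = status.get(target, 404)  # assumption: uncrawled targets count as missing
            if code >= 400:  # 4xx client errors and 5xx server errors
                problems.append((source, target, code))
    return sorted(problems)


# Hypothetical crawl results.
links = {"/": ["/blog/", "/old-page/"], "/blog/": ["/blog/post-1/", "/blog/post-2/", "/blog/post-3/"]}
status = {"/": 200, "/blog/": 200, "/old-page/": 404, "/blog/post-1/": 200, "/blog/post-2/": 500}

for source, target, code in broken_links(links, status):
    print(f"{source} links to {target} ({code})")
```

Listing the linking page alongside each broken target tells you where to update the link, or which old URL needs a redirect.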

8. Pagination, Hreflang for Content in Multiple Languages, and Multilingual Websites

- Pagination ensures content-heavy pages are accessible without overwhelming users.

- Hreflang annotations tell search engines which language (and, optionally, region) each version of a page targets and how the versions relate to each other, so the right content is served to the right audience.

- A well-structured multilingual site can tap into global markets and audiences.
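Hreflang annotations are typically added as `<link rel="alternate">` elements in each page’s `<head>`. The Python sketch below generates them from a hypothetical mapping of language (ISO 639-1) or language-region codes to URLs, plus an `x-default` fallback. The same set of tags should appear on every language version, including a reference to itself, because search engines expect hreflang annotations to be reciprocal.

```python
from html import escape


def hreflang_tags(alternates: dict[str, str], x_default: str) -> str:
    """Build the hreflang <link> elements shared by every language version of a page."""
    tags = [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}">'
        for code, url in sorted(alternates.items())
    ]
    # x-default: the version for users whose language/region matches none of the above.
    tags.append(f'<link rel="alternate" hreflang="x-default" href="{escape(x_default)}">')
    return "\n".join(tags)


# Hypothetical language versions of one page.
versions = {
    "en-us": "https://www.example.com/en-us/pricing/",
    "de": "https://www.example.com/de/pricing/",
}
print(hreflang_tags(versions, x_default="https://www.example.com/pricing/"))
```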

9. Register Your Website with Webmaster Tools

Tools like Google Search Console provide insights into how your site is performing in search, highlighting issues like crawl errors, broken links, or manual actions.

10. Stay On Top of Technical SEO Issues

The digital landscape is dynamic. Regularly audit your website for technical issues, stay updated with the latest best practices, and adjust your strategies accordingly.

Technical SEO Checklist

Technical SEO can be complex, but having a checklist can simplify the process, ensuring that you don’t miss out on any critical aspects. Here’s a comprehensive checklist to guide your efforts:

- Domain Configuration:

  - Choose a preferred domain (www vs. non-www).

  - Ensure HTTP to HTTPS redirect is set up if using SSL.

- Site Accessibility:

  - Test robots.txt to ensure crucial pages aren’t blocked.

  - Set up an XML sitemap and submit it to search engines.

- Website Speed:

  - Optimize images (size, format).

  - Use browser caching.

  - Minify CSS, JavaScript, and HTML.

  - Implement a Content Delivery Network (CDN) if necessary.

- Mobile Optimization:

  - Ensure responsive design.

  - Test mobile usability with tools like Lighthouse in Chrome DevTools (Google retired its Mobile-Friendly Test in December 2023).

- Indexing:

  - Check for noindex tags on important pages.

  - Use canonical tags to handle duplicate content.

  - Monitor crawl errors in webmaster tools.

- Site Architecture and Navigation:

  - Maintain a logical hierarchy.

  - Implement breadcrumb menus.

  - Keep depth shallow (users should be able to reach any page within 3-4 clicks).

- Structured Data:

  - Implement Schema.org markup where relevant.

  - Test structured data using Google’s Rich Results Test or the Schema Markup Validator, which replaced Google’s Structured Data Testing Tool.

- Content Issues:

  - Scan for duplicate content.

  - Fix or redirect broken links.

  - Set up a user-friendly custom 404 page.

- Multilingual and International SEO:

  - Use hreflang tags for multilingual content.

  - Ensure separate URL structures for different languages or regional content.

- Security:

  - Ensure SSL certificate is valid.

  - Monitor site for potential security issues or breaches.

- Core Web Vitals and User Experience:

  - Check Largest Contentful Paint (LCP), Interaction to Next Paint (INP), and Cumulative Layout Shift (CLS) scores. INP replaced First Input Delay (FID) as a Core Web Vital in March 2024.

  - Ensure interactive elements are user-friendly.

- Integration with Webmaster Tools:

  - Register the site with Google Search Console and Bing Webmaster Tools.

  - Monitor for crawl errors, manual actions, and sitemap status.

- Regular Audits:

  - Schedule regular technical SEO audits to identify and rectify issues.

  - Use tools like Screaming Frog, Ahrefs, or SEMrush for comprehensive scans.

Always remember that technical SEO is an ongoing process. As search algorithms evolve and website technologies change, it’s crucial to revisit this checklist and ensure your site remains up-to-date and optimized.

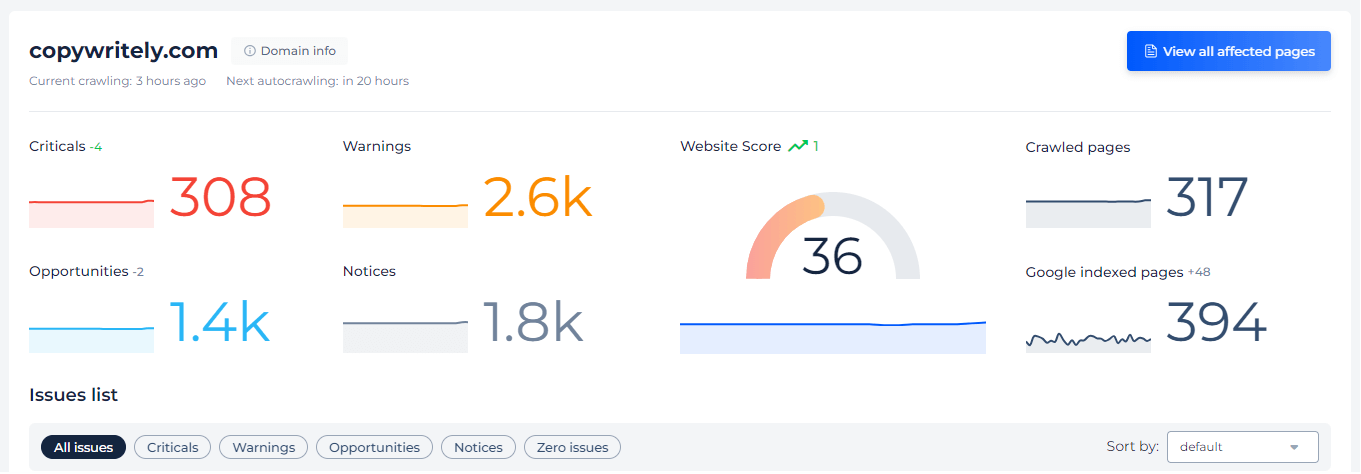

Website SEO Checker & Audit Tool to Boost Your On-Page SEO

The Website SEO Checker & Audit Tool by SiteChecker is an invaluable resource for webmasters and SEO professionals seeking to elevate their website’s search engine performance. This comprehensive tool offers a detailed analysis of a website’s overall SEO health, pinpointing areas that need improvement. By simply entering a website URL, users receive an instant evaluation covering key aspects such as on-page optimization, backlink profiles, and technical SEO. This makes it an essential tool for identifying issues that could be hindering a site’s search engine ranking and providing actionable insights for enhancement.

Beyond the basic SEO audit, this tool boasts additional features like tracking website ranking changes, monitoring competitors’ SEO strategies, and providing customized recommendations based on the latest search engine algorithms. It also offers a user-friendly interface that breaks down complex SEO data into understandable metrics, making it accessible for users of all expertise levels. With regular updates and tips, the Website SEO Checker & Audit Tool is not just a diagnostic instrument but a guide for continuous SEO improvement, making it a staple in any digital marketer’s toolkit.


Conclusion

Navigating the intricate realm of technical SEO might seem daunting, but its importance cannot be overstated. A solid technical foundation ensures that your content is accessible, both to users and search engines, laying the groundwork for all other SEO efforts. While the landscape of SEO continually shifts with algorithm updates and technological advancements, the tenets of technical SEO largely remain consistent. By ensuring your site is technically sound, you’re taking a pivotal step towards optimizing visibility, user experience, and ultimately, your site’s success in the digital ecosystem.